Uber’s on-call engineers facilitate seamless experiences for riders and drivers worldwide by maintaining the 24/7 reliability of our apps. To deliver on this reliability, we need to ensure that our on-call teams are set up for success.

Until January 2016, our on-call toolbox was dispersed across several systems, making it difficult for engineers to respond to alerts quickly and efficiently. Compounding this was the inability of the tracking solution we used at the time (email reports) to effectively relay contextual information about previous shifts to incoming on-call engineers. We needed a solution that would centralize our on-call toolbox and give the next on-call engineer a more thorough picture of the state of the system at handoff.

To architect a better on-call experience, our Site Reliability Engineering (SRE) team built a versatile new tool for real-time incident response, shift maintenance, and post-mortem analysis—all key components of a dynamic and responsive on-call system. Currently used by hundreds of teams across Uber, On-Call Dashboard incorporates annotations, runbooks, built-in response actions, and a standardized signal-to-noise ratio survey that makes it easier to interpret and address important alerts. The solution also tracks and visualizes metrics through detailed analytics that enable engineers to better understand the state of the system they are inheriting.

In this article, we introduce On-Call Dashboard by outlining its flagship features and sharing how engineering teams at Uber use this powerful tool.

All things on-call, all on one screen

While designing our new dashboard, we wanted to improve the user experience for on-call engineers by displaying all the necessary contextual information about a given shift on one screen and eliminating the need to switch between many tools.

Below, we discuss several key features of this dashboard:

- Annotations. One of the most valuable components of On-Call Dashboard is the ability to annotate alerts. Annotations are written, non-automated accounts of an alert’s context and the actions the on-call engineer took in response; when added consistently, they make it easier for future on-call engineers to resolve recurring alerts.

- Runbook. Finding the right runbook every time a corresponding alert goes off can be tedious. Imagine a perfect world in which every alert and its associated runbook were only a click away! On-Call Dashboard makes this possible.

- Built-in response actions. To act on annotations more efficiently, we also incorporated built-in response actions that acknowledge and resolve alerts. Since the source of truth for all alerts lives outside of On-Call Dashboard, the dashboard must proxy these state-changing actions to the respective services. Routing actions through the dashboard also lets us improve on those services: by importing service ownership information into built-in response actions, we created a closer mapping between broken services and the people who can fix them. This ensures that the team responsible for maintaining a service is notified even if ownership of that service has been transferred.
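The proxying and ownership lookup described in the list above can be sketched as follows. This is a minimal illustration, not On-Call Dashboard's actual code: the `AlertBackend` interface, the `ActionProxy` class, and the in-memory ownership map are all hypothetical stand-ins for the real alerting backends and service ownership registry.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class AlertBackend(Protocol):
    """Hypothetical interface for an external system that owns alert state."""

    def acknowledge(self, alert_id: str) -> None: ...
    def resolve(self, alert_id: str) -> None: ...


@dataclass
class Alert:
    alert_id: str
    source: str   # which backend is the source of truth for this alert
    service: str  # service that fired the alert


class InMemoryBackend:
    """Stand-in backend that just records the state it was asked to set."""

    def __init__(self) -> None:
        self.state: Dict[str, str] = {}

    def acknowledge(self, alert_id: str) -> None:
        self.state[alert_id] = "acknowledged"

    def resolve(self, alert_id: str) -> None:
        self.state[alert_id] = "resolved"


class ActionProxy:
    """Forwards dashboard actions to the alert's source of truth."""

    def __init__(self, backends: Dict[str, AlertBackend], owners: Dict[str, str]) -> None:
        self.backends = backends
        self.owners = owners  # service -> owning team (hypothetical ownership registry)

    def act(self, alert: Alert, action: str) -> str:
        backend = self.backends[alert.source]
        if action == "acknowledge":
            backend.acknowledge(alert.alert_id)
        elif action == "resolve":
            backend.resolve(alert.alert_id)
        else:
            raise ValueError(f"unsupported action: {action}")
        # Ownership is looked up at action time, so a service that changed
        # hands is routed to its current team rather than a stale one.
        return self.owners.get(alert.service, "unowned")


backend = InMemoryBackend()
proxy = ActionProxy({"pager": backend}, {"schemaless": "storage-team"})
team = proxy.act(Alert("a1", "pager", "schemaless"), "resolve")
```

The design choice illustrated here is that the dashboard never stores alert state itself; it only forwards actions, so the external system remains the single source of truth.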

Sorting signals from the noise

Noisy alerts take a hefty toll on everyone who “hears” them. Non-actionable or trivial alerts are distracting and waste valuable engineering time that could be better spent elsewhere. If engineers learn that a given alert is usually unimportant, the likelihood of human error increases when something really does go off the rails.

Since On-Call Dashboard already collects feedback about alerts through annotations, we also used annotations to flag noise. Because we needed a single tagging scheme standardized across all teams, we came up with a two-question signal-to-noise ratio (SNR) survey tied to annotations to help our engineers cut through the noise.

After polling different teams, we realized that SNR cannot be reduced to a single dimension: it needs to measure both accuracy (e.g., whether or not a service is actually broken) and actionability (e.g., whether a service under a specific team’s purview is broken and that team needs to fix it).

However, these metrics are meaningless if the survey is not mandatory. When teams consistently fill out the SNR survey as they annotate alerts, the On-Call Dashboard team can track the efficacy of each team’s monitoring. Once we have this data, we can work with teams to improve their SNR results moving forward.

The SNR survey helps engineers, and particularly managers, keep track of their on-call rotations, and it enables our team to build on-call solutions that better serve our users.

Categorizing SNR alerts

To make it easier for on-call engineers to annotate, SNR alerts fall into six categories based on actionability and accuracy, outlined in the grid below:

Not surprisingly, teams aim for as many of their alerts as possible to fall into the green zone (actionable and accurate) and as few as possible into the solid red zone (unactionable and of unknown accuracy, indicating serious alerting issues). We use three metrics to judge SNR level: percent SNR annotated, percent SNR accurate, and percent SNR actionable.
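The three SNR metrics above can be sketched as simple percentages. Since the category grid is not reproduced here, the encoding below is an assumption: accuracy has three answers (accurate, inaccurate, unknown) and actionability has two, which yields six combinations. We also assume that "percent accurate" and "percent actionable" are computed over SNR-annotated alerts only, not over all alerts.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Accuracy(Enum):
    ACCURATE = "accurate"      # the service really was broken
    INACCURATE = "inaccurate"  # false alarm
    UNKNOWN = "unknown"        # the engineer could not tell


class Actionability(Enum):
    ACTIONABLE = "actionable"      # the receiving team had to fix something
    UNACTIONABLE = "unactionable"  # nothing for this team to do


@dataclass
class SnrAnswer:
    accuracy: Accuracy
    actionability: Actionability


@dataclass
class Alert:
    alert_id: str
    snr: Optional[SnrAnswer] = None  # None means the survey was not filled in


def snr_metrics(alerts: List[Alert]) -> dict:
    """Return percent SNR annotated, accurate, and actionable."""
    if not alerts:
        return {"annotated": 0.0, "accurate": 0.0, "actionable": 0.0}
    tagged = [a.snr for a in alerts if a.snr is not None]
    pct_annotated = 100.0 * len(tagged) / len(alerts)
    if not tagged:
        return {"annotated": pct_annotated, "accurate": 0.0, "actionable": 0.0}
    # Assumption: accuracy and actionability are measured over tagged alerts.
    pct_accurate = 100.0 * sum(s.accuracy is Accuracy.ACCURATE for s in tagged) / len(tagged)
    pct_actionable = 100.0 * sum(
        s.actionability is Actionability.ACTIONABLE for s in tagged
    ) / len(tagged)
    return {"annotated": pct_annotated, "accurate": pct_accurate, "actionable": pct_actionable}


shift = [
    Alert("a1", SnrAnswer(Accuracy.ACCURATE, Actionability.ACTIONABLE)),    # green zone
    Alert("a2", SnrAnswer(Accuracy.UNKNOWN, Actionability.UNACTIONABLE)),   # solid red zone
    Alert("a3"),
    Alert("a4"),
]
print(snr_metrics(shift))  # {'annotated': 50.0, 'accurate': 50.0, 'actionable': 50.0}
```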

SNR survey results are saved along with other alert properties for further analysis, and are incorporated into the On-Call Dashboard statistics and shift report emails.

Measuring shift quality

Another major consideration while designing On-Call Dashboard was how to use the tool not only to monitor alerts and SNR, but also to ensure that on-call load was distributed evenly among teams. To do this successfully, we had to quantify and track current sentiment about on-call responsibilities, both for individual teams and for our organization as a whole.

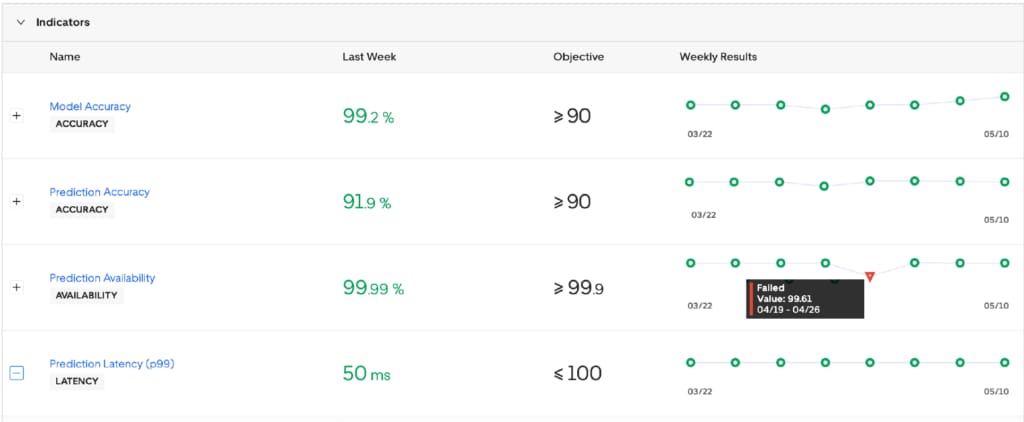

To address this, we developed a generic function encompassing various on-call shift metrics. For our use case, the function outputs two numbers: on-call shift load and the quality of on-call engineer actions. Load, quality, and their underlying metrics are intended as KPIs for measuring the on-call experience against target goals and tracking progress over time. The initial implementation computes these scores from the following metrics: alert count, disturbance, runbook quality, annotations, signal-to-noise ratio, and orphaned alerts. Below, we take a closer look at each of these metrics:

- Alerts. The Alerts metric is based on a weighted alert count: low-urgency alerts count less than high-urgency alerts, and manually created or escalated alerts, which take more time to resolve, count more. The first few alerts are ignored; after that, alerts have a sharp but decaying impact on the overall shift load score.

- Disturbance. The Disturbance score reflects how on-call activity is distributed across the shift: it increases if an engineer is interrupted repeatedly throughout the shift. To evaluate this, we deduct fifteen minutes of productive time for every alert.

- Runbooks. Each alert has a runbook and a runbook quality score assigned by on-call engineers during previous shifts. The Runbooks (Poor Runbooks) metric is simply 100 percent minus the mean runbook score. A Poor Runbooks metric of zero percent means that every runbook score was five stars across all alerts during that shift.

- Annotations and SNR. The Annotations and SNR metrics represent the fraction of annotated and SNR-tagged alerts, respectively. A high annotation and tagging rate means that more complete information about the shift is available at handoff and collected for future analysis.

- Orphaned Alerts. Orphaned alerts, meaning alerts that are still open at the end of the shift but are not annotated, indicate poor on-call engagement and increase the workload of the next shift. The Orphaned Alerts metric affects shift quality much as the Alerts metric affects load: the first few orphaned alerts are ignored, after which quality decreases exponentially.
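To make the metrics above concrete, here is a minimal sketch that combines them into a (load, quality) pair. Only the fifteen-minute deduction and the five-star runbook scale come from the text; the urgency weights, the number of "free" alerts, the saturation constant, the 12-hour shift, the merging of overlapping disturbance windows, and the equal-weight averaging are all our own assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

FREE_ALERTS = 3       # assumption: the first few alerts carry no penalty
SCALE = 5.0           # assumption: how quickly the penalty saturates
DISTURBANCE_MIN = 15  # minutes of productive time lost per alert (from the text)


@dataclass
class Alert:
    minute: int                          # minutes since the start of the shift
    high_urgency: bool = True
    manual_or_escalated: bool = False
    annotated: bool = False
    snr_tagged: bool = False
    runbook_stars: Optional[int] = None  # 1-5 rating, None if unrated
    open_at_end: bool = False


def weight(a: Alert) -> float:
    w = 1.0 if a.high_urgency else 0.5  # assumed urgency weights
    return w * (2.0 if a.manual_or_escalated else 1.0)


def saturating(count: float) -> float:
    """0 for the first FREE_ALERTS, then a sharp but decaying rise toward 1."""
    excess = max(0.0, count - FREE_ALERTS)
    return 1.0 - math.exp(-excess / SCALE)


def disturbance(alerts: List[Alert], shift_min: int) -> float:
    """Fraction of the shift lost; overlapping 15-minute windows are merged."""
    lost, busy_until = 0, 0
    for a in sorted(alerts, key=lambda a: a.minute):
        start = max(a.minute, busy_until)
        end = min(a.minute + DISTURBANCE_MIN, shift_min)
        lost += max(0, end - start)
        busy_until = max(busy_until, end)
    return lost / shift_min


def shift_scores(alerts: List[Alert], shift_min: int = 12 * 60) -> Tuple[float, float]:
    """Return (load, quality), each in [0, 1]; equal weighting is an assumption."""
    n = len(alerts)
    alert_score = saturating(sum(weight(a) for a in alerts))
    load = (alert_score + disturbance(alerts, shift_min)) / 2

    rated = [a.runbook_stars for a in alerts if a.runbook_stars is not None]
    # Poor Runbooks: 1 minus the mean score, with five stars mapped to 100 percent.
    poor_runbooks = 1.0 - sum(s / 5 for s in rated) / len(rated) if rated else 0.0
    annotation_rate = sum(a.annotated for a in alerts) / n if n else 1.0
    snr_rate = sum(a.snr_tagged for a in alerts) / n if n else 1.0
    orphaned = saturating(sum(a.open_at_end and not a.annotated for a in alerts))
    quality = ((1 - poor_runbooks) + annotation_rate + snr_rate + (1 - orphaned)) / 4
    return load, quality


quiet = [Alert(0, annotated=True, snr_tagged=True, runbook_stars=5),
         Alert(60, annotated=True, snr_tagged=True, runbook_stars=5)]
noisy = [Alert(30 * i, open_at_end=True) for i in range(20)]
print(shift_scores(quiet), shift_scores(noisy))
```

Merging overlapping windows is one way to capture "distribution": a burst of alerts costs less productive time than the same number of alerts spread across the shift.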

Individual components comprising the load and quality values along with individual on-call shift metrics are included in shift reports shared with the next on-call engineers. These shift reports are essentially a snapshot of the on-call situation at the end of a given shift, including an alert timeline, depicted in Figure 9 below:

The reports include an alert timeline, a compacted list of all alerts and their annotations, and a free-form summary written by the engineer going off duty. Summarized stats and quality numbers are included in the report as well.
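A shift report of the kind described above is essentially a small data structure rendered as text. The sketch below is hypothetical: the `ShiftReport` and `AlertEntry` names, the field layout, and the plain-text format are our assumptions, not the actual report email.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class AlertEntry:
    fired_at: datetime
    name: str
    annotation: str = ""


@dataclass
class ShiftReport:
    engineer: str                                     # outgoing on-call engineer
    alerts: List[AlertEntry] = field(default_factory=list)
    summary: str = ""                                 # free-form hand-off note
    load: float = 0.0
    quality: float = 0.0

    def render(self) -> str:
        lines = [f"Shift report from {self.engineer}",
                 f"Load: {self.load:.2f}  Quality: {self.quality:.2f}",
                 f"Alerts: {len(self.alerts)}", "Timeline:"]
        # Timeline in chronological order; unannotated alerts are called out.
        for a in sorted(self.alerts, key=lambda a: a.fired_at):
            note = a.annotation or "(not annotated)"
            lines.append(f"  {a.fired_at:%H:%M} {a.name}: {note}")
        lines += ["Summary:", f"  {self.summary}"]
        return "\n".join(lines)


report = ShiftReport(
    "alice",
    [AlertEntry(datetime(2016, 1, 1, 14, 5), "disk_full", "Cleaned old logs"),
     AlertEntry(datetime(2016, 1, 1, 9, 30), "high_latency")],
    "Quiet shift overall.", load=0.2, quality=0.9)
print(report.render())
```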

Analytics

In addition to tracking specific alerts, SNR, and metrics, On-Call Dashboard incorporates detailed analytics that visualize this on-call data, helping us improve processes and, ultimately, the overall on-call experience for our engineers. Our analytics solution is built on Elasticsearch and Kibana (both used widely across our tech stack), since Kibana’s tables, line and column charts, and big-number visualizations suit our size and scale.

Each alert is stored in Elasticsearch as a separate document containing alert details such as alert handling times, annotations, and alert source, with a total of over seventy attributes. A shift document containing multiple numeric fields used for aggregation of shift-specific data is also posted to Elasticsearch immediately after the end of each shift. A summary of shift activity is composed of over ninety attributes, including annotation statistics, SNR-annotation statistics, and alert duration statistics, among others. In addition to weekly, monthly, and yearly dashboards, on-call teams can create customized on-call summaries to display team-specific data.
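As an illustration of how shift documents lend themselves to aggregation, the sketch below builds an Elasticsearch `_search` request body that summarizes one team’s shifts over the past week. The query structure (`bool` filter, `range` with date math, `sum`, `avg`, and `date_histogram` aggregations) is standard Elasticsearch Query DSL, but the field names (`team`, `shift_end`, `alert_count`, `load`, `quality`) are hypothetical, `team` is assumed to be a keyword field, and `calendar_interval` requires Elasticsearch 7.2 or later (older versions use `interval`).

```python
import json


def weekly_shift_summary_query(team: str) -> dict:
    """Build a _search request body aggregating one team's shift documents."""
    return {
        "size": 0,  # only the aggregations are needed, not the documents
        "query": {
            "bool": {
                "filter": [
                    {"term": {"team": team}},
                    {"range": {"shift_end": {"gte": "now-7d/d"}}},
                ]
            }
        },
        "aggs": {
            "alerts_total": {"sum": {"field": "alert_count"}},
            "avg_load": {"avg": {"field": "load"}},
            "avg_quality": {"avg": {"field": "quality"}},
            "per_day": {
                "date_histogram": {"field": "shift_end", "calendar_interval": "day"},
                "aggs": {"alerts": {"sum": {"field": "alert_count"}}},
            },
        },
    }


print(json.dumps(weekly_shift_summary_query("schemaless"), indent=2))
```

Kibana visualizations issue similar aggregation requests under the hood, which is why storing one numeric-heavy document per shift makes weekly, monthly, and yearly dashboards cheap to build.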

Figures 10-13, below, outline a few visualization use cases accessible through On-Call Dashboard:

Now that we have introduced all of these features, let us take a look at how On-Call Dashboard has been applied to improve the experiences of on-call engineers from our Schemaless team.

From data to action: Schemaless use case

Schemaless is Uber Engineering’s scalable, MySQL-powered datastore that has enabled us to grow our infrastructure at scale. Given the sheer scope of Schemaless, on-call engineers are critical to ensuring a seamless user experience for others using the datastore.

Access to easy-to-interpret, actionable metrics through On-Call Dashboard was the first big win for this team. The left side of the chart in Figure 14, below, depicts their initial harvest of the low-hanging fruit of our all-in-one on-call monitoring and reporting system: measuring alerts.

The second major boon for the Schemaless team was the ability to annotate these alerts. As depicted in Figure 14, as soon as the share of annotated alerts approaches 100 percent, the total number of alerts begins to steadily decline, suggesting that once the root cause of an alert (or group of alerts) is identified, it can be resolved. Annotations also enrich the team’s weekly stats updates, since the team now has detailed information about the cause of each alert and the actions taken in response.

The Schemaless team can share these insights with other teams that might receive similar alerts while on-call. Additionally, On-Call Dashboard analytics visualizations enable the team to identify alerts that occur more frequently than others so that it can concentrate on them.
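Finding the alerts that fire most often boils down to a frequency count over alert names. A minimal sketch using the standard library’s `collections.Counter`; the function name and the sample alert names are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def noisiest_alerts(alert_names: Iterable[str], top: int = 3) -> List[Tuple[str, int]]:
    """Return the `top` most frequent alert names with their counts."""
    return Counter(alert_names).most_common(top)


fired = ["disk_full", "replication_lag", "disk_full",
         "cpu_high", "disk_full", "replication_lag"]
print(noisiest_alerts(fired))  # [('disk_full', 3), ('replication_lag', 2), ('cpu_high', 1)]
```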

To get the most from this solution, the team displayed On-Call Dashboard on a large monitor visible to all team members during the workday, making it easy to collaboratively monitor the health of the service at all times. By keeping tabs on the dashboard even during off-shift hours, team members stayed aware of what was going on with their system at all hours of the day, fostering greater collaboration and team-wide ownership of the on-call process. This sense of collaboration also increased empathy among team members, who now understand the full extent of on-call demands during off hours.

A more seamless on-call experience for everyone

On-Call Dashboard is currently used by hundreds of teams across Uber to facilitate more seamless experiences for our on-call engineers. As Uber’s services continue to grow at scale, this new tool and other SRE solutions will ensure that these services run like clockwork at all hours of the day. If architecting systems to boost engineer productivity and improve the on-call experience appeals to you, consider applying for a role on our team!

Vytautas Saltenis and Marijonas Petrauskas are software engineers, and Edvinas Vyzas is an engineering manager. All three are based in Uber’s Vilnius, Lithuania office.

Edvinas Vyzas

Edvinas Vyzas is an engineering manager on Uber's Observability Engineering team.

Marijonas Petrauskas

Marijonas Petrauskas is a senior software engineer on Uber's Compute Platform team.

Posted by Vytautas Saltenis, Edvinas Vyzas, Marijonas Petrauskas
