Denial By DNS: Uber’s Open Source Tool for Preventing Resource Exhaustion by DNS Outages

December 5, 2017 / Global

In June 2016, an unresponsive third-party Domain Name System (DNS) server caused an outage of a legacy login service, affecting riders and drivers trying to access the Uber app. While the issue was mitigated in minutes, discovering why this happened was far more challenging. As part of Uber’s commitment to architecting stable and reliable transportation solutions, our engineering teams work hard to prevent, respond to, and mitigate outages that stand in the way of a seamless user experience.

In this article, we strive to answer the question: how can a third-party service degrade data center-local (or even host-local) connectivity of a web service? While this login service has been deprecated, our experience led us to create a new solution for identifying and preventing these types of outages. Read on to learn how an interaction between Node.js and an unresponsive DNS server caused a service outage, walk through a brief history of request handling, and meet Denial By DNS, our open source solution for preventing unintentional denial-of-service (DoS) by DNS outages.

Technical background: DNS

Most engineers associate DoS by DNS with cyberattacks, but some runtimes are also vulnerable to resource exhaustion in the presence of DNS slowdowns. More specifically, using external APIs can impact a service’s critical internal calls.

Below, we offer some background on domain name resolution on Linux (Uber’s current infrastructure operating system) and on threading models for web servers to contextualize our use case.

Using DNS on Linux

At a high level, the DNS is responsible for resolving domain names to machine-understandable IP addresses. These names can be internet-wide (like uber.com), network-local (e.g., openwrt.lan might resolve to a router in the current home network), or host-local (e.g., localhost, which could resolve to either ::1 or 127.0.0.1).

Resolving an external domain requires calling external DNS servers, which can take much longer than resolving localhost. However, the interface on Linux is the same for resolving uber.com and localhost: the libc call getaddrinfo(3). Its signature is:

    int getaddrinfo(const char *node, const char *service,
                    const struct addrinfo *hints,
                    struct addrinfo **res);

As one can see from the function signature and the manual, the call is synchronous: there is no way to ask the DNS to “resolve this name and get back to me when complete.”
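To make the blocking behavior concrete, here is a minimal sketch in Python, whose `socket.getaddrinfo` is assumed here to delegate to the platform's getaddrinfo(3) (as CPython does on Linux). The helper name `resolve` is ours, not part of any library.

```python
import socket
import time


def resolve(host, port=80):
    """Blocking lookup: return the sorted, de-duplicated addresses for host."""
    # The calling thread does nothing else until this call returns or fails.
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the textual IP address for both IPv4 and IPv6.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    start = time.monotonic()
    addresses = resolve("localhost")
    elapsed_ms = (time.monotonic() - start) * 1000
    print(f"localhost -> {addresses} after {elapsed_ms:.2f} ms")
```

The same call with an external name would hold the thread for as long as the DNS server takes to answer, which is the property the rest of this article depends on.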

Request handling with processes and threads

Now that we understand how DNS queries are resolved in a libc-based implementation, let us discuss the three types of threading models to better grasp how the interaction between Node.js (the runtime with which we built our login service) and a third-party service caused an outage.

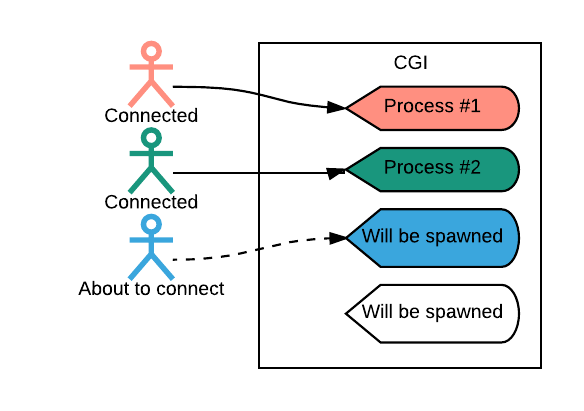

CGI model

Back in the early 1990s, incoming web requests were handled by forking off a child process. Dynamic content (i.e., non-static content) was handled via the common gateway interface (CGI), as depicted in Figure 1.

This model clearly separated application code (e.g., in Tcl, Perl, or C) from web server code (such as Apache), with an easy-to-understand interface between the two. As a result, this model was attractive to developers. CGI worked well then since fewer people used the Internet, and programming languages did not need first-class support for Hypertext Transfer Protocol (HTTP), which offered more language options for building web applications.

However, as the Internet grew in popularity, engineers realized that starting and shutting down processes at rapid speed is too memory- and CPU-intensive to be sustainable. To save hardware and electricity resources, the industry had to develop better ways of handling incoming user requests.

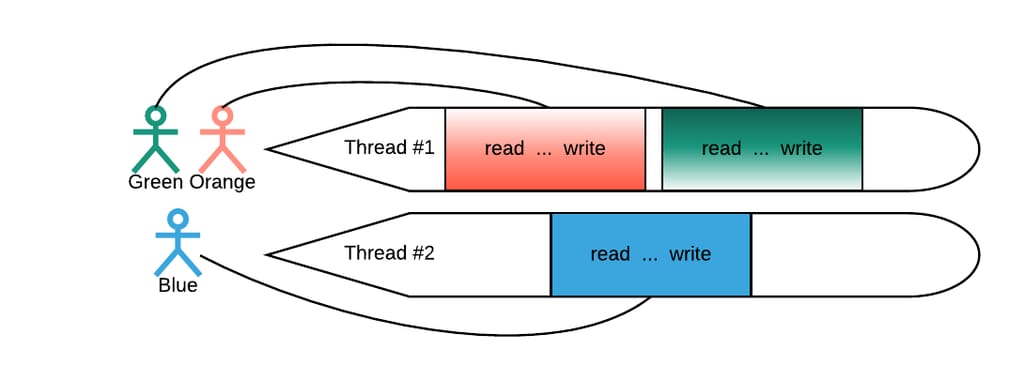

Thread pool (or 1:M) model

Given that the expensive part of managing incoming requests is spawning processes, the intuitive solution is to reuse processes for multiple users. That means that at any given time, a fixed number of processes wait to handle incoming requests.

While process pooling is effective, most runtimes choose to implement thread pools. A thread pool is a fixed number of threads, each handling a user request from beginning to end, as depicted in Figure 2.

To serve a request, the application needs to read the incoming user’s query, execute the business logic (portrayed by the three dots in Figure 2), and write the result back to the user. Figure 2 highlights a scheduling example with three users and two threads, in which a user “owns” the thread throughout the request process. Because one thread serves M customers over time, one request at a time, the model is called 1:M.

Now that we understand the thread pool model, how do we actually configure it? A CPU core can run a single thread at a time, so it is common to have at least N threads in the thread pool, where N is the number of available cores.
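As a rough illustration of this sizing rule, the sketch below builds a pool with one thread per available core using Python's standard `concurrent.futures`. The `handle_request` and `serve` helpers are hypothetical stand-ins for a real request handler, not code from the login service.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def handle_request(user):
    """One worker thread owns the request from read to write."""
    query = f"query from {user}"         # read the user's query
    result = query.upper()               # execute the business logic
    return f"reply to {user}: {result}"  # write the result back


def serve(users, workers=None):
    # Default to one thread per available core (fall back to 1 if unknown).
    workers = workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input users.
        return list(pool.map(handle_request, users))


if __name__ == "__main__":
    for reply in serve(["alice", "bob", "carol"], workers=2):
        print(reply)
```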

The 1:M model is still a popular approach in modern web technologies. However, to squeeze out the server’s last CPU cycles, we turned to another request handling approach: the M:N model.

M:N model

To deliver a user’s request, services frequently require additional information from a local network or disk. For example, in Uber’s former login service, every request spawned a new request to the user or third-party service. During that time, the thread handling the request waits for a reply from the network before it acts. This was exactly the case for our login service; computation was minimal and most time was spent waiting for a reply from other services.

Since a CPU core can run only one thread at a time, it is common to “oversubscribe” threads. That way, threads that are waiting for an outside reply are taken off (descheduled by) the CPU, and only threads that are doing work are scheduled. On standard web applications that use thread pools, a significant portion of time is spent waiting for external requests. To keep the CPU busy, there needs to be a much larger number of threads than there are cores.
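The effect of oversubscription can be sketched with a small timing experiment. Here `time.sleep` stands in for waiting on another service, and the request count, pool sizes, and delay are arbitrary values chosen for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def wait_for_dependency(delay):
    # Stand-in for a network call: the thread is idle, not using the CPU.
    time.sleep(delay)
    return delay


def run_batch(requests, workers, delay=0.1):
    """Return the wall-clock seconds needed to finish all requests."""
    start = time.monotonic()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(wait_for_dependency, [delay] * requests))
    return time.monotonic() - start


if __name__ == "__main__":
    print(f"2 threads: {run_batch(8, workers=2):.2f} s")
    print(f"8 threads: {run_batch(8, workers=8):.2f} s")
```

With two threads, the eight waits run in four rounds; with eight threads they overlap, so the larger pool finishes sooner even though no extra CPU work is done.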

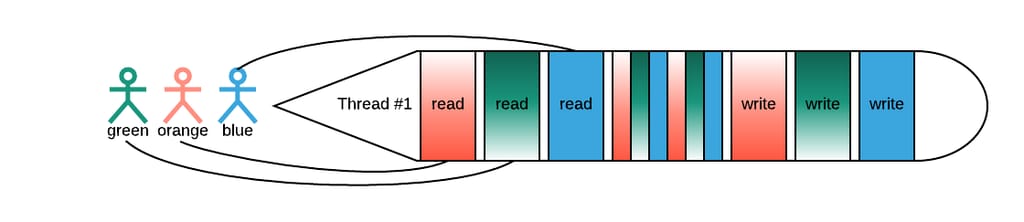

Context switches (descheduling one thread from a CPU core and scheduling another) can be expensive. (For more on this topic, check out “How long does it take to make a context switch?”) At scale, context switches are so resource-intensive that engineers invented a completely different approach: M:N scheduling, depicted in Figure 3.

As illustrated in Figure 3, the thread needs to read the user’s request, then execute some processing (represented in the figure by narrow stripes of color), and finally write. With M:N scheduling, the thread interleaves small pieces of work for several users as opposed to handling one user at a time.

In the M:N threading model, the thread processes the request of the user whose input is readily available at the time. Once information for a second (third, fourth, and so on) user is fetched from the network or disk, the given user’s input is scheduled back to the thread and continued until another external call or completion (write).

In this scenario, there can be M threads handling short bursts of user computation, and N cores on which these are scheduled, hence the name M:N. Since CPU cores are not blocked on external calls, it is common for M=N.

Since threads are not blocked by user input, CPU cores are always doing work, but because there are not many threads, context switches are rare.
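The interleaving can be sketched with Python's `asyncio`, which runs many requests on a single event-loop thread (the M=1 case of this model). The `handle_user` coroutine and the 50 ms sleep are illustrative assumptions standing in for a call to another service.

```python
import asyncio
import threading


async def handle_user(user, log):
    log.append((user, "read", threading.get_ident()))
    # Awaiting tells the event loop "I am waiting, run someone else".
    await asyncio.sleep(0.05)  # stand-in for a call to another service
    log.append((user, "write", threading.get_ident()))
    return f"done {user}"


async def serve(users):
    log = []
    results = await asyncio.gather(*(handle_user(u, log) for u in users))
    return results, log


if __name__ == "__main__":
    results, log = asyncio.run(serve(["alice", "bob", "carol"]))
    for user, step, thread_id in log:
        print(user, step, thread_id)
```

All three reads are logged before any write, and every entry carries the same thread identifier: one thread advanced three requests without blocking on any of them.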

For Uber’s login service, the M:N model worked well until we began experiencing negative interactions between DNS and user M:N scheduling in Node.js.

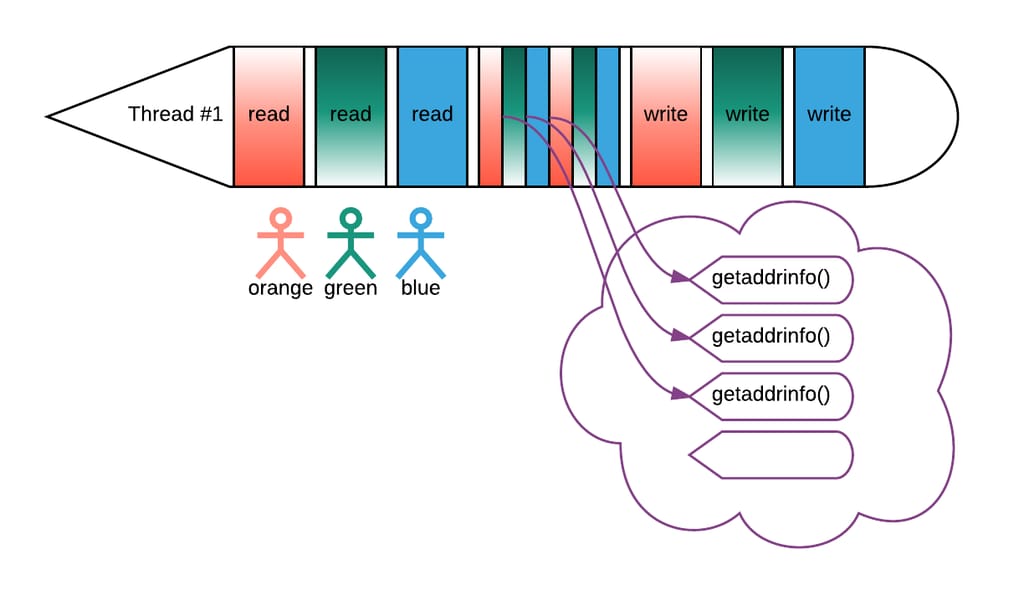

DNS in M:N scheduling

The M:N model works only when application code can clearly state “I am waiting for a network reply right now, feel free to deschedule me.” Behind the scenes, this translates to a select(2) (or equivalent) on multiple sockets. However, asynchronous interfaces for I/O and DNS calls are generally not fast or reliable, so these operations often end up as synchronous calls.

A time-intensive synchronous call will block the thread and manifest the same problem as the 1:M thread pools above: a halted thread doing no work while it waits for a reply from an external entity.

To avoid this, it is possible to dispatch the synchronous system calls to a separate thread pool.

In this scenario, synchronous system calls executing on dedicated threads no longer block the main ones. Usually DNS and I/O do not happen as frequently as calling external services, so the per-request cost of context switching decreases.

In many ways, M:N scheduling with dedicated thread pools offered the ideal solution for Uber’s login service since main threads are always doing work and not competing for CPUs. Meanwhile, a thread pool for expensive synchronous calls offloads slow operations from the main threads.
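A minimal sketch of this dispatch pattern, again using `asyncio` as a stand-in for the runtime: `blocking_lookup` is a hypothetical placeholder for a synchronous call such as getaddrinfo(3), and the pool size of four is chosen only for illustration.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_lookup(name, delay):
    # Stand-in for a synchronous call such as getaddrinfo(3).
    time.sleep(delay)
    return f"{name} resolved"


async def demo(delay=0.2):
    loop = asyncio.get_running_loop()
    order = []
    with ThreadPoolExecutor(max_workers=4) as pool:

        async def lookup():
            # The blocking call runs on a pool thread, not the main thread.
            result = await loop.run_in_executor(
                pool, blocking_lookup, "example.org", delay)
            order.append("lookup")
            return result

        async def other_work():
            # The main thread stays free to make progress meanwhile.
            await asyncio.sleep(delay / 4)
            order.append("other work")

        result, _ = await asyncio.gather(lookup(), other_work())
    return result, order


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

The other work finishes first even though the lookup started earlier, because the slow call never occupied the main thread.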

With knowledge of DNS resolution on Linux, thread pools, M:N scheduling, and synchronous system calls in our back pockets, let us now discuss how this technical combination led to a login service outage.

Analyzing our login service outage

In summer 2016, an unresponsive third-party DNS server caused an Uber login service outage that affected some riders and drivers trying to log onto the app. For a very short period of time, a large portion of our users were not able to log in, either through an external provider or by manually entering their username and password.

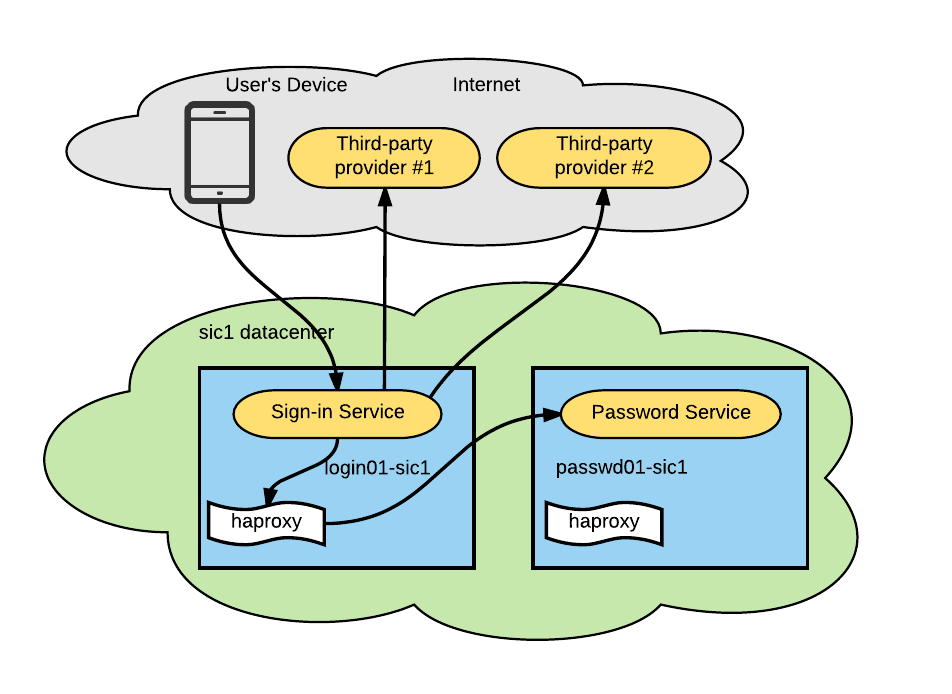

Our login service communicated with other services using the standard Node.js HTTP client. To communicate with other services inside the same data center, the login service connected to a router agent (HAProxy) on localhost, an arrangement also known as the sidecar pattern. The router agent then proxied the request to an appropriate dependency (in our case, a password service).

The service architecture was composed of:

- A sign-in service (on HTTP) responsible for accepting a username/password combination and returning a session token.

- A password service which determines whether the pair of credentials is correct.

- A third-party provider which enables additional login methods.

The combination of these three pieces led to our outage. We outline its progression below:

- DNS queries to the third-party provider started taking longer than usual. Initially, we assumed that this was because of a misconfigured third-party DNS server out of Uber’s control.

- Then, the login service was unable to reach not just these providers, but also the internal password service and all services inside the network, even on localhost.

- Around the same time, we confirmed an outage of one of our providers. A DNS query to its endpoint confirmed that the DNS response times jumped up from tens of milliseconds to tens of seconds.

During the outage, we noticed that login in one of our data centers was not affected, and we were able to mitigate the outage within minutes by moving customer traffic to a non-impacted data center. However, we were still confused as to why the outage occurred in the first place.

Root cause analysis

Resolving localhost to ::1 (which is needed to connect to the local sidecar) involves calling a synchronous getaddrinfo(3). This operation is done in a dedicated thread pool (with a default size of four in Node.js). We discovered that the slow DNS responses occupied every thread in that pool, making it impossible for the pool to quickly serve localhost to ::1 conversions.

As a result, none of our DNS queries went through (even for localhost), meaning that our login service was not able to communicate with the local sidecar to test username and password combinations, nor call other providers. From the Uber app perspective, none of the login methods worked, and the user was unable to access the app.
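The failure mode can be reproduced in miniature with a simulation. This is not the Node.js implementation: `fake_getaddrinfo` is a made-up resolver in which external names hang and localhost answers instantly, and the returned addresses are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor

POOL_SIZE = 4  # the Node.js default mentioned above


def fake_getaddrinfo(host, slow_seconds):
    # Simulated resolver: external names hang, localhost is instant.
    if host != "localhost":
        time.sleep(slow_seconds)
    return "::1" if host == "localhost" else "203.0.113.10"


def localhost_latency(slow_lookups, slow_seconds=0.3):
    """Seconds until a localhost lookup completes behind slow lookups."""
    with ThreadPoolExecutor(max_workers=POOL_SIZE) as pool:
        for _ in range(slow_lookups):
            pool.submit(fake_getaddrinfo, "third-party.example", slow_seconds)
        start = time.monotonic()
        pool.submit(fake_getaddrinfo, "localhost", slow_seconds).result()
        return time.monotonic() - start


if __name__ == "__main__":
    print(f"3 slow lookups: {localhost_latency(3):.3f} s")
    print(f"4 slow lookups: {localhost_latency(4):.3f} s")
```

With three slow lookups in flight, one worker remains free and localhost resolves immediately. With four, the localhost lookup has to queue behind an external one, even though it needs no network access at all.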

To prevent this from happening in the future at Uber and elsewhere, we created an open source solution to test whether or not a service’s language is affected by this type of DNS interaction, as well as put together a series of precautionary measures for avoiding this type of outage, both of which are introduced in our next section.

Our solution: Denial By DNS

After conducting research on how our DNS scheduling caused this outage, we decided to contribute back some of our findings to the open source community via Denial by DNS, our solution to test whether or not your service’s language is susceptible to a DoS by DNS outage.

To test if your language is affected, write a program which follows these simple steps:

- Call http://localhost:8080. This call should always work; if it fails, there is an error in the test setup.

- Call http://example.org N times in parallel; do not wait for the result. N is usually slightly more than the default thread pool size.

- Wait a few seconds to make sure all calls are scheduled.

- Call http://localhost:8080. This call will succeed if the application is not vulnerable.

Scripts check the number of times http://localhost:8080 is called:

- 0: there is an error with the setup, as the script should succeed at least once.

- 1: the application is vulnerable; in other words, the first invocation succeeded while the second failed.

- 2: the application is not vulnerable.
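The steps and verdicts above can be sketched as a self-contained simulation. This is not the code from the Denial by DNS repository: `SimulatedClient` is a hypothetical client whose name lookups share one small pool, successes are counted on the client side rather than by a server on port 8080, and the timings are shortened so the sketch runs quickly.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError

VERDICTS = {0: "setup error", 1: "vulnerable", 2: "not vulnerable"}


class SimulatedClient:
    """Hypothetical client whose name lookups all share one small pool."""

    def __init__(self, pool_size, slow_seconds):
        self.pool = ThreadPoolExecutor(max_workers=pool_size)
        self.slow_seconds = slow_seconds

    def _lookup(self, host):
        if host != "localhost":
            time.sleep(self.slow_seconds)  # simulated slow DNS server
        return host

    def call(self, host, timeout=None):
        """Return True if the lookup finished in time (or was not awaited)."""
        future = self.pool.submit(self._lookup, host)
        if timeout is None:
            return True  # fire and forget: do not wait for the result
        try:
            future.result(timeout=timeout)
            return True
        except TimeoutError:
            return False


def run_check(client, n, settle_seconds=0.1, timeout=0.2):
    successes = 0
    successes += int(client.call("localhost", timeout=timeout))  # step 1
    for _ in range(n):                                           # step 2
        client.call("example.org")
    time.sleep(settle_seconds)                                   # step 3
    successes += int(client.call("localhost", timeout=timeout))  # step 4
    return VERDICTS[successes]


if __name__ == "__main__":
    print(run_check(SimulatedClient(pool_size=4, slow_seconds=0.5), n=5))
    print(run_check(SimulatedClient(pool_size=16, slow_seconds=0.5), n=5))
```

A pool of four is exhausted by five slow lookups, so the second localhost call times out; a pool of sixteen still has idle workers and the same call succeeds.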

As part of this exercise, we tested services in Erlang, Go, Node.js, and Tornado environments to determine whether M:N scheduling is “safe” (meaning, it does not cause DNS reliability issues) across these runtimes:

| Name | Comment | Safe |
| --- | --- | --- |
| erlang-httpc | Erlang 20 with inets httpc | unsafe |
| golang-http | Golang 1.9 with ‘net/http’ from stdlib | safe |
| nodejs-http | Node 8.5 with ‘http’ from stdlib | unsafe |
| python3-tornado | Python 3.5.3 with Tornado 4.4.3 | unsafe |

With our new tool, all you need to do is run a simple test to determine whether or not your runtime is affected by this interaction between DNS and M:N scheduling.

Beyond using Denial by DNS, there are a few other tactics that can be taken to avoid potentially precarious language-DNS interactions:

Avoid getaddrinfo() calls entirely

To prevent getaddrinfo(3) from blocking the runtime, one can resolve DNS names without making the call at all. For example, node-dns is a DNS resolver written in pure JavaScript, which avoids this problem. Note that non-libc implementations might return different results than native calls.

Replace well-known domains with IP addresses

To keep the service connected to local systems (e.g., via a sidecar on localhost), one can replace localhost with ::1 or 127.0.0.1. This change eases symptoms of resource exhaustion; while external outgoing traffic that depends on DNS will be blocked, the preconfigured internal endpoints will keep functioning.

Still, this is not a complete solution to the problem; it only helps when a subset of the outgoing calls (in our case, those within the same data center) does not depend on DNS.
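To illustrate why an IP literal sidesteps name resolution, the sketch below passes the standard `AI_NUMERICHOST` flag to getaddrinfo, which makes it accept only numeric addresses and refuse to perform any name lookup. The helper name `resolve_literal` and port 8080 are illustrative; whether a given runtime skips its resolver thread pool for literals is an assumption to verify for your own stack.

```python
import socket


def resolve_literal(host, port):
    """Accept only IP literals; never consult DNS or the hosts file."""
    # AI_NUMERICHOST makes getaddrinfo reject anything that is not already
    # a numeric address, so no name lookup can take place.
    infos = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM, flags=socket.AI_NUMERICHOST)
    return infos[0][4][0]


if __name__ == "__main__":
    print(resolve_literal("127.0.0.1", 8080))
    try:
        resolve_literal("localhost", 8080)
    except socket.gaierror as err:
        print("localhost is a name, not a literal:", err)
```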

Use a non-affected language

During our post-mortem research, we tested a few runtimes with M:N scheduling, and verified that some of them are unaffected. While rewriting existing services in an unaffected runtime may be a heavy ask for preventing this issue, it might be worth considering before developing a new system that will interface with third-party services.

Other takeaways

While our incident mitigation was quick and successful, data center failover is considered to be the last resort. After our outage analysis, we added a more lightweight mitigation technique should this happen again: a runtime configuration flag to disable functionality that depends on external services. With a few clicks, we can disable any of our third-party providers and mitigate the problem for the remainder of users without moving all of our traffic to another data center.
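A minimal sketch of such a flag, assuming an in-memory flag store: the `ProviderFlags` class, the `login` dispatcher, and the provider names are all hypothetical, and a production version would read flags from a runtime configuration system instead.

```python
class ProviderFlags:
    """In-memory stand-in for a runtime configuration store."""

    def __init__(self):
        self._disabled = set()

    def disable(self, provider):
        self._disabled.add(provider)

    def enable(self, provider):
        self._disabled.discard(provider)

    def is_enabled(self, provider):
        return provider not in self._disabled


def login(method, flags, handlers):
    """Dispatch a login attempt, skipping providers that are switched off."""
    if not flags.is_enabled(method):
        # Fail fast: no DNS lookup or network call is attempted.
        return "method temporarily unavailable"
    return handlers[method]()


if __name__ == "__main__":
    flags = ProviderFlags()
    handlers = {"password": lambda: "ok", "third-party": lambda: "ok"}
    flags.disable("third-party")
    print(login("third-party", flags, handlers))
    print(login("password", flags, handlers))
```

Because the disabled provider's handler is never invoked, no slow DNS lookup for it can reach the shared resolver pool.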

Next steps

Playing detective to discover the root cause of a DoS by DNS outage enabled us to create a more stable environment for facilitating better experiences on Uber’s apps, regardless of how users choose to access them. We hope that you find our test (and advice) for handling this type of outage useful for your own projects!

If you are using a runtime with M:N scheduling, we encourage you to apply our test to determine whether or not your runtime is affected and submit a pull request to our repository so others can benefit, too.

If troubleshooting web services at scale appeals to you, consider applying for a role on our team.

At the time of the outage, Motiejus Jakštys was a software engineer on Uber’s Marketplace team, the group that maintains Ringpop. Currently, he is a software engineer on Uber’s Foundations Platform team based in Vilnius, Lithuania.

Photo Header Credit, “Gentoo penguin defending chick from skua,” by Conor Myhrvold, Sea Lion Island, Falkland Islands, January 2007.

Motiejus Jakštys

Motiejus Jakštys is a Staff Engineer at Uber based in Vilnius. His professional interests are systems programming and cartography. Motiejus is passionate about writing software that consumes as little resources as necessary.
