Introduction

At Uber, scale and reliability define our infrastructure. Every new server type, kernel upgrade, and configuration change must be rigorously vetted before it touches production. Historically, this qualification process was manual and time-consuming, forcing engineers to stitch together ad hoc benchmarks with limited ability to measure efficiency or ROI. This created delays, added risk, and slowed the adoption of new technologies.

To close this gap, we built Ceilometer, an adaptive benchmarking framework that delivers fast, production-like signals on system performance. Ceilometer lets us qualify new cloud SKUs, validate infrastructure changes, and evaluate efficiency initiatives with confidence. It’s also central to testing next-generation SKUs and new machine learning frameworks before large-scale rollout. Beyond raw performance, Ceilometer supports reliability by testing system stability under prolonged usage and stress. From catching Redis® regressions to validating database applications and benchmarking stateless microservices, Ceilometer has become an essential part of Uber’s engineering life cycle. It helps keep our infrastructure performant, reliable, and cost-efficient as we scale.

Ceilometer: An Adaptive Benchmarking Platform

Ceilometer is our platform for performance and reliability testing, built to run synthetic benchmarks as well as simulate and benchmark production workloads across stateful and stateless services. It automates benchmarking, collects detailed performance metrics, and provides actionable insights on resource utilization, cost efficiency, and service reliability. By integrating with existing internal tools to automate the bring-up of benchmark clusters and deploy Uber services on target hosts, Ceilometer enables engineers to launch tests, visualize results, and quickly identify performance differences or reliability risks. This approach empowers teams to make faster, more informed decisions, optimize resource allocation, and reduce operational overhead—all while lowering the risk of production incidents.

Motivation

Benchmarking is critical to understanding and improving technology performance, yet it comes with its own set of challenges. Before Ceilometer, performance engineers faced several hurdles that made effective benchmarking difficult:

- Inconsistent tests produced incomparable results. Benchmark runs were often manual and managed by individual teams, which made results difficult to compare.

- Disparate testing systems wasted resources. Every technology had its own method for determining performance, resulting in a fragmented and inefficient approach.

- Benchmarking was complex, ad hoc, and hard to scale. Configuring hosts, setting up benchmarks, parsing results, and providing analysis required a full team.

- Benchmarking results lacked context. Results were often buried in spreadsheets, and each qualification required a custom solution, making it hard to glean meaningful insights.

- Limited portability hindered benchmarking. The test harness wasn’t portable, making it difficult to launch synthetic benchmarks in environments beyond production, such as development zones or cloud consoles.

- Many technologies had no benchmarking system. A significant number of services lacked any benchmarking tools, and therefore had no ability to assess how underlying changes would affect their performance.

Ceilometer solved these problems by providing:

- Standardized configurations. Ceilometer offers standard test configurations, ensuring reproducible results and allowing “apples-to-apples” comparisons between runs by different engineers.

- Consolidated benchmarking. It consolidates existing benchmarking implementations under one extensible platform, catering to diverse service and technology needs.

- Streamlined testing process. The Ceilometer team prioritized ease of use, unifying host identification, test orchestration, and results interpretation.

- Contextual reporting. Ceilometer’s reporting interface natively provides all important information, including server specifications, expected SLAs via a database of standard reference data, and normalization, making A/B testing seamless.

- Portable benchmarking ecosystem. A containerized test harness enables external vendors to execute Uber’s standardized synthetic benchmarks in their own environments, then return performance data as JSON files for integration into our analytics ecosystem. This expands our benchmarking reach beyond Uber’s infrastructure while keeping data consistent and comparable across external environments.

- Extensive coverage. Ceilometer provides benchmarks for all major server components (CPU, memory, and so on) as well as for all of the major technology stacks (stateful, stateless, batch, ML), ensuring broad testing coverage.
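
To make the idea of returning results as JSON concrete, here’s a minimal sketch of what a portable result record could look like. The `BenchmarkResult` type and every field name in it are hypothetical, chosen for illustration only; they’re not Ceilometer’s actual schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class BenchmarkResult:
    """Hypothetical result record; all field names are assumptions."""
    benchmark: str   # name of the synthetic benchmark that ran
    config_id: str   # identifies the standardized configuration used
    host_sku: str    # server type the test ran on
    metrics: dict = field(default_factory=dict)  # metric name -> value


REQUIRED_FIELDS = {"benchmark", "config_id", "host_sku", "metrics"}


def to_json(result: BenchmarkResult) -> str:
    """Serialize a result so it can be shipped back as a JSON file."""
    return json.dumps(asdict(result), sort_keys=True)


def from_json(payload: str) -> BenchmarkResult:
    """Parse a returned payload, rejecting records with missing fields."""
    data = json.loads(payload)
    missing = REQUIRED_FIELDS - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return BenchmarkResult(**{name: data[name] for name in REQUIRED_FIELDS})
```

Because every record carries its configuration identifier and server type, results produced outside Uber’s infrastructure could be checked and compared the same way as internal ones.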

Architecture: Under the Hood of Ceilometer

Ceilometer is engineered for robustness and scalability, designed to handle the rigorous demands of benchmarking at Uber’s scale. At its core, the framework is built on a distributed system architecture, allowing for parallel execution of diverse benchmarking tasks across various environments.

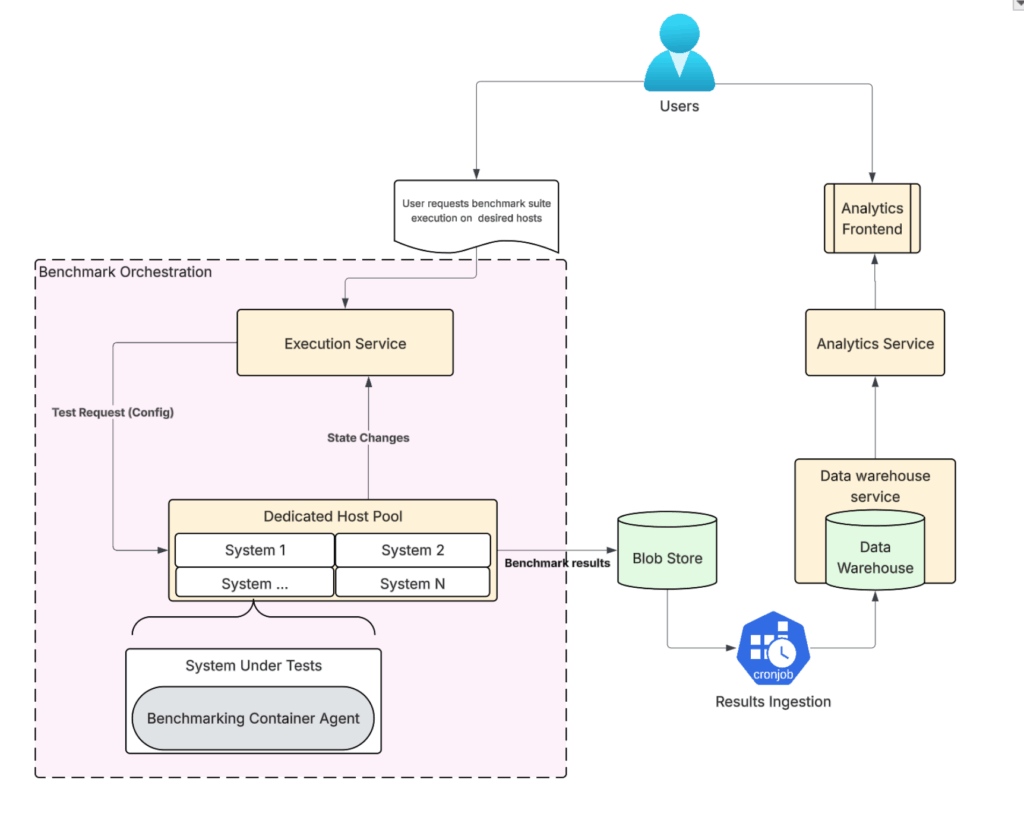

Key architectural components and data flow include:

- Benchmark orchestration. Ceilometer orchestrates tests across a fleet of dedicated benchmarking machines. This distributed approach ensures that tests can run concurrently and efficiently, mimicking real-world scenarios across our vast infrastructure.

- Result ingestion. Once a benchmark completes, raw results are captured and fed into our dedicated processing service. This service is responsible for validating, normalizing, and enriching the data, transforming it into a structured format ready for analysis.

- Blob storage. The processed results are then persistently stored in a highly available and scalable blob store. This ensures data integrity and provides a reliable foundation for historical analysis and auditing.

- Data warehouse. Performance data is ingested into Uber’s centralized Data Warehouse. This integration allows for cross-platform analysis, enabling engineers to correlate benchmarking results with other operational metrics and gain deeper insights.

- Analytics service. Performance data is then served via a dedicated analytics service. This service provides a programmatic interface for querying and visualizing benchmark results, empowering teams to quickly access and interpret critical performance insights.
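
The validate, normalize, and enrich steps of result ingestion can be sketched as a small pipeline. This is an illustration under stated assumptions, not the actual processing service: the metric names, the `REFERENCE` values, and the function names are all made up for the example.

```python
from typing import Dict

# Hypothetical reference scores for a baseline server type (made-up values).
REFERENCE: Dict[str, float] = {"cpu_score": 200.0, "mem_bw_gbps": 100.0}


def validate(raw: Dict[str, float]) -> Dict[str, float]:
    """Keep only known metrics and reject non-positive values."""
    clean = {}
    for name, value in raw.items():
        if name not in REFERENCE:
            continue  # unknown metrics are simply skipped in this sketch
        if value <= 0:
            raise ValueError(f"invalid value for {name}: {value}")
        clean[name] = float(value)
    return clean


def normalize(metrics: Dict[str, float]) -> Dict[str, float]:
    """Express each metric as a ratio to the reference (1.0 = parity)."""
    return {name: value / REFERENCE[name] for name, value in metrics.items()}


def enrich(normalized: Dict[str, float], host_sku: str) -> dict:
    """Attach context so the record is self-describing downstream."""
    return {"host_sku": host_sku, "normalized": normalized}


def process(raw: Dict[str, float], host_sku: str) -> dict:
    """Run the three stages in order: validate, normalize, enrich."""
    return enrich(normalize(validate(raw)), host_sku)
```

Normalizing against a shared reference is one way to make results from different server types directly comparable before they reach storage and analytics.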

Supported Testing Frameworks

Ceilometer’s flexibility extends to its ability to operate across a variety of testing frameworks, providing tailored solutions for different workload types and addressing the unique challenges each presents.

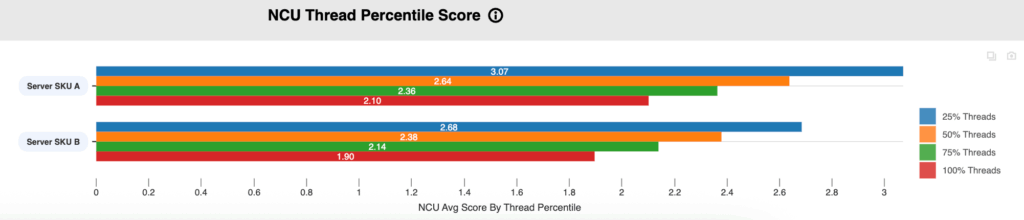

Ceilometer supports a wide array of synthetic benchmarks, which are designed to stress system components and measure their maximum performance across operation types in a reproducible manner. These benchmarks include industry-standard tools such as SPEC CPU 2017, SPECjbb 2015, Netperf, and fio, among others, customized to be representative of Uber’s workload requirements. They provide early signals of potential performance bottlenecks, allowing for proactive optimization and informed procurement decisions.

Ceilometer provides specialized and representative benchmarking for Uber’s stateful database technologies. These benchmarks are orchestrated through Odin (Uber’s Stateful Platform), a scalable platform designed for managing and optimizing stateful applications. Beyond raw performance measurement, they evaluate read/write performance under varying loads, transaction throughput as a measure of system capacity, and data consistency across replicated systems. The evaluations run under a wide range of configurable load conditions, allowing us to simulate real-world scenarios and peak demand events. This helps ensure the stability and efficiency of Uber’s data backbone.

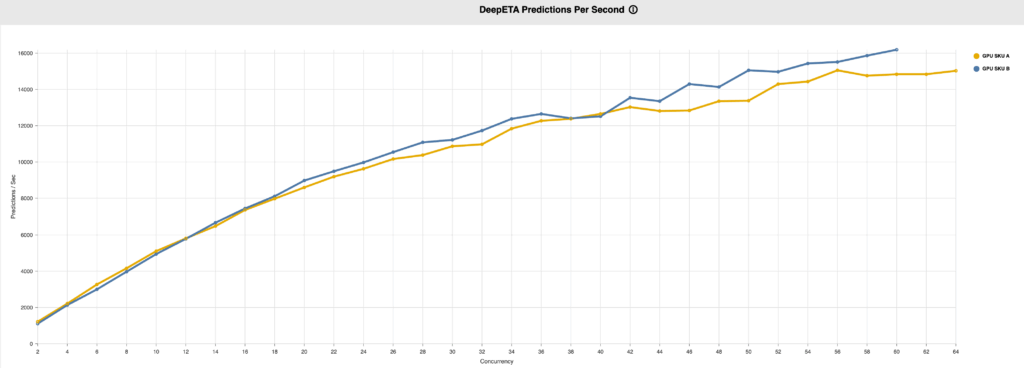

For stateless services, which form a significant part of Uber’s microservices architecture, Ceilometer provides testing environments to assess performance, latency, and scalability. It leverages Ballast’s adaptive load test framework to mimic production traffic and access patterns when benchmarking a service. This helps ensure that Uber’s services can handle fluctuating demand efficiently and reliably, which is crucial for supporting rapid development cycles and the smooth operation of our global platform.

Applications: Where Ceilometer Makes an Impact

Ceilometer drives critical decisions and upholds high performance and reliability standards at Uber. Here’s a deeper dive into two of its most common use cases.

Shape Qualification

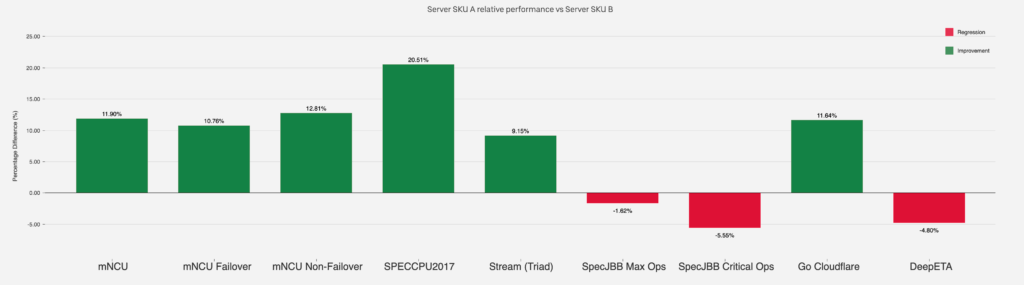

The process for qualifying new server types involves a multi-stage approach, especially when dealing with server types still under development by vendors.

Vendors execute Ceilometer’s synthetic test suite in their own environments. The results guide their design and optimization efforts and are also reported to Uber. Uber’s performance engineers then analyze the reported results to project performance for Uber workloads, informing early decisions about server selection and targeted optimization paths.

Once the server type becomes available for testing in Uber’s environment, a more thorough suite of Ceilometer’s synthetic and application benchmarks (tailored for Uber’s stateless or stateful use cases) is executed. These results are then used to guide the selection of the most cost-effective next-generation servers by projecting and comparing results against existing production servers. They’re also used to improve the accuracy of Ceilometer’s benchmarks by updating or fine-tuning configurations where necessary.
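
As a simplified illustration of comparing candidate servers against an existing production server on cost-effectiveness, the sketch below ranks candidates by benchmark score per unit cost, relative to a baseline. The single-score metric, the cost figures, and the function names are assumptions for the example; a real qualification weighs many more signals.

```python
from typing import Dict, List, Tuple

ScoreAndCost = Tuple[float, float]  # (benchmark score, cost per server)


def perf_per_cost(score: float, cost: float) -> float:
    """Benchmark score delivered per unit of cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return score / cost


def rank_candidates(
    baseline: ScoreAndCost, candidates: Dict[str, ScoreAndCost]
) -> List[Tuple[str, float]]:
    """Return (name, efficiency relative to baseline), best first.

    A relative efficiency above 1.0 means the candidate delivers more
    performance per unit cost than the baseline server.
    """
    base = perf_per_cost(*baseline)
    ranked = [
        (name, perf_per_cost(score, cost) / base)
        for name, (score, cost) in candidates.items()
    ]
    return sorted(ranked, key=lambda item: item[1], reverse=True)
```

For example, with a baseline of `(100.0, 10.0)`, a candidate scoring 150 at a cost of 12 has a relative efficiency of 1.25, while one scoring 120 at a cost of 15 comes in at 0.8.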

Change Validation

Evaluating an infrastructure change to existing hardware is driven by running targeted Ceilometer benchmark suites on hardware with the new configuration. This typically involves collaborating with service owners and identifying and resolving issues.

Effective partnerships with Uber service owners are essential, as each service is unique. Working with them ensures that the new configuration’s results accurately reflect the service’s performance and are approved by the respective owners.

In cases where performance degradations or unexpected behaviors are observed, Uber’s performance engineers leverage Ceilometer as a powerful diagnostic tool. They work collaboratively with service owners to:

- Isolate: Pinpoint the specific component or change that introduced the regression

- Reproduce: Consistently recreate the issue in a controlled environment to understand its characteristics

- Mitigate: Implement and test solutions to resolve performance issues

This capability has been crucial for debugging and preventing regressions in Uber’s production systems.
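
One simple way to flag a performance degradation between a baseline and a changed configuration is to compare the medians of repeated runs against a tolerance. The sketch below assumes that approach; the median comparison, the 5% default tolerance, and the function names are illustrative choices, not a description of how Ceilometer detects regressions.

```python
from statistics import median
from typing import Sequence


def relative_change(baseline: Sequence[float], candidate: Sequence[float]) -> float:
    """Fractional change of the candidate median versus the baseline median."""
    base = median(baseline)
    if base == 0:
        raise ValueError("baseline median must be non-zero")
    return (median(candidate) - base) / base


def is_regression(
    baseline: Sequence[float],
    candidate: Sequence[float],
    tolerance: float = 0.05,        # assumed threshold: 5% worse than baseline
    higher_is_better: bool = True,  # True for throughput, False for latency
) -> bool:
    """Return True if the candidate is worse than baseline beyond tolerance."""
    change = relative_change(baseline, candidate)
    if higher_is_better:
        return change < -tolerance
    return change > tolerance
```

Using the median of several runs rather than a single measurement makes the check less sensitive to one-off outliers, which helps when reproducing an issue in a controlled environment.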

Ceilometer has recently expanded its use case to validating external “touchless” upgrades from cloud vendors in Uber’s canary environments, ensuring high reliability of Uber’s infrastructure.

Future Work

As Uber’s infrastructure continues its rapid evolution, so too does Ceilometer. Our commitment to ensuring optimal system performance and reliability drives continuous innovation within the framework. We have several exciting areas of focus for future development:

- Enhanced AI/ML integration. We’re exploring deeper integration of AI and machine learning techniques to further automate benchmark analysis, predict potential performance regressions, and identify root causes of issues. This will enable Ceilometer to provide more proactive insights, including predicting the right resource sizing for existing workloads to reduce the cost of unused resources.

- Broader ecosystem support. Our goal is to expand Ceilometer’s reach to support an even wider array of technologies and testing environments across Uber. This includes adapting to emerging infrastructure paradigms and ensuring comprehensive coverage for all critical stateful and stateless technologies within the Uber ecosystem.

- Advanced anomaly detection. Building upon our current capabilities, we aim to implement more sophisticated anomaly detection algorithms to quickly surface unexpected performance deviations, even in highly dynamic environments. This will allow for faster identification and resolution of subtle issues.

- Integration of utilization metrics. Ceilometer plans to expand the scope of metrics to include component-based utilization analytics, allowing for deeper insights into how the underlying system is performing.

- Canary continuous validation testing. Our goal is to use this benchmarking framework to schedule automated tests that alert proactively when a performance threshold is crossed.

Conclusion

Designing an extensible and evolving benchmarking platform requires careful consideration of several key factors. First, a flexible and adaptable architecture is crucial to seamlessly integrate with new technologies and testing environments as infrastructure evolves. This modularity prevents significant rework and ensures the platform remains relevant. Second, robust data processing and analytics capabilities are essential to handle the sheer volume of benchmark data generated at scale. This includes efficient pipelines for ingestion, normalization, and analysis, along with accessible tools for engineers to derive meaningful insights. Finally, integrating AI/ML techniques wherever possible to automate analysis, predict performance, and identify performance anomalies ensures that the platform remains at the forefront of performance and reliability engineering while being scalable to evolving needs.

Ceilometer has successfully met Uber’s adaptive benchmarking needs, showcasing its capacity to adapt to these critical considerations. We’re excited for the future of Ceilometer as it continues to evolve and serve the cutting-edge benchmarking requirements at Uber.

Cover Photo Attribution: “New Mustang Gauges” by aresauburn™ is licensed under CC BY-SA 2.0.

NVIDIA®, the NVIDIA logo, DGX, and DGX B200 are trademarks and/or registered trademarks of NVIDIA Corporation in the U.S. and other countries.

Redis is a registered trademark of Redis Ltd. Any rights therein are reserved to Redis Ltd. Any use by Uber is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and Uber.


Nav Kankani

Nav Kankani is a Senior Engineering Manager and Platform Architect on the Uber Infrastructure team. He has been working in the areas of AI/ML, hyperscale cloud platforms, storage systems, and the semiconductor industry for the past 23 years. He is also named inventor on over 21 US patents.

Nate Cloud-Rouzan

Nate is a Senior Cloud Engineer on the Uber Infrastructure team. He has also been a key contributor to the Cloud Platform Qualification team, consistently bringing together deep expertise in infrastructure mechanics, software development, and performance engineering to tackle complex challenges. A natural problem solver, Nate brings a strong #gogetit mindset and is always ready with ideas and solutions.

Derrick Tseng

Derrick is a former Cloud Engineer on the Uber Infrastructure team. He has 20 years of experience in qualification, benchmarking, and automation, with a focus on server and storage hardware.

Rajat Sharma

Rajat is a Senior Software Engineer on the Fleet Engineering team at Uber, where he’s currently involved in the development of the Ceilometer platform. He’s a full-stack engineer specializing in distributed systems.

Posted by Nav Kankani, Nate Cloud-Rouzan, Derrick Tseng, Rajat Sharma