Introduction

Uber processes vast amounts of data daily, across multiple verticals, using technologies like Apache Hadoop™, Apache Hive™, and Apache Spark™. Each data team at Uber must operate within resource constraints while managing ever-growing data volumes. Our team, CDS (Compliance Data Store), serves as Uber’s central repository for regulatory reporting. We share data with regulators in accordance with applicable laws and requirements. Managing this extensive data poses significant challenges because we rely on HDFS™ (Hadoop Distributed File System), which imposes storage quotas: an NSQ (Namespace Quota) that limits the number of files and directories, and a space quota that caps overall storage capacity.

In 2021, our team managed 65 regulatory reports, consuming terabytes of storage. By Q2 2024, this number had surged to over 500 reports, mostly covering trip-related data within a given jurisdiction, which significantly increased resource consumption. Although existing solutions could archive and retrieve data, they often risked data mutation, especially during backfills, which isn’t ideal for regulatory and audit purposes. Additionally, the existing solutions couldn’t retrieve smaller partitions or ranges of partitions, which complicated efficient data access.

To address this, we implemented an archival and retrieval mechanism to efficiently store, retrieve, and manage sensitive regulatory data. The archival mechanism ensures compliance by retaining past submitted data, while the retrieval mechanism allows this data to be accessed whenever needed. This solution enables Uber, particularly the Compliance team, to optimize storage usage, prevent resource overuse, and maintain secure, well-organized data workflows.

To support our use cases, we needed data to move seamlessly from hot storage (HDFS in our case) to cold storage (cloud/object storage) while adhering to DLM (Data Lifecycle Management) policies such as TTL (time-to-live) and delete-on-archival. We refer to this process as archival. Once data has moved from one storage to the other, we also needed a mechanism to bring archived data back to hot storage. We call this process retrieval. The whole process needed to be automated, yet flexible enough to let users adjust the configuration at the dataset level.

By sharing how we optimized our team’s HDFS storage usage with archival workflows, we hope to give engineers insight into scalable solutions for data growth, resource limitations, and regulatory compliance. We aim to provide practical strategies that can be applied in similar high-volume data environments, offering lessons on balancing data accessibility with efficient storage use.

Challenges

Here are some of the challenges we overcame when implementing our archival and retrieval mechanism.

First, we faced schema evolution and compatibility issues. As schemas evolve due to regulatory updates, retrieving archived data can lead to conflicts if older data doesn’t align with the latest schema. We use self-describing formats like Parquet, along with schema mapping, to bring back data without conflicts.
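The post doesn’t spell out how the schema mapping works. As a minimal sketch, assuming archived rows have already been decoded into Python dicts (rather than read directly from Parquet), an older record can be projected onto the latest schema like this; `map_to_schema` and the `renames` mapping are hypothetical names used only for illustration.

```python
from __future__ import annotations

from typing import Any


def map_to_schema(
    record: dict[str, Any],
    target_columns: list[str],
    renames: dict[str, str] | None = None,
) -> dict[str, Any]:
    """Project one archived record onto the latest schema (illustrative sketch).

    - Columns renamed since archival are translated via `renames` (old -> new).
    - Columns added since archival are filled with None.
    - Columns dropped since archival are discarded.
    """
    renames = renames or {}
    translated = {renames.get(name, name): value for name, value in record.items()}
    return {column: translated.get(column) for column in target_columns}


# An archived row written before `city` was renamed and `fare` was added.
old_row = {"trip_id": "t1", "city": "SF"}
print(map_to_schema(old_row, ["trip_id", "city_name", "fare"], {"city": "city_name"}))
# {'trip_id': 't1', 'city_name': 'SF', 'fare': None}
```

Filling new columns with nulls keeps older partitions queryable alongside newer ones without rewriting the archived files themselves.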

We also had to navigate data ingestion during active backfills. Running backfill processes while archiving can cause inconsistencies, such as missing or duplicate records. To tackle this, users can write the data into separate, independent tables that don’t interfere with the backfill process.

Our system needed to retrieve data efficiently without overloading hot storage. Restoring large archived datasets can cause resource spikes, slowing down analytics. Our retrieval mechanism uses lazy loading and partition pruning to ensure that only the necessary partitions are restored, optimizing resource usage while keeping archived data accessible when needed.
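Partition pruning can be illustrated with a generator that filters archived partition names against the requested date window before anything is restored. This sketch assumes Hive-style `datestr=YYYY-MM-DD` partition names; `prune_partitions` is a hypothetical helper, and a real workflow would restore each yielded partition from cold storage instead of printing it.

```python
from __future__ import annotations

from datetime import date
from typing import Iterable, Iterator


def prune_partitions(archived: Iterable[str], start: date, end: date) -> Iterator[str]:
    """Lazily yield only the archived partitions whose date lies in [start, end].

    Assumes Hive-style partition names such as "datestr=2024-01-31".
    Because this is a generator, nothing is evaluated until the caller
    iterates, so a restore loop can process one partition at a time.
    """
    for partition in archived:
        key, _, value = partition.partition("=")
        if key != "datestr":
            continue  # ignore entries that do not follow the assumed layout
        if start <= date.fromisoformat(value) <= end:
            yield partition


archived = ["datestr=2024-01-01", "datestr=2024-02-15", "datestr=2024-03-01"]
for p in prune_partitions(archived, date(2024, 2, 1), date(2024, 2, 29)):
    print("restore", p)  # only datestr=2024-02-15 is restored
```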

Another requirement was to handle incremental data archival without duplication. Incremental archival risks re-archiving previously archived records, leading to inefficiencies. We use an append-only storage model that prevents redundant archival, ensuring optimal storage utilization. Users can also overwrite previously archived data through configuration.

Finally, we had to ensure security and access control for archived data. Cold storage environments have different security controls than hot storage, which increases the need for strict access management. Existing security measures, including column-level and row-level access controls, ensure that only authorized users can access data on HDFS. Likewise, the cold storage environment is protected with LDAP-level access, restricting archived file access to permitted users only.
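A minimal sketch of the append-only rule for incremental archival, assuming the set of partitions already present in cold storage can be listed cheaply: the hypothetical `partitions_to_archive` skips anything already archived unless an `overwrite` flag, standing in for the configuration switch described above, is set.

```python
from __future__ import annotations


def partitions_to_archive(
    hot_partitions: list[str],
    already_archived: set[str],
    overwrite: bool = False,
) -> list[str]:
    """Pick the partitions an incremental archival run should upload.

    Append-only by default: a partition that already exists in cold storage is
    skipped, so re-running the job never duplicates data. `overwrite` models a
    hypothetical per-dataset configuration switch that deliberately re-archives.
    """
    if overwrite:
        return list(hot_partitions)
    return [p for p in hot_partitions if p not in already_archived]


hot = ["datestr=2024-01-01", "datestr=2024-01-02", "datestr=2024-01-03"]
print(partitions_to_archive(hot, {"datestr=2024-01-01"}))
# ['datestr=2024-01-02', 'datestr=2024-01-03']
```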

Architecture

The architecture of the archival and retrieval framework is designed to be simple yet highly effective. Currently, CDS manages over 80 datasets, amounting to terabytes of storage, all integrated into this framework. The system is configuration-driven, giving users full control over processes at the dataset level. The workflows, utilities, and tasks are all implemented in Python, and cold storage is provided by Terrablob (an abstraction over Amazon S3®). For workflows, we rely on Piper, a data orchestration and workflow platform built on top of Apache Airflow™.

Here’s an overview of the archival and retrieval architecture.

Archival

A configuration input is provided for the automation pipelines within the archival system. This configuration is also compatible with the retrieval framework, reducing code duplication.

We define two types of configurations:

- Database-level configuration: Captures essential database details such as owner groups, connection IDs, and more.

- Dataset/table-level configuration: Allows users to specify options like the dataset’s TTL, whether the framework should archive partitions without dropping them (beneficial for audit purposes), and other customizable parameters.
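To make the two configuration levels concrete, here is a sketch of how they might be modeled and validated in Python. Every field name (`owner_group`, `connection_id`, `ttl_days`, `drop_after_archive`, `schedule`) is an assumption for illustration, as is the idea that the YAML file has already been parsed into a plain dict.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class DatabaseConfig:
    """Database-level settings shared by every dataset in the database."""
    name: str
    owner_group: str
    connection_id: str


@dataclass(frozen=True)
class DatasetConfig:
    """Dataset/table-level settings; the field names are illustrative."""
    database: DatabaseConfig
    table: str
    ttl_days: int                    # partitions older than this become eligible
    drop_after_archive: bool = True  # False archives but keeps the hot copy (audits)
    schedule: str = "daily"          # which scheduler workflow picks the dataset up

    def __post_init__(self) -> None:
        if self.ttl_days <= 0:
            raise ValueError("ttl_days must be positive")
        if self.schedule not in {"daily", "weekly"}:
            raise ValueError(f"unsupported schedule: {self.schedule!r}")


def load_dataset_config(raw: dict[str, Any], databases: dict[str, DatabaseConfig]) -> DatasetConfig:
    """Build a validated DatasetConfig from an already-parsed YAML mapping."""
    return DatasetConfig(
        database=databases[raw["database"]],
        table=raw["table"],
        ttl_days=int(raw["ttl_days"]),
        drop_after_archive=bool(raw.get("drop_after_archive", True)),
        schedule=raw.get("schedule", "daily"),
    )


cds = DatabaseConfig(name="cds", owner_group="compliance-eng", connection_id="hdfs_cds")
trips = load_dataset_config(
    {"database": "cds", "table": "trips_report", "ttl_days": 90, "drop_after_archive": False},
    {"cds": cds},
)
```

Validating at load time means a typo in a dataset’s configuration fails fast, before any scheduler run touches the data.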

The core of archival automation lies in its workflows, which are essential for scheduling and executing specific tasks. These workflows support custom tasks that handle user input ingestion, data integration across various services, and the transition of data from hot storage to cold storage.

We currently use two main workflows:

- Scheduler: The workflows operate on either daily or weekly schedules, with their primary role being to log job entries into a metadata table. After processing the input, workflows categorize the datasets to be archived, arranging them in a FIFO (First-In, First-Out) sequence. Given that CDS reports follow various cadences—daily, monthly, weekly, bi-weekly, and quarterly—users have the flexibility to select the most appropriate scheduling workflow based on the frequency of data generation. This adaptability ensures efficient archival tailored to the unique cadence of each dataset.

- Archival: This workflow is specifically designed to handle the actual migration and archival process from hot storage to cold storage. It interacts with the metadata table to retrieve active entries created by the scheduling workflows. Once these entries are fetched, the workflow proceeds with archiving the corresponding data, ensuring an efficient and organized transfer to cold storage.
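The split between the two workflows can be sketched with an in-memory queue standing in for the MySQL metadata table: the scheduler enqueues partitions that have outlived their TTL, oldest first, and the archival worker drains the queue, uploading each partition before optionally dropping it from hot storage. `schedule`, `run_archival`, and the `upload`/`drop` callbacks are hypothetical; in the real system these steps would be Piper tasks talking to HDFS and Terrablob.

```python
from __future__ import annotations

from collections import deque
from datetime import date, timedelta
from typing import Callable, Deque, Dict, List, Tuple

Entry = Tuple[str, date]  # (dataset, partition date)


def schedule(partitions: Dict[str, List[date]], ttl_days: Dict[str, int], today: date) -> Deque[Entry]:
    """Scheduler: queue every partition older than its dataset's TTL, oldest first."""
    due = [
        (dataset, day)
        for dataset, days in partitions.items()
        for day in days
        if day < today - timedelta(days=ttl_days[dataset])
    ]
    due.sort(key=lambda entry: entry[1])  # FIFO order: oldest data is archived first
    return deque(due)


def run_archival(
    queue: Deque[Entry],
    upload: Callable[[str, date], None],
    drop: Callable[[str, date], None],
    keep_hot: set[str],
) -> List[Entry]:
    """Archival: drain the queue front to back, uploading then optionally dropping."""
    done: List[Entry] = []
    while queue:
        dataset, day = queue.popleft()
        upload(dataset, day)          # copy to cold storage first...
        if dataset not in keep_hot:
            drop(dataset, day)        # ...and only then free hot storage
        done.append((dataset, day))
    return done


queue = schedule(
    {"trips": [date(2024, 1, 10), date(2024, 1, 1)], "payments": [date(2024, 1, 5)]},
    {"trips": 30, "payments": 30},
    today=date(2024, 3, 1),
)
uploaded, dropped = [], []
run_archival(
    queue,
    lambda d, p: uploaded.append((d, p)),
    lambda d, p: dropped.append((d, p)),
    keep_hot={"payments"},  # payments is archived but keeps its hot copy
)
```

Uploading before dropping means a failure mid-run leaves at worst a duplicate copy, never a missing partition.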

The metadata table, stored in MySQL®, holds essential information such as job statuses, dataset partitions for archiving, job IDs, and more. Initially populated by the scheduler workflows, this table provides the archival workflow with all necessary inputs, eliminating the need for additional processing to determine which datasets require archiving. By storing pre-processed data, the metadata table streamlines the archival process, enabling a smoother and more efficient workflow. Figure 4 shows a few necessary columns related to metadata.
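Here is a sketch of what such a metadata table and its access pattern could look like. It uses Python’s built-in `sqlite3` module as a stand-in for MySQL so that it runs anywhere, and the table and column names (`archival_jobs`, `partition_spec`, `status`) are assumptions, not a reproduction of Figure 4.

```python
import sqlite3

# Hypothetical subset of columns; sqlite3 stands in for MySQL.
SCHEMA = """
CREATE TABLE IF NOT EXISTS archival_jobs (
    job_id         INTEGER PRIMARY KEY AUTOINCREMENT,
    dataset        TEXT NOT NULL,
    partition_spec TEXT NOT NULL,
    status         TEXT NOT NULL DEFAULT 'PENDING',
    created_at     TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP,
    UNIQUE (dataset, partition_spec)
)
"""


def enqueue(conn: sqlite3.Connection, dataset: str, partition_spec: str) -> None:
    """Scheduler side: log a job; the UNIQUE key makes re-scheduling a no-op."""
    conn.execute(
        "INSERT OR IGNORE INTO archival_jobs (dataset, partition_spec) VALUES (?, ?)",
        (dataset, partition_spec),
    )
    conn.commit()


def fetch_pending(conn: sqlite3.Connection) -> list:
    """Archival side: read active entries in the order they were logged (FIFO)."""
    return conn.execute(
        "SELECT job_id, dataset, partition_spec FROM archival_jobs "
        "WHERE status = 'PENDING' ORDER BY job_id"
    ).fetchall()


def mark(conn: sqlite3.Connection, job_id: int, status: str) -> None:
    """Record the outcome of a job, e.g. 'ARCHIVED' or 'FAILED'."""
    conn.execute("UPDATE archival_jobs SET status = ? WHERE job_id = ?", (status, job_id))
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
enqueue(conn, "trips_report", "datestr=2024-01-01")
enqueue(conn, "trips_report", "datestr=2024-01-01")  # duplicate, silently ignored
enqueue(conn, "trips_report", "datestr=2024-01-02")
print(fetch_pending(conn))
```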

Retrieval

We designed the YAML configuration so that both the archival and retrieval mechanisms can use it. However, unlike the archival workflow, which runs on scheduled cadences, the retrieval workflow is triggered only when data needs to be brought back from cold storage to hot storage. This is achieved with a trigger-based approach through Piper. The retrieval workflow can handle multiple requests simultaneously and can bring back a range of partitions at once, even when those partitions are not contiguous. The major tasks of this workflow include:

- Retrieval: This task brings the partitions requested by users from cold storage to hot storage. It uses batching coupled with multi-part downloads for faster data retrieval.

- Table creation: Once the data files are in place and confidence checks have been completed, this task extracts the schema of the files. In case of schema conflicts, it keeps track of files with similar schemas and bundles them together to form different datasets.
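The two retrieval tasks can be sketched as plain functions: expanding a request made of non-contiguous date ranges into individual partitions, splitting them into batches for download, and bundling retrieved files by schema so that each group can back its own table. The helper names and the `(column, type)` tuple representation of a schema are hypothetical. In practice the schema could be read from each Parquet file’s footer, for example with `pyarrow.parquet.read_schema`, and each batch handed to a multi-part downloader.

```python
from __future__ import annotations

from datetime import date, timedelta
from itertools import islice
from typing import Dict, Iterable, Iterator, List, Tuple

Schema = Tuple[Tuple[str, str], ...]  # ((column, type), ...) as read from a file footer


def expand_ranges(ranges: Iterable[Tuple[date, date]]) -> List[date]:
    """Turn possibly overlapping, non-contiguous [start, end] ranges into sorted unique days."""
    days = set()
    for start, end in ranges:
        current = start
        while current <= end:
            days.add(current)
            current += timedelta(days=1)
    return sorted(days)


def batches(items: List[date], size: int) -> Iterator[List[date]]:
    """Yield fixed-size batches so each download step has a bounded footprint."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


def group_by_schema(file_schemas: Dict[str, Schema]) -> Dict[Schema, List[str]]:
    """Bundle files with identical schemas; each bundle would back one table."""
    groups: Dict[Schema, List[str]] = {}
    for path, schema in sorted(file_schemas.items()):
        groups.setdefault(schema, []).append(path)
    return groups


days = expand_ranges([(date(2024, 1, 1), date(2024, 1, 3)), (date(2024, 1, 10), date(2024, 1, 10))])
for batch in batches(days, 2):
    print([d.isoformat() for d in batch])
```

Grouping by exact schema is the simplest conflict strategy: no file is ever coerced into a schema it wasn’t written with, at the cost of possibly producing more than one table per request.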

For auditability and transparency, we log the requests and responses in a separate, team-specific MySQL table.
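One lightweight way to capture every request and response is a decorator around the retrieval entry point. In this sketch the hypothetical `audited` decorator hands each log entry to a `sink` callable; in the real system the sink would insert a row into the team’s MySQL audit table, which is not modeled here.

```python
from __future__ import annotations

import functools
import json
from datetime import datetime, timezone
from typing import Any, Callable, Dict


def audited(sink: Callable[[Dict[str, Any]], None]):
    """Decorator that records every request and its response (or error) via `sink`.

    Requests are passed as keyword arguments so they serialize cleanly to JSON.
    """
    def decorator(func):
        @functools.wraps(func)
        def wrapper(**request: Any) -> Any:
            entry: Dict[str, Any] = {
                "function": func.__name__,
                "request": json.dumps(request, sort_keys=True, default=str),
                "requested_at": datetime.now(timezone.utc).isoformat(),
            }
            try:
                response = func(**request)
            except Exception as exc:
                entry.update(status="FAILED", response=repr(exc))
                sink(entry)
                raise  # log the failure but still surface it to the caller
            entry.update(status="SUCCEEDED", response=json.dumps(response, default=str))
            sink(entry)
            return response
        return wrapper
    return decorator


audit_log = []


@audited(audit_log.append)
def retrieve(dataset: str, partitions: list) -> dict:
    return {"restored": len(partitions)}  # stand-in for the real retrieval


retrieve(dataset="trips_report", partitions=["datestr=2024-01-01"])
```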

We leverage widely adopted technologies to ensure the system’s stability, efficiency, and scalability. MySQL serves as the metadata store for managing archival job details, enabling quick lookups and seamless coordination. HDFS acts as the hot storage, housing all compliance data that requires high-throughput access, while Terrablob, an abstraction of S3, serves as the cold storage, optimizing costs and ensuring long-term durability of archived data.

For orchestration, Piper, an abstraction of Apache Airflow, manages scheduled and trigger-based workflows, ensuring reliable and automated execution of the core archival and retrieval processes. Together, these technologies form a cohesive ecosystem that enables efficient data management, cost optimization, and automation. This architecture ensures that data remains readily accessible when needed, while balancing performance and storage costs to handle the ever-growing scale of compliance data seamlessly.

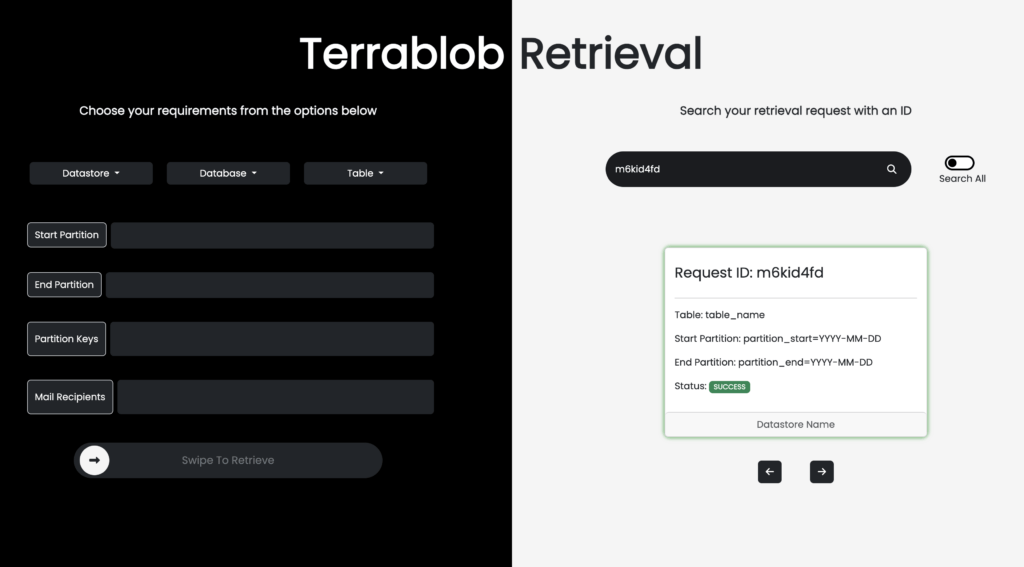

UI/Design

The user interface is designed to be clean and intuitive, eliminating the need for manual input. All fields are dropdown menus that dynamically fetch the required data from the configuration whenever the datastore or table name is updated. This approach minimizes manual errors, simplifies data validation, and ensures a smoother, more reliable user experience. We employ dynamic authentication using LDAP verification, ensuring that only authorized users can access and use the interface.
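The dependent dropdowns can be sketched as a single function that recomputes every option list from the configuration whenever the datastore or table selection changes. The `datastore -> table -> partitions` shape of the config, the helper names, and the group-overlap check standing in for LDAP verification are all assumptions for illustration.

```python
from __future__ import annotations

from typing import Dict, List, Optional, Set

# Hypothetical config shape: datastore -> table -> archived partitions.
Config = Dict[str, Dict[str, List[str]]]


def dropdown_options(
    config: Config,
    datastore: Optional[str] = None,
    table: Optional[str] = None,
) -> Dict[str, List[str]]:
    """Recompute every dropdown whenever the datastore or table selection changes."""
    tables = config.get(datastore, {}) if datastore else {}
    return {
        "datastores": sorted(config),
        "tables": sorted(tables),
        "partitions": list(tables.get(table, [])) if table else [],
    }


def is_authorized(user_groups: Set[str], owner_groups: Set[str]) -> bool:
    """Stand-in for the LDAP check: allow users who share a group with the owners."""
    return bool(user_groups & owner_groups)


config = {
    "cds": {
        "trips_report": ["datestr=2024-01-01", "datestr=2024-01-02"],
        "payments_report": ["datestr=2024-01-01"],
    }
}
print(dropdown_options(config, datastore="cds"))
```

Because every option comes from the configuration, a user can only ever submit a datastore, table, and partition combination that actually exists.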

Previously, there was no user interface for this process. Inputs were manually typed and passed as Piper variables, a method used for data communication and sharing within workflows. This is the first time a UI has been incorporated into the retrieval workflow, streamlining the process and enhancing user interaction.

The user interface removes the tedious task of manual data and field entry. With its introduction, manual errors are virtually eliminated, as the UI handles inputs more accurately. Real-time updates allow users to track their requests without manually monitoring the workflow. Lastly, because the application is self-serve, non-technical users who are unfamiliar with configurations can create requests without reaching out to the Engineering team. This frees up engineering bandwidth for higher-priority tasks.

Use Cases at Uber

An archival and retrieval framework like this one has a wide range of use cases and offers numerous advantages in managing data efficiently. A few major use cases are:

- Compliance and regulatory reporting: For teams required to store data for compliance, the framework allows efficient long-term storage of regulatory data. It archives older data to cold storage while keeping it accessible when required, which is essential for audits and regulatory checks.

- Cost-effective storage management: By moving infrequently accessed data to cheaper cold storage, we save on high-cost hot storage resources, optimizing overall storage expenses.

- Data lifecycle management: We often generate large amounts of transient data that’s important for a limited time. This framework allows temporary data to be moved to cold storage once it’s no longer actively used, freeing up resources without permanent deletion.

- Disaster recovery and backup: The archival framework can store backups of critical datasets, providing a safe, organized means to retrieve data for disaster recovery or business continuity in case of primary storage failure.

- Data security: Existing security measures, such as column-level and row-level access controls, ensure that only authorized users can access data stored on HDFS. Similarly, the cold storage environment is secured with LDAP-level access, ensuring that only permitted users can access archived files.

When we developed this framework, the focus was on creating a touchless experience. The archival framework follows a set-and-forget approach, requiring minimal manual intervention. To date, it has successfully archived terabytes of data and continues to perform reliably.

In contrast, the retrieval mechanism, equipped with a user-friendly interface, removes the need for manual configuration during data retrieval. With just a few clicks, users can register a retrieval request, and the system provides immediate status updates. Previously, manual intervention in the retrieval process could take days. Now, with this streamlined solution, retrieval time has been reduced by nearly 90%.

Next Steps

Upcoming enhancements to the archival framework aim to broaden support for additional cold storage options. Currently, we rely primarily on Terrablob, but exploring more cost-effective alternatives is on the horizon. Another planned feature is custom range selection, allowing users to specify a range of partitions for prioritized archival, potentially extending to priority-based archiving.

For the retrieval mechanism, we plan to improve schema conflict handling to make the process more resilient. We are also considering expanding retrieval to multiple storage sources, as long as they follow the same partitioning strategy. Additionally, letting users write retrieved data into a dataset of their choice will give them more control over their data after retrieval. Finally, restricting retrieval to data that the requesting user is authorized to access would be a valuable addition.

Conclusion

In this blog post, we explored the Compliance Data Store team’s journey in building a robust archival and retrieval framework to tackle the ever-growing challenges of data storage, compliance, and accessibility. From a set-and-forget archival strategy to an intuitive, on-demand retrieval interface, we discussed how this framework empowers teams to manage large volumes of data effectively while reducing costs and manual effort.

The framework’s configuration-driven approach allows for seamless automation, minimizing human error and improving data validation. Upcoming enhancements—such as support for additional cold storage options, priority-based archiving, and advanced schema conflict handling—promise even greater flexibility, scalability, and efficiency.

By streamlining complex workflows and making data management accessible even to non-technical users, this solution exemplifies Uber’s commitment to innovative and sustainable data practices. We look forward to evolving this framework further to meet the dynamic needs of Uber’s global operations.

Cover Photo Attribution: “The line up – Storage tracks.” – By Loco Steve (Chosen from Openverse)

Amazon Web Services®, AWS®, Amazon S3®, and the Powered by AWS logo are trademarks of Amazon.com, Inc. or its affiliates.

Apache®, Apache Hadoop™, Apache Hive™, Apache Spark™, HDFS™, and Apache Airflow™ are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries. No endorsement by The Apache Software Foundation is implied by the use of these marks.

MySQL is a registered trademark of Oracle® and/or its affiliates.


Abhishek Dobliyal

Abhishek Dobliyal is a Software Engineer at Uber working with the Compliance Data Store team looking after the automation and generation of regulatory data.

Aakash Bhardwaj

Aakash Bhardwaj is a Senior Software Engineer with 8+ years of industry experience, spanning Data Platforms, Big Data Solutions, and Feature Engineering. Aakash has been working at Uber for the last 5 years developing Bedrock systems like Compliance Data Store, Self-Serve Reporting via IRIS, and supporting the Uber Safety Marketplace.

Posted by Abhishek Dobliyal, Aakash Bhardwaj