Introduction

Uber has been on a multi-year journey to reimagine our infrastructure stack for a hybrid, multi-cloud world. The internal code name for this project is Crane. In this post we’ll examine the original motivation behind Crane, requirements we needed to satisfy, and some key features of our implementation. Finally, we’ll wrap up with some forward-looking views for Uber’s infrastructure.

In the Beginning…

In 2018 Uber was facing 3 major challenges with respect to our infrastructure:

- The size of our server fleet was growing rapidly, and our tooling and teams weren’t able to keep up. Many operations for managing servers were still manual. The automated tooling we did have was constantly breaking down. Both the manual operations and automated tooling were frequent outage culprits. In addition, operational load was taking a severe toll on teams, which meant less time for them to work on fundamental software fixes, leading to a vicious cycle.

- Fleet size growth came with the need to expand into more data centers/availability zones. What little tooling existed for turning up new zones was ad hoc, with the vast majority of the work being manual and diffused across many different infrastructure teams. Turning up a new zone took multiple months across dozens of teams and hundreds of engineers. In addition, circular dependencies between infrastructure components often led to awkward bootstrapping problems that were difficult to solve.

- Our server fleet consisted mostly of on-prem machines, with limited ability to take advantage of additional capacity that was available in the cloud. We had a single, fledgling cloud zone, but because operations were manual we were not really taking full advantage of the cloud.

We decided to take a step back and reimagine what our ideal infrastructure stack would look like:

- Our stack should work equally well across cloud and on-prem environments.

- Our cloud infrastructure should work regardless of cloud provider, allowing us to leverage cloud server capacity across multiple providers.

- Migration of capacity between cloud providers should be trivial and managed centrally.

- Our tooling should evolve to support a fleet of 100,000+ servers, including:

  - Homogeneity of hosts at the base OS layer, allowing us to centrally manage the operating system running on every host. All workloads should be containerized with no direct dependencies on the host operating system.

  - Servers should be treated like cattle, not pets. We should be able to handle server failures gracefully and completely automatically.

  - The detection and remediation of bad hardware should be completely automated. A single server failing should not lead to an outage or even an alert.

  - A high-quality host catalog should give us highly accurate information about every host in our fleet¹.

  - Any changes to the infrastructure stack should be aware of various failure domains (server/rack/zone), and be rolled out safely.

- Zone turn-up should be automated. Our current stack required dozens of engineers more than 6 months to turn up a new zone. We wanted one engineer to be able to turn up a zone in roughly a week.

- Circular dependencies among infrastructure components led to their own set of operational and turn-up challenges. These needed to be eliminated.

With these requirements in hand, we set out on a multi-year journey to modernize and dramatically scale up Uber’s infrastructure stack. In the upcoming sections, we’ll talk about some of the key features of this new stack, as well as lessons we’ve learned along the way.

Zone Turn-Up

We decided to start our journey by solving the zone turn-up problem first. Anything that would be needed in steady-state zone operation would need to be bootstrapped at zone turn-up time, so by solving this problem first we could be reasonably sure that we also solved any issues our new stack might have in steady state.

The big driver of most of our decisions was the need to remove circular dependencies between infrastructure components. For example, in our legacy stack, the software for provisioning servers ran on our Compute clusters. But our Compute clusters obviously weren’t turned on within a zone at turn-up time because we had yet to provision any machines.

We’d previously developed a framework for thinking about service dependencies called “layers.” Broadly speaking, services within one layer may only take dependencies on services at lower layers². By adhering to this principle, one can ensure that a service dependency graph is a DAG and absent of cycles. We therefore leveraged this framework as we developed all of the new services we would use to bring up a zone.

Figure 1: Layers framework
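
The layering rule lends itself to a mechanical check. The sketch below is illustrative only: the service names and layer numbers are hypothetical, and it is not a description of Uber’s actual tooling. It flags any dependency that points at a higher layer and, because footnote 2 permits same-layer dependencies, separately checks the graph for cycles.

```python
from typing import Dict, List, Tuple

# Hypothetical services and layer numbers, for illustration only.
LAYERS: Dict[str, int] = {"host-catalog": 0, "provisioning": 1, "compute": 2}


def layer_violations(
    layers: Dict[str, int], deps: Dict[str, List[str]]
) -> List[Tuple[str, str]]:
    """Return (service, dependency) pairs where the dependency sits at a higher layer."""
    return [
        (service, dep)
        for service, targets in deps.items()
        for dep in targets
        if layers[dep] > layers[service]
    ]


def has_cycle(deps: Dict[str, List[str]]) -> bool:
    """Depth-first search that reports whether the dependency graph contains a cycle."""
    in_progress, done = set(), set()

    def visit(node: str) -> bool:
        in_progress.add(node)
        for nxt in deps.get(node, []):
            if nxt in in_progress:
                return True  # back edge: we reached a node that is still being explored
            if nxt not in done and visit(nxt):
                return True
        in_progress.discard(node)
        done.add(node)
        return False

    return any(node not in done and visit(node) for node in deps)


# The legacy situation described above: provisioning ran on Compute,
# while Compute needed provisioned machines.
legacy = {"provisioning": ["compute"], "compute": ["provisioning"], "host-catalog": []}
# A layered arrangement in which every dependency points downward.
layered = {"compute": ["provisioning"], "provisioning": ["host-catalog"], "host-catalog": []}
```

Running `layer_violations(LAYERS, legacy)` reports the provisioning-to-Compute edge, while the `layered` graph passes both checks.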

At the very bottom of our DAG is layer zero. This is the absolute base level of dependencies needed to turn up a new zone. These dependencies, including our catalog of all known hosts, actually exist outside of any Uber-operated zone. This ensures that we can turn up new zones without access to existing Uber infrastructure, which is useful when building out zones in foreign environments or when, in the event of a severe outage, no functional Uber infrastructure is available at all.

To actually dictate how a zone is turned up, we developed our own Infrastructure-as-Code (IaC) components, mostly built on top of Starlark configuration and Go code. In order to facilitate minimal dependencies needed during turn up, the entire suite of IaC can be run off of an engineer’s laptop. Then once the zone is turned up, automated execution of the IaC is cut over to the services running in the zone itself.

All together, our IaC code and layer-zero dependencies are now all we need to turn up a new zone. We’ve turned up 5 production zones using these tools (with 4 more on the way), and our current record time for bootstrapping zonal infrastructure is 3 days. Today, developers often test changes to our IaC code by just spinning up a complete test zone in the cloud. This normally only takes an hour or so.

We like to think of our zones now as self-assembling, much like a Crane.

Steady State

Once a zone has been bootstrapped using the process above, a number of components work to continually ingest new servers, allocate existing servers, and generally maintain overall zone health.

Provisioning and Reclaiming

The first step for any new host in a zone is provisioning. In our legacy stack, individual teams were responsible for installing operating systems and requisite software on whatever hosts they owned. We wanted to move away from this model for 2 reasons. First, host provisioning (particularly for on-prem hardware) is a complicated and error-prone process. Having many teams understand the ins and outs of this process wasn’t scalable. Second, we wanted to centrally control what software was on our servers (more on this later).

For cloud hosts, this process is relatively straightforward. We built an abstraction layer over various cloud providers’ APIs and the process for provisioning a new host is simply a matter of calling into this abstraction layer as well as inserting a record for the host in our host catalog. Every cloud VM created is given an identical image. Once we detect the VM is up and running, we move it to an available pool where it is eligible to be handed out to various teams. Similarly, once we are done using a host, we use our abstraction layer to shut down the VM and remove the record from our host catalog.
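
To make the shape of such an abstraction layer concrete, here is a minimal sketch. Every name in it (`CloudProvider`, `CloudProvisioner`, the pool names, the in-memory provider) is an assumption made for illustration; the post does not describe the real interface. The point is only that provisioning and reclaiming pair a provider call with a host catalog update.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, Set


class CloudProvider(ABC):
    """Hypothetical provider-neutral interface; real provider SDK calls would sit behind it."""

    @abstractmethod
    def create_vm(self, name: str, zone: str, image: str) -> None: ...

    @abstractmethod
    def is_running(self, name: str) -> bool: ...

    @abstractmethod
    def delete_vm(self, name: str) -> None: ...


class InMemoryProvider(CloudProvider):
    """Stand-in provider so the sketch runs without any cloud account."""

    def __init__(self) -> None:
        self.vms: Set[str] = set()

    def create_vm(self, name: str, zone: str, image: str) -> None:
        self.vms.add(name)

    def is_running(self, name: str) -> bool:
        return name in self.vms

    def delete_vm(self, name: str) -> None:
        self.vms.discard(name)


@dataclass
class HostRecord:
    name: str
    zone: str
    pool: str  # "provisioning" or "available" in this sketch


class CloudProvisioner:
    def __init__(self, provider: CloudProvider, catalog: Dict[str, HostRecord], image: str) -> None:
        self.provider, self.catalog, self.image = provider, catalog, image

    def provision(self, name: str, zone: str) -> None:
        # Every VM gets the same image, and a catalog record is created alongside it.
        self.provider.create_vm(name, zone, self.image)
        self.catalog[name] = HostRecord(name, zone, pool="provisioning")

    def promote_if_running(self, name: str) -> None:
        # Once the VM is detected as up, it becomes eligible for assignment.
        if self.provider.is_running(name):
            self.catalog[name].pool = "available"

    def reclaim(self, name: str) -> None:
        # Cloud reclaim: shut the VM down and drop its catalog record.
        self.provider.delete_vm(name)
        del self.catalog[name]
```

Supporting another cloud provider would then mean adding one more `CloudProvider` implementation, with the provisioner and catalog logic unchanged.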

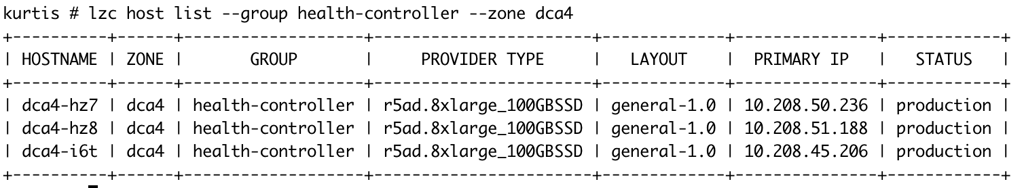

Figure 2: A sample of hosts from our host catalog

The story is much more complicated for our on-prem hardware. For a physical server, we first insert a record into our host catalog based on information from our physical asset tracker. Then, utilizing an in-house DHCP server that can respond to NetBoot (PXE) requests and some software to help us ensure that a host will always first attempt to boot from the network, we image the machine. The image we stamp on the boot drive of the server is almost identical to the one we use for our cloud VMs. After some light disk setup, we reboot the host. If we detect that provisioning has completed successfully, the host is moved to an available pool where it is eligible to be handed out to various teams.

Reclaiming a host is also more complicated on-prem. When a server is returned to us, we can’t just shut off a VM like we do in the cloud. We actually send the host back into our provisioning system. This helps us wipe the hard drive and “recycle” the server for future use by a different team. Once a server has reached the end of its life span, we then have a separate flow for preparing the server for full decommissioning. Only at this point of decommission do we actually remove the record of the host from our host catalog.
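
The difference between the cloud and on-prem flows can be summarized as a small transition table. This is a sketch under stated assumptions: the state and event names are invented for illustration, and the state from which a server enters decommissioning is a guess, since the post only says that a separate flow exists.

```python
from typing import Dict, Optional, Tuple

# Hypothetical state and event names. A next state of None means the
# host's record is removed from the host catalog.
TRANSITIONS: Dict[Tuple[str, str, str], Optional[str]] = {
    # (environment, current state, event) -> next state
    ("cloud", "provisioning", "provisioned"): "available",
    ("cloud", "available", "assigned"): "in_use",
    ("cloud", "in_use", "returned"): None,  # VM is shut down, record removed
    ("on_prem", "provisioning", "provisioned"): "available",
    ("on_prem", "available", "assigned"): "in_use",
    ("on_prem", "in_use", "returned"): "provisioning",  # wiped and recycled
    ("on_prem", "available", "end_of_life"): "decommissioning",  # assumed entry point
    ("on_prem", "decommissioning", "decommissioned"): None,  # record removed only now
}


def next_state(environment: str, state: str, event: str) -> Optional[str]:
    """Look up the next state; transitions not in the table raise KeyError."""
    return TRANSITIONS[(environment, state, event)]
```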

Once provisioning is complete, both cloud and on-prem hosts look essentially identical. They’re running the same operating system and their records in our host catalog have identical schemas. With the exception of our provisioning and reclamation software, the rest of our stack (and every platform above us) is completely agnostic to whether or not it’s running on-prem or in the cloud. And, if in the cloud, our software is agnostic to the cloud provider. Most software just operates on the consistent view of the fleet provided by our host catalog. It’s hard to overstate the flexibility and power this gives us.

Assignment

Figure 3: High-level life cycle of a host. On-prem and cloud specific flows are abstracted away.

Hosts/VMs sitting in our available pool are handed out to various teams on the basis of “Credits.” Credits are a promise of resources to a specific team in a specific zone, with our unit of resource being a single server of a particular hardware configuration.

Our assignment system periodically scans our host catalog for unfulfilled credits. When our system sees an unfulfilled credit, the system compares the resource requirements of the credits and requirements of the team to the available hosts. When evaluating what host to assign, our system considers several factors in addition to credits:

- Failure domain diversity: We will attempt to give teams hardware that is located in a different failure domain (e.g., a server rack) than their current hosts. We also allow teams to specify minimum diversity requirements (e.g., “never put more than 5 of my hosts in the same rack”).

- Disk configuration: All hosts are pre-provisioned, including the partitioning and file systems used for their disks. We ensure that teams get hosts with a disk configuration matching what they have specified in their config.

Assignment is done by changing the server’s “group” in our host catalog, allowing anyone to easily see which hosts are being used for what purpose. Once a host is assigned to a group, the software that a team has specified to run on their hosts will automatically start. This allows teams to quickly increase capacity without manual intervention.
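
The selection logic described in this section might be sketched as follows. The field names, the `"available"` group label, and the tie-breaking rule are assumptions for illustration rather than the actual assignment system: a host qualifies if it matches the credit’s hardware and disk configuration and would not exceed the team’s per-rack limit, and among qualifying hosts the sketch prefers the rack where the team has the fewest hosts.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    name: str
    rack: str
    hardware: str
    disk_config: str
    group: str = "available"  # hypothetical label for the unassigned pool


@dataclass
class Credit:
    team: str
    hardware: str
    disk_config: str
    max_per_rack: int  # the team's stated diversity requirement


def pick_host(credit: Credit, hosts: List[Host]) -> Optional[Host]:
    """Choose an available host that satisfies the credit, or None if there is none."""
    # Count how many hosts the team already has in each rack.
    rack_counts = Counter(h.rack for h in hosts if h.group == credit.team)
    candidates = [
        h
        for h in hosts
        if h.group == "available"
        and h.hardware == credit.hardware
        and h.disk_config == credit.disk_config
        and rack_counts[h.rack] < credit.max_per_rack
    ]
    if not candidates:
        return None
    # Prefer the rack where the team currently has the fewest hosts.
    return min(candidates, key=lambda h: (rack_counts[h.rack], h.name))


def fulfill(credit: Credit, hosts: List[Host]) -> Optional[Host]:
    """Assign a host by changing its group, mirroring the catalog update described above."""
    host = pick_host(credit, hosts)
    if host is not None:
        host.group = credit.team
    return host
```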

Host Homogeneity

Previously, teams had wide latitude to customize the operating systems on subsets of hosts to their individual needs. This led to fragmentation, making it complex and difficult to perform fleetwide operations like OS, kernel, and security updates, to port the infrastructure to new environments, and to troubleshoot production issues.

In response, we pushed all team-specific workloads into containers, and enforced that every host is identical at the OS layer, containing only the essentials: a container runtime and general identity and observability services.

Unlike a strict “immutable infrastructure” approach, we use Dominator to update the OS image on running hosts in place, which avoids mass reboots for every update. Dominator also enforces that any change that causes a host to deviate from the expected state is immediately reverted (e.g., any files or packages installed that are not part of our golden OS image are quickly removed). It accomplishes this by communicating with an agent, subd, that is present on every host. Dominator runs across all zones and across all providers.
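
The underlying idea of converging a host toward a golden image can be illustrated with a small comparison of file digests. This sketch is deliberately generic: it does not use or describe Dominator’s real API or algorithm, and the paths and digests are invented. It only shows how drift splits into files to rewrite and files to delete.

```python
from typing import Dict, List, Tuple


def plan_reconcile(
    golden: Dict[str, str], actual: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Compare path -> content-digest maps and return (paths to write, paths to delete)."""
    # Anything missing from the host, or present with different content, is rewritten.
    to_write = sorted(path for path, digest in golden.items() if actual.get(path) != digest)
    # Anything on the host that the golden image does not contain is removed.
    to_delete = sorted(path for path in actual if path not in golden)
    return to_write, to_delete


def reconcile(golden: Dict[str, str], actual: Dict[str, str]) -> Dict[str, str]:
    """Return the host state after applying the plan, without mutating the input."""
    to_write, to_delete = plan_reconcile(golden, actual)
    result = dict(actual)
    for path in to_write:
        result[path] = golden[path]
    for path in to_delete:
        del result[path]
    return result
```

After `reconcile`, the host’s file map equals the golden image, which is the property that makes fleetwide operations predictable.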

As a result, we are much better positioned to perform centralized operations like upgrades or ports to new environments without having to address many unique host configurations individually.

Bad Host Detection and Remediation

Figure 5: The process for detecting a bad host

One of the largest problems with our legacy stack was the detection and remediation of “bad hosts” (i.e., hosts with some sort of hardware problem). This was a problem that every team using dedicated hardware was essentially left to solve on their own, leading to:

- General confusion: What constitutes bad hardware is somewhat subjective. Which SEL events are truly important? What SMART counters (and thresholds for those counters) are most appropriate for monitoring disk health? Does out-of-date firmware count as a hardware problem? In a multi-cloud world, there are also host health events provided by each cloud vendor. How do we react to these in a holistic manner?

- Duplicate effort: Because every team was responsible for their own hardware, we often ended up solving the same problems over and over again.

In Crane, we centralized our definitions of what constitutes bad hardware and captured these definitions in the actual bad hardware detection code itself. All of this detection code was then placed in a single service, aptly named Bad Host Detector (BHD).

We run an instance of BHD for each zone. Periodically, BHD gets a list of all hosts in its zone from our host catalog and scans them for problems. When it finds a problem, it records it in a central database. Another service, Host Activity Manager, takes action on these issues by coordinating with the appropriate workload scheduler (determined by the host’s group) to drain workloads and reclaim the host. In the cloud, we simply stop the VM at this point. For on-prem, the host is directed to a special repair queue where any necessary fixes are made before heading back out to production.
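
A single detection pass of this kind could look roughly like the sketch below. The check, its threshold, the metric name, and the remediation labels are all hypothetical; the real definitions live in BHD itself and are not given in this post. The sketch shows the separation the text describes: detection only records problems, and a separate step decides what to do based on where the host runs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class FleetHost:
    name: str
    environment: str  # "cloud" or "on_prem"
    metrics: Dict[str, int] = field(default_factory=dict)


# A check inspects one host and returns a problem description, or None if healthy.
Check = Callable[[FleetHost], Optional[str]]


def reallocated_sectors(host: FleetHost) -> Optional[str]:
    # Hypothetical metric name and threshold, chosen only for illustration.
    if host.metrics.get("smart_reallocated_sectors", 0) > 50:
        return "disk: too many reallocated sectors"
    return None


def scan(hosts: List[FleetHost], checks: List[Check]) -> List[Dict[str, str]]:
    """One detection pass: run every check on every host and record what is found."""
    problems = []
    for host in hosts:
        for check in checks:
            issue = check(host)
            if issue is not None:
                problems.append({"host": host.name, "issue": issue})
    return problems


def remediation_for(host: FleetHost) -> str:
    """After workloads are drained, cloud VMs are stopped and on-prem hosts go to repair."""
    return "stop_vm" if host.environment == "cloud" else "repair_queue"
```

Because each check is just a function, a centralized definition of “bad” amounts to maintaining one shared list of checks.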

In addition to scanning for hardware problems ourselves, for cloud zones we also periodically ingest host health events provided by our cloud providers. These events are treated like any other hardware issue, using the exact same remediation flow described above.

One advantage of recording all detected hardware problems is that we can run queries on our problem database. This allows us to observe historical trends across multiple dimensions (e.g., zone or hardware type). We can also do real-time monitoring to catch hosts that are continuously “cycling” in and out of production, something that could indicate a deeper issue for which we’re missing detection.
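
As an example of the kind of query this enables, the sketch below flags hosts that were detected as bad several times within a recent window. The record shape, window, and threshold are assumptions for illustration, not the schema of the real problem database.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def cycling_hosts(
    records: Iterable[Tuple[str, int]], now: int, window: int, threshold: int
) -> List[str]:
    """Return hosts with at least `threshold` detections in the last `window` seconds.

    Each record is a (host name, detection timestamp in seconds) pair.
    """
    recent = Counter(host for host, ts in records if now - window <= ts <= now)
    return sorted(host for host, count in recent.items() if count >= threshold)
```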

Config and Change Management

The Crane stack is highly config driven (i.e., Infrastructure-as-Code). This means we require a robust config solution that allows us to express our intent rather than having to write the exact config that is used by our automation. In other words, instead of writing:

We prefer to write:

Figure 7: Desirable Cluster Configuration

The second form has several benefits:

- The developer is saved from writing what is essentially the same config 4 times

- Schema uniformity of config is enforced

- Copy and paste errors are prevented

- It’s obvious what is different between each cluster and what is the same

We can use this higher-level form to generate/materialize the YAML above, which is what we then actually distribute to our infrastructure.
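
The intent-based style can be sketched in Python, which is close in syntax to Starlark. Everything specific here is an assumption made for illustration: the cluster names, the config keys, and the default values are invented, and JSON stands in for the YAML output because Python’s standard library has no YAML writer. The zone names `phx4` and `dca1` are the ones used later in this post.

```python
import json
from typing import Dict, List


def cluster(name: str, zone: str, **overrides) -> Dict[str, object]:
    """Build one cluster's config from shared defaults plus explicit overrides."""
    config: Dict[str, object] = {
        "name": name,
        "zone": zone,
        "scheduler": "default",  # hypothetical shared setting
        "max_hosts": 1000,       # hypothetical shared setting
    }
    # Rejecting unknown keys keeps the schema uniform and catches typos early.
    unknown = set(overrides) - set(config)
    if unknown:
        raise ValueError(f"unknown config keys: {sorted(unknown)}")
    config.update(overrides)
    return config


# Four clusters from one definition; only the differences are spelled out.
CLUSTERS: List[Dict[str, object]] = [
    cluster("batch", "phx4"),
    cluster("batch", "dca1"),
    cluster("stateless", "phx4", max_hosts=5000),
    cluster("stateless", "dca1", max_hosts=5000),
]


def materialize(clusters: List[Dict[str, object]]) -> str:
    """Serialize the generated config; JSON is a stand-in for the distributed YAML."""
    return json.dumps(clusters, indent=2, sort_keys=True)
```

Only the `max_hosts` override distinguishes the two kinds of cluster, which is exactly the difference a reader needs to see.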

We evaluated both Jsonnet and Starlark for this purpose and decided on Starlark, which is what we use in our above example. In addition to general config generation, we needed a way to make controlled, safe changes to our infrastructure. With the power of Starlark in hand, we’ve developed what we call rollout files, which allow us to generate/materialize configs per zone³.

In the above example, the getAgentVersion function will generate different values depending on the provided zone and what zones are present in the rollout.yaml file. For example, the config generated for the zone “phx4” will have the latest version, but the config generated for “dca1” will have the stable version. In this way, we can gradually change our generated/materialized config (i.e., the value for the agent version) across all zones by adding new zones to our rollout.yaml file, one at a time. Rollbacks are also quite simple: to roll back our agent version for a zone, just remove it from the list in the rollout.yaml file.

In our example above, all of this behavior is abstracted behind the getAgentVersion function, so any part of our config that needs this agent version can just use this function and know they’ll get the safe/correct, generated values. One can imagine how this logic generalizes to be used for arbitrary config values.
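
A minimal Python sketch of the same idea follows. The post’s own config is written in Starlark, and the rollout schema and version numbers below are assumptions; only the behavior (listed zones get the latest version, all others stay on stable) comes from the description above.

```python
from typing import Dict, List

# Stand-in for the parsed contents of rollout.yaml (hypothetical schema).
ROLLOUT: Dict[str, List[str]] = {"zones": ["phx4"]}

# Hypothetical version numbers.
STABLE_VERSION = "1.4.0"
LATEST_VERSION = "1.5.0"


def get_agent_version(zone: str, rollout: Dict[str, List[str]]) -> str:
    """Zones listed in the rollout get the latest version; all others stay on stable."""
    return LATEST_VERSION if zone in rollout["zones"] else STABLE_VERSION
```

With this rollout, `get_agent_version("phx4", ROLLOUT)` yields the latest version and `get_agent_version("dca1", ROLLOUT)` yields the stable one. Adding `"dca1"` to the list advances that zone, and removing it rolls the zone back.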

All of our config is stored in source control. By leveraging automation that adds new zones to our rollout files, one commit at a time, we can safely roll out config changes. We call this automation Low Level Config (LLC). It allows us to essentially use feature flags, but with a very minimal set of dependencies. Note that our rollout files are raw YAML; they are the one part of our config that doesn’t use Starlark. We do this because rollout information needs to be directly modifiable by automation, and YAML is simple enough for humans to read.

Our actual implementation of LLC is slightly more complicated and more ergonomic than the example above, but the general principles are the same. With configurable wait times between zone additions and customizable metrics monitoring for triggering automatic rollbacks, we now run hundreds of critical config changes across dozens of teams every week.
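
The zone-by-zone loop with waiting and automatic rollback might be sketched like this. It is an assumption-laden illustration rather than LLC itself: the health check and wait are passed in as plain callables, and appending to a list stands in for committing a change to the rollout file.

```python
from typing import Callable, List


def roll_out(
    all_zones: List[str],
    rollout_zones: List[str],
    is_healthy: Callable[[str], bool],
    wait: Callable[[], None],
) -> bool:
    """Add zones to the rollout one at a time; undo the latest addition if it looks unhealthy.

    Returns True if every zone was added, False if the rollout stopped early.
    """
    for zone in all_zones:
        if zone in rollout_zones:
            continue  # already rolled out
        rollout_zones.append(zone)  # in practice: one commit to the rollout file
        wait()  # configurable soak time between zone additions
        if not is_healthy(zone):
            rollout_zones.remove(zone)  # automatic rollback for this zone
            return False
    return True
```

Stopping at the first unhealthy zone keeps the blast radius of a bad change to a single zone, in line with footnote 3.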

¹ This was a major pain point of our legacy system. Our previous host catalog was often inaccurate, causing us to make many incorrect decisions about how any given server should be handled.

² We also allow the exceptional case of component A taking a dependency on component B when they’re at the same layer, as long as B does not have a dependency on A.

³ At our lowest levels of infrastructure, we consider a zone to be a unit of deployment. It’s an acceptable blast radius for us. In other words, we’re OK with a bad change taking down a single zone.

Where We Go from Here

We’re currently wrapping up our transition from our legacy stack to Crane. As we do so, we’ve started on some of the new projects it unlocks, such as:

- Expanding our cloud abstraction layer to support more cloud providers

- Dramatically improving the types of signals we can use to detect bad hardware

- Detecting bad network devices in addition to bad servers

- Supporting new CPU architectures (e.g., arm64)

- Executing centralized kernel and OS distro upgrades

- Taking advantage of elastic cloud capacity

Crane has arguably been one of the largest engineering endeavors in which Uber has ever engaged. It represents a large cultural shift, from an organization defined by bespoke, manual toil to one defined by heavy use of standardized automation. It required collaboration from many teams. With the new, solid foundation provided by Crane, we look forward to continuing to collaborate and iterate with everyone in Uber infrastructure on these improvements and more.

Kurtis Nusbaum

Kurtis Nusbaum is a Senior Staff Engineer on the Uber Infrastructure team in Seattle. He works on highly-reliable distributed systems for managing Uber’s server fleet.

Tim Miller

Tim Miller is a Software Engineer on the Uber Infrastructure team in Seattle, focused on the operating system, kernel, security and upgrades of Uber's production hosts.

Brandon Bercovich

Brandon Bercovich is a Software Engineer on the Uber Infrastructure team in San Francisco. He works on the capacity management systems for allocating servers across Uber’s server fleet.

Bharath Siravara

Bharath Siravara is an Engineering Director on the Uber Infrastructure team in Seattle. He supports several teams including server fleet management and Uber’s Compute Platform.

Posted by Kurtis Nusbaum, Tim Miller, Brandon Bercovich, Bharath Siravara
