From Monitoring to Observability: Our Ultra-Marathon to a Cloud-Native Platform

6 January / Global

Introduction

Managing a global corporate network at Uber’s scale can feel a bit like running an ultra-marathon. There are long stretches of smooth sailing, but you’re always preparing for the unexpected mountain pass or sudden change in weather. For years, our engineering teams have navigated this terrain with a traditional, monolithic monitoring system. Frankly, it felt like running in heavy hiking boots—sturdy, but slow, inflexible, and exhausting to scale up any hill.

We knew we needed to switch to a modern pair of carbon-fiber running shoes. That meant a complete overhaul: replacing our legacy system with a cloud-native observability platform, built on an open-source stack for speed, flexibility, and endurance.

Training Plan: Scope and Vision

Before diving deeper, it’s important to clarify where this system operates and what we wanted to achieve.

The Scope: Uber’s Corporate Network

The CorpNet Observability Platform focuses exclusively on Uber’s corporate network—the infrastructure that connects offices, data centers, cloud environments, and internal services.

It’s not a production telemetry platform; instead, it monitors and analyzes:

- Network and infrastructure devices like switches, routers, PDUs, and IoT sensors

- Connectivity, latency, and device health across Uber’s internal regions

- Operational data flows supporting enterprise networking and internal applications

The mission is simple: make Uber’s internal network as observable, reliable, and automated as the systems it supports.

The Vision: Upgrading Our Gear with Open Source

Our vision was to build a new system on the pillars of data quality, scalability, and actionable data. We chose a foundation of best-in-class open-source tools.

| Function | Technology | Why It Matters |
| --- | --- | --- |
| Metrics Collection | Telegraf™ | A lightweight, agile runner, able to handle diverse terrains (SNMP, API, and MQTT) without breaking stride. |
| Metrics Storage | Prometheus® and Thanos™ | Prometheus handles the quick climbs (real-time metrics), while Thanos ensures endurance with long-term storage and global reach. |
| Visualization | Grafana® and Kibana® | The panoramic viewpoints along the route, giving clarity and context when the trail gets steep. |
| Metadata and Search | Elasticsearch® | The trail map and compass: indexing every step, making it easy to retrace paths or spot patterns. |

A Cloud-Native Architecture Built for the Long Run

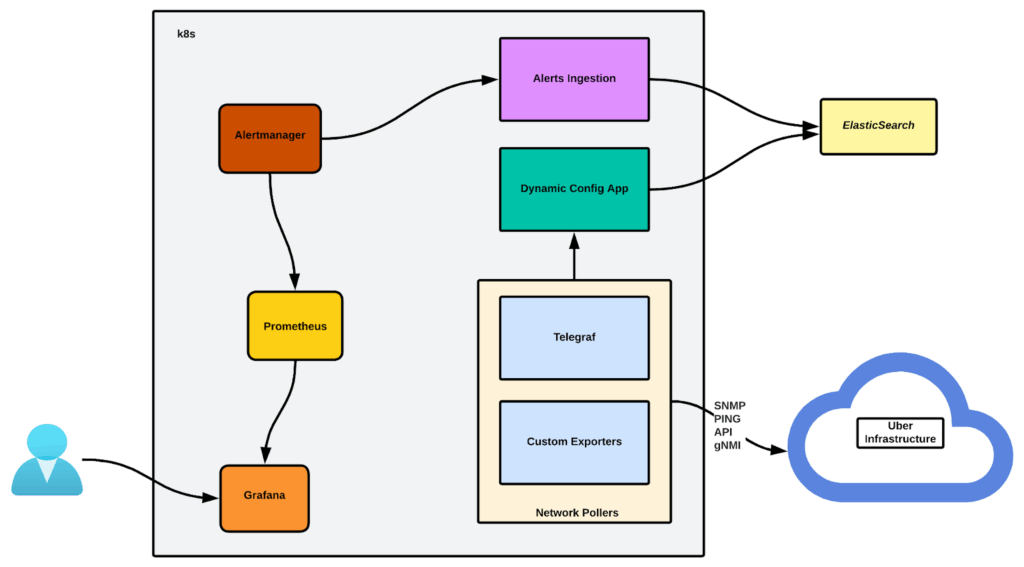

To achieve our vision, we adopted a cloud-native, microservices-based architecture deployed on Kubernetes®. Each component is a modular, containerized service that communicates via APIs, making the system open to new integrations.

The system is deployed globally across regions (USC, EMEA, and APAC). This is like having well-stocked aid stations along the race course, ensuring our monitoring probes provide accurate, low-latency measurements by being geographically close to our devices.

Running on Kubernetes also makes the system resilient: if a component stumbles, Kubernetes restarts it automatically, keeping the platform highly available.

The rest of the stack is open source and built to scale. Prometheus stores core time-series metrics, and Thanos extends it with long-term storage and a global query view. Telegraf is our versatile data collection agent, while Grafana and Kibana provide unified dashboards for metrics and alerts.

Finding the Rhythm on the Mountain: The Architecture Behind the Run

Our observability platform is built like a trail race—every component works in sync to keep momentum on unpredictable terrain.

Keeping Up the Pace: Dynamically Tracking Network Changes

In trail running, staying still means falling behind. The terrain changes under your feet—the weather, the altitude, the fatigue—and success depends on how fast you adapt.

Our observability system faces a similar challenge. Without adaptability, it’d be like running the same loop forever—predictable, static, and quickly outdated.

That’s why we introduced the Dynamic Config App, a service that keeps the entire monitoring system moving in rhythm with Uber’s corporate network.

Before this, Telegraf pollers relied on static configuration files: great for a short sprint, but not for a long, evolving route. Every time a new device appeared in the inventory, a router changed region, or a device was put into maintenance, those files had to be edited and redeployed by hand. It was functional, but it couldn't scale or keep pace with a live, global environment.

The Dynamic Config App changed that completely.

This service dynamically tracks updates in the inventory stored in Elasticsearch, which is continuously refreshed from ServiceNow® (the source of truth). Whenever a device is added, removed, or reassigned, the Config App immediately reflects that change in Telegraf’s configuration—no manual edits, no redeploys.

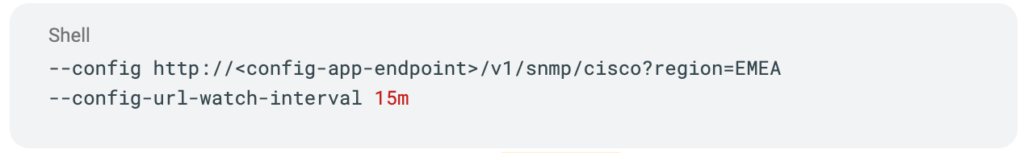

Each Telegraf agent is launched with a configuration URL whose path and query parameters make it self-adjusting:

For a URL such as /v1/snmp/cisco?region=EMEA, the app returns only the devices that agent should poll, filtered by region, site, or device type.
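To make the filtering concrete, here is a minimal Python sketch of what a config endpoint could do with such a URL. It is not the Dynamic Config App's actual code: the `Device` fields, the `/v1/snmp/<vendor>` path shape, skipping devices in maintenance, and the SNMP community are all assumptions, and the rendered `[[inputs.snmp]]` section lists only agents, leaving out the field and table definitions a real Telegraf config would need.

```python
from dataclasses import dataclass
from urllib.parse import parse_qs, urlsplit


@dataclass(frozen=True)
class Device:
    # Hypothetical inventory record, as mirrored from ServiceNow into Elasticsearch.
    hostname: str
    region: str
    site: str
    vendor: str
    maintenance: bool = False


def select_devices(inventory, vendor, region=None, site=None):
    """Return the devices one agent should poll; None means 'do not filter on this tag'."""
    return [
        d for d in inventory
        if d.vendor == vendor
        and (region is None or d.region == region)
        and (site is None or d.site == site)
        and not d.maintenance  # assumption: devices in maintenance are not polled
    ]


def render_snmp_config(devices, community="public"):
    """Render a minimal Telegraf [[inputs.snmp]] section (agents only, no field definitions)."""
    ordered = sorted(devices, key=lambda d: d.hostname)
    agents = ", ".join(f'"udp://{d.hostname}:161"' for d in ordered)
    lines = [
        "[[inputs.snmp]]",
        f"  agents = [{agents}]",
        "  version = 2",
        f'  community = "{community}"',
    ]
    return "\n".join(lines) + "\n"


def config_for_url(inventory, url):
    """Serve a config for a hypothetical URL shape /v1/snmp/<vendor>?region=...&site=..."""
    parts = urlsplit(url)
    vendor = parts.path.rstrip("/").split("/")[-1]
    params = {key: values[0] for key, values in parse_qs(parts.query).items()}
    devices = select_devices(inventory, vendor, params.get("region"), params.get("site"))
    return render_snmp_config(devices)


inventory = [
    Device("ams-sw1", "EMEA", "AMS1", "cisco"),
    Device("ams-sw2", "EMEA", "AMS1", "cisco", maintenance=True),
    Device("sfo-rt1", "USC", "SFO1", "cisco"),
    Device("lon-fw1", "EMEA", "LON1", "juniper"),
]
print(config_for_url(inventory, "/v1/snmp/cisco?region=EMEA"))
```

Because the filter is evaluated on every request, an agent never holds device lists itself; moving a device to another region changes which URL's output includes it.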

With this setup, Telegraf periodically checks the configuration URL to see if anything has changed. If the Last-Modified timestamp differs, it automatically reloads its configuration.
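The reload decision only works if the server produces a Last-Modified value that changes exactly when an agent's config changes. One way to achieve that, sketched below as an assumption rather than the app's documented design, is to hash the rendered body per URL and record when the hash last changed. The `ConfigVersionTracker` class is hypothetical, and `should_reload` is a simplified model of the agent-side comparison, not Telegraf's implementation.

```python
import hashlib
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


class ConfigVersionTracker:
    """Hypothetical helper: remembers when each config URL's rendered body last changed."""

    def __init__(self):
        self._state = {}  # url -> (sha256 hex digest of body, time the body last changed)

    def last_modified(self, url, body, now=None):
        if now is None:
            now = datetime.now(timezone.utc)
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        previous = self._state.get(url)
        if previous is None or previous[0] != digest:
            self._state[url] = (digest, now)  # content changed: move the timestamp forward
        # HTTP-date format, e.g. "Mon, 06 Jan 2025 09:00:00 GMT"
        return format_datetime(self._state[url][1], usegmt=True)


def should_reload(previous_header, current_header):
    """Simplified model of the agent-side check: reload when Last-Modified differs."""
    return previous_header != current_header


tracker = ConfigVersionTracker()
url = "/v1/snmp/cisco?region=EMEA"
t0 = datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc)
h1 = tracker.last_modified(url, 'agents = ["udp://ams-sw1:161"]', now=t0)
h2 = tracker.last_modified(url, 'agents = ["udp://ams-sw1:161"]', now=t0 + timedelta(minutes=5))
h3 = tracker.last_modified(
    url, 'agents = ["udp://ams-sw1:161", "udp://ams-sw3:161"]', now=t0 + timedelta(minutes=10)
)
print(should_reload(h1, h2), should_reload(h2, h3))  # False True
```

Hashing the rendered output means an added, removed, or reassigned device bumps the timestamp only for URLs whose device set actually changed, so agents serving other regions keep running untouched. This tracker keeps its state in memory; several replicas of such a service would need shared storage to agree on timestamps.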

This mechanism allows us to:

- Instantly redistribute polling workloads across regions (USC, EMEA, and APAC)

- Filter configurations by tags such as region, site, or device type

- Roll out new collectors or plugin changes globally, without redeploying agents

- Maintain full alignment with the live network inventory at all times

The result? A system that never stands still.

Just as a good ultrarunner adapts to every climb and descent, our observability platform adapts to every change in the corporate network—automatically, continuously, and effortlessly.

Finding the Trail Beyond the Map: From Monitoring to Observability

Monitoring answers “Is it up?”

Observability answers “Why does it feel slow uphill?”

We transitioned from static metrics to context-rich observability, powered by automation and smart data flow.

In trail running, checkpoints tell you where you’ve been, but not how you’re doing. They confirm you’re still on the course—but they don’t explain why your pace has dropped, where the next climb begins, or what’s happening behind the next ridge.

Traditional monitoring systems are like those checkpoints. They tell you if something’s up or down, but not why or what’s next. Observability, on the other hand, is about understanding the entire race—the terrain, the conditions, and how every part of the system moves together.

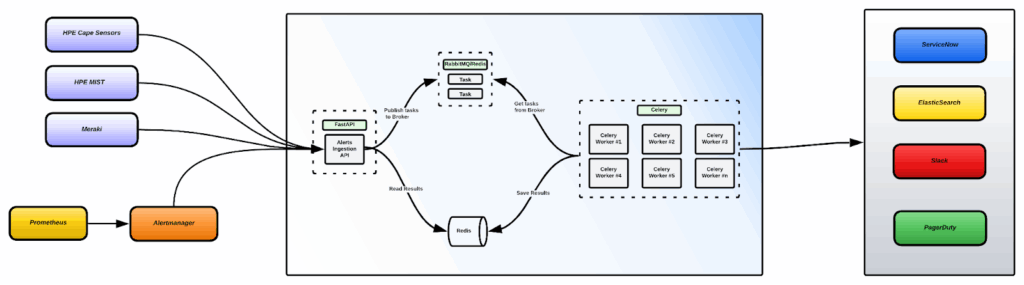

That shift—from monitoring to observability—is where the Alert Ingestion App comes into play.

If Telegraf and the Dynamic Config App represent the legs and lungs of our system, the Alert Ingestion App is its coach and race strategist.

Built on FastAPI™, Celery™, and Redis®, this service listens to every signal along the route—not just from Prometheus alerts, but also from third-party systems like Meraki®, HPE MIST™, and other webhook integrations.

It ingests alerts asynchronously, processes them in parallel, and ensures that critical events reach the right teams without flooding Slack® or PagerDuty® with noise.
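The pattern of accepting an alert quickly and processing it later can be illustrated with the standard library alone. This is a deliberate substitution, not the FastAPI, Celery, and Redis code: `asyncio.Queue` stands in for the Redis broker and the worker coroutines stand in for Celery workers. Unlike Celery's worker processes, these coroutines run concurrently in one thread rather than truly in parallel. The `enrich` callback is a hypothetical placeholder for enrichment and routing.

```python
import asyncio


async def ingest(payloads, process, workers=4):
    """Queue incoming alert payloads and drain them with a pool of worker coroutines.

    asyncio.Queue stands in for the Redis broker and the coroutines for Celery workers.
    """
    queue = asyncio.Queue()
    results = []

    async def worker():
        while True:
            payload = await queue.get()
            try:
                results.append(await process(payload))
            except Exception as exc:  # keep the worker alive; a real system would retry or dead-letter
                results.append(exc)
            finally:
                queue.task_done()

    tasks = [asyncio.create_task(worker()) for _ in range(workers)]
    for payload in payloads:
        queue.put_nowait(payload)  # a webhook handler would return right after this step
    await queue.join()  # wait until every queued alert has been processed
    for task in tasks:
        task.cancel()
    await asyncio.gather(*tasks, return_exceptions=True)
    return results


async def enrich(alert):
    # Hypothetical placeholder for enrichment and routing work.
    await asyncio.sleep(0.01)
    return f"{alert['source']}:{alert['name']}"


alerts = [
    {"source": "prometheus", "name": "InterfaceDown"},
    {"source": "meraki", "name": "APOffline"},
    {"source": "mqtt", "name": "RackTempHigh"},
]
print(sorted(asyncio.run(ingest(alerts, enrich, workers=2))))
```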

Think of it as an intelligent checkpoint system:

- It filters and prioritizes alerts, focusing only on what matters most

- It groups similar events, like multiple runners crossing the same timing mat together—reducing alert storms

- It adds context and correlation, so engineers can see why an issue occurred, not just that it did

- And it updates in real time, posting alerts once and editing them as their state changes—no duplicate noise, no confusion
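The grouping and post-once-then-edit behavior can be sketched as a small state machine. Everything here is hypothetical: the grouping key (alert name plus site), the alert fields, and the outbox of `post`/`edit` actions. A real integration would call the Slack or PagerDuty APIs where this sketch appends to a list.

```python
from dataclasses import dataclass, field


@dataclass
class AlertRouter:
    """Hypothetical router: one chat message per alert group, edited as the group changes."""

    posted: dict = field(default_factory=dict)   # group key -> message id
    members: dict = field(default_factory=dict)  # group key -> {fingerprint: status}
    outbox: list = field(default_factory=list)   # ("post" | "edit", message id, text)
    next_id: int = 1

    @staticmethod
    def group_key(alert):
        # Assumption: alerts with the same name at the same site form one group.
        return (alert["name"], alert["site"])

    def handle(self, alert):
        key = self.group_key(alert)
        group = self.members.setdefault(key, {})
        if group.get(alert["fingerprint"]) == alert["status"]:
            return  # repeat of a state already reported: drop it as noise
        group[alert["fingerprint"]] = alert["status"]
        firing = sum(1 for status in group.values() if status == "firing")
        text = f"{key[0]} at {key[1]}: {firing}/{len(group)} firing"
        if key in self.posted:
            self.outbox.append(("edit", self.posted[key], text))  # update the existing message
        else:
            self.posted[key] = self.next_id
            self.next_id += 1
            self.outbox.append(("post", self.posted[key], text))  # first sighting: post once


router = AlertRouter()
for fingerprint, status in [("a1", "firing"), ("a2", "firing"), ("a2", "firing"), ("a1", "resolved")]:
    router.handle({"name": "InterfaceDown", "site": "AMS1", "fingerprint": fingerprint, "status": status})
for action in router.outbox:
    print(action)
```

Four incoming events produce one posted message and two edits; the repeated firing event for `a2` is dropped.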

By routing every alert—from Prometheus, MQTT (Message Queuing Telemetry Transport) sensors, or network APIs—into a single Elasticsearch index, the Alert Ingestion App creates one continuous trail of operational awareness. Engineers can retrace the full journey of an incident, from its first ping to its full resolution, across all systems and regions.
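A single index works when every source is mapped onto one document shape before indexing. The sketch below assumes a hypothetical common schema (`COMMON_FIELDS`). `from_alertmanager` reads the standard fields of a Prometheus Alertmanager webhook payload (`alerts`, `status`, `labels`, `startsAt`, `endsAt`, `fingerprint`), and assumes alert rules attach a `site` label; `from_vendor_webhook` uses an invented event shape, since real Meraki and Mist payloads differ.

```python
COMMON_FIELDS = {"source", "fingerprint", "name", "status", "site", "started_at", "ended_at"}


def from_alertmanager(payload):
    """Flatten a Prometheus Alertmanager webhook payload into one document per alert."""
    docs = []
    for alert in payload["alerts"]:
        labels = alert["labels"]
        resolved = alert["status"] == "resolved"
        docs.append({
            "source": "prometheus",
            "fingerprint": alert["fingerprint"],
            "name": labels.get("alertname", ""),
            "status": alert["status"],
            "site": labels.get("site"),  # assumption: alert rules attach a 'site' label
            # For firing alerts, endsAt is not yet meaningful, so store None.
            "ended_at": alert["endsAt"] if resolved else None,
            "started_at": alert["startsAt"],
        })
    return docs


def from_vendor_webhook(event):
    """Map a hypothetical third-party event shape; real Meraki or Mist payloads differ."""
    return [{
        "source": event["vendor"],
        "fingerprint": f"{event['vendor']}:{event['id']}",
        "name": event["type"],
        "status": "resolved" if event["state"] == "closed" else "firing",
        "site": event["site"],
        "started_at": event["occurredAt"],
        "ended_at": event.get("closedAt"),
    }]


am_payload = {
    "status": "firing",
    "alerts": [{
        "status": "firing",
        "labels": {"alertname": "InterfaceDown", "site": "AMS1"},
        "annotations": {},
        "startsAt": "2025-01-06T09:00:00Z",
        "endsAt": "0001-01-01T00:00:00Z",
        "fingerprint": "3f2a9c",
    }],
}
vendor_event = {"vendor": "meraki", "id": 42, "type": "APOffline", "state": "open",
                "site": "AMS1", "occurredAt": "2025-01-06T09:01:00Z"}

documents = from_alertmanager(am_payload) + from_vendor_webhook(vendor_event)
for doc in documents:
    print(doc)
```

Because both sources yield identical keys, a single index mapping covers them, and a query on `site` returns the Prometheus and vendor alerts for that site side by side.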

Together with the Dynamic Config App, it transforms a static monitoring setup into a living, breathing observability ecosystem—one that adapts to the terrain, listens to the data, and keeps perfect pace with the ever-changing rhythm of Uber’s corporate network.

Paving the Way for a Personal Best: AIOps and Faster Resolutions

Centralizing all our alert data—both firing and resolved—creates a rich, historical dataset. This is like a detailed training log of every run we’ve ever done, and it’s the perfect fuel for AIOps (AI for IT Operations). This historical context allows us to bring in an elite performance coach.

An AI engine processes all alert sources to provide advanced insights into network behavior patterns. By analyzing past performance, it helps engineers pinpoint root causes faster, significantly lowering our MTTR (Mean Time To Repair) and helping us set a new personal best for incident resolution.
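With firing and resolved alerts stored together, MTTR over a window is the average of (resolved time minus start time) across resolved alerts. The sketch below uses alert start and end times as a proxy for failure and repair, and assumes each alert document carries hypothetical `status`, `started_at`, and `ended_at` fields holding ISO 8601 timestamps with at most microsecond precision.

```python
from datetime import datetime, timedelta


def parse_ts(value):
    # fromisoformat() accepts a trailing "Z" only from Python 3.11, so normalize it first.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def mttr(alerts):
    """Mean time to repair: average of (ended_at - started_at) over resolved alerts."""
    durations = [
        parse_ts(a["ended_at"]) - parse_ts(a["started_at"])
        for a in alerts
        if a["status"] == "resolved" and a.get("ended_at")
    ]
    if not durations:
        return None  # nothing resolved in this window
    return sum(durations, timedelta()) / len(durations)


history = [
    {"status": "resolved", "started_at": "2025-01-06T09:00:00Z", "ended_at": "2025-01-06T09:30:00Z"},
    {"status": "resolved", "started_at": "2025-01-06T10:00:00Z", "ended_at": "2025-01-06T11:30:00Z"},
    {"status": "firing", "started_at": "2025-01-06T12:00:00Z", "ended_at": None},
]
print(mttr(history))  # 1:00:00
```

The two resolved alerts took 30 and 90 minutes, so the mean is one hour; the still-firing alert is excluded until it resolves.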

This architecture also supports interactive AI agents, like a Slack bot, that act as a pacer during an incident. Engineers can ask questions in natural language (“How have alerts for this site code trended over the last 24 hours?”) and get immediate, context-rich answers, helping them push through the toughest parts of an incident.
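How the bot turns a question into a query is not described here. As an illustration only, a question like the one above could map to an Elasticsearch search body with a time-range filter and a `date_histogram` aggregation. The function name and the field names (`site`, `status`, `@timestamp`) are assumptions about the alert index, with `site` and `status` assumed to be mapped as keyword fields.

```python
import json


def site_trend_query(site_code, hours=24, interval="1h"):
    """Build an Elasticsearch search body: alerts for one site, bucketed over time by status."""
    return {
        "size": 0,  # only aggregations are needed, not individual alert documents
        "query": {
            "bool": {
                "filter": [
                    {"term": {"site": site_code}},  # assumes 'site' is a keyword field
                    {"range": {"@timestamp": {"gte": f"now-{hours}h"}}},
                ]
            }
        },
        "aggs": {
            "over_time": {
                "date_histogram": {"field": "@timestamp", "fixed_interval": interval},
                "aggs": {"by_status": {"terms": {"field": "status"}}},
            }
        },
    }


print(json.dumps(site_trend_query("AMS1"), indent=2))
```

Returning hourly buckets split by status lets the bot describe a trend (for example, a spike of firing alerts in one hour) rather than a single total.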

Conclusion: The Finish Line

By migrating to this modern, cloud-native observability platform, we’ve changed our shoes and our entire approach to running the network marathon. We now have:

- Faster finish times. With enriched data and intelligent, de-duplicated alerts, engineers can diagnose and resolve issues much faster.

- Readiness for any terrain. The modular, API-driven design makes the system highly extensible and always open to new integrations, ensuring we’re ready for whatever the course throws at us next.

- Smarter pacing. We’ve eliminated hundreds of thousands of dollars of recurring licensing fees, allowing us to invest those resources in more innovative parts of our strategy.

This project has been a rewarding endurance event. The result is a platform that empowers our teams with the deep insights needed to manage a network at Uber’s scale and paves the way for a future of even smarter, AI-driven network operations.

Cover Photo Attribution: “HOKA UTMB Mont-Blanc 2024 – PTL, CCC, UTMB” by Sportograf, is from the author’s personal gallery.

Celery is an open-source distributed task queue licensed under the BSD License.

FastAPI™ is a trademark of @tiangolo and is registered in the US and across other regions.

Kibana® and Elasticsearch® are registered trademarks of Elasticsearch BV, registered in the U.S. and in other countries. No endorsement by Elasticsearch is implied by the use of these marks.

Kubernetes®, Thanos™, Prometheus® are trademarks or registered trademarks of The Linux Foundation® in the United States and other countries. No endorsement by The Linux Foundation is implied by the use of these marks.

Meraki® is a registered trademark of Cisco Technology, Inc.

MIST™ is a trademark of HPE.

PagerDuty® is a registered trademark of PagerDuty, Inc.

Redis is a registered trademark of Redis Ltd. Any rights therein are reserved to Redis Ltd. Any use by Uber is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and Uber.

ServiceNow® is a registered trademark of ServiceNow, Inc.

Slack® is a registered trademark and service mark of Slack Technologies, Inc.

Telegraf™ is a trademark of InfluxData, which is not affiliated with, and does not endorse, this blog.

Razvan Cicu

Razvan is a Senior Infrastructure Engineer with Uber, based in Amsterdam. He specializes in network automation and monitoring.

Giovanni Pepe

Giovanni Pepe is a Staff Infrastructure Engineer, TLM and head of the corporate network infrastructure at Uber.

Posted by Razvan Cicu, Giovanni Pepe