Introduction

ZFR (zone failure resilience) is a critical aspect of modern distributed systems, especially for real-time analytics platforms like Apache Pinot™ that power many Tier-0 use cases at Uber. As part of our regional resilience initiative, ensuring Pinot can withstand zone failures without impacting queries or ingestion is paramount. This blog details how we’ve achieved zone failure resilience in Pinot by leveraging its instance assignment capabilities, integrating with Uber’s in-house isolation group concept, and consequently accelerating our release processes.

Leveraging Pinot’s Instance Assignment for Cross-Zone Data Distribution

Initially, our Pinot clusters at Uber relied on two key strategies: tag-based instance assignment, which groups servers by tenant to ensure logical isolation, and balanced segment assignment, which spreads data segments evenly across those servers. While effective for distributing data evenly across servers within a tenant, this approach didn’t inherently guarantee distribution across different physical zones. If all instances assigned to a table, or all replicas of a segment, happened to be in a single zone, a failure in that zone would lead to significant service disruption.

To overcome this, we adopted a more sophisticated approach: pool-based instance assignment in conjunction with replica-group segment assignment.

Pool-based instance assignment allows us to organize servers into distinct pools. This strategy is primarily designed to accelerate no-downtime rolling restarts for large shared clusters by ensuring different replica groups are assigned to different server pools.

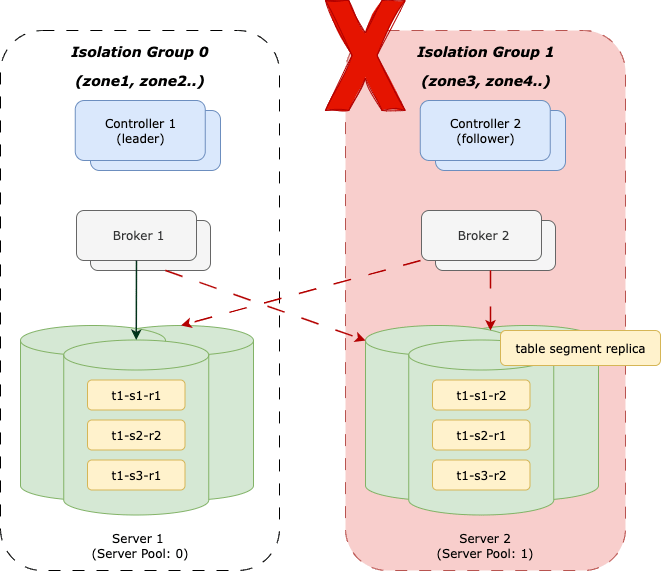

When combined with replica-group segment assignment, the replicas of each data segment are distributed across these defined pools (which correspond to zones). Even if one pool of servers fails, query serving continues because the replica groups in the other pools are still online. For example, if a table has three replicas and two are down, the remaining replica can continue to handle write and read traffic.
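The effect of combining the two strategies can be illustrated with a small Python sketch. It is a simplified model, not Pinot's actual assignment code: the function names, the server IDs, and the round-robin placement are all assumptions made for illustration.

```python
from collections import defaultdict


def build_replica_groups(servers_by_pool, num_replica_groups):
    """Place each replica group entirely inside one pool (hypothetical model)."""
    pools = sorted(servers_by_pool)
    if len(pools) < num_replica_groups:
        raise ValueError("need at least one pool per replica group")
    return {rg: list(servers_by_pool[pools[rg]]) for rg in range(num_replica_groups)}


def assign_segments(segments, replica_groups):
    """Give every segment exactly one server in each replica group."""
    assignment = defaultdict(list)
    for i, segment in enumerate(segments):
        for servers in replica_groups.values():
            # Round-robin inside the replica group to keep load balanced.
            assignment[segment].append(servers[i % len(servers)])
    return dict(assignment)


def segments_still_served(assignment, failed_servers):
    """Return the segments that keep at least one online replica."""
    return {
        segment
        for segment, servers in assignment.items()
        if any(server not in failed_servers for server in servers)
    }


# Two pools standing in for two zones, two servers each.
servers_by_pool = {0: ["s0", "s1"], 1: ["s2", "s3"]}
replica_groups = build_replica_groups(servers_by_pool, num_replica_groups=2)
segments = [f"seg_{i}" for i in range(4)]
assignment = assign_segments(segments, replica_groups)

# Simulate losing every server in pool 0.
served = segments_still_served(assignment, failed_servers=set(servers_by_pool[0]))
print(served == set(segments))
```

In this toy layout every segment keeps a replica in pool 1, so losing all of pool 0 leaves the full data set queryable.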

Integrating with Uber’s Isolation Groups to Abstract Zones

The key to making our Pinot deployments truly zone-resilient lies in integrating with Uber’s in-house infrastructure concept: the virtual resource isolation group. We leverage this concept by using the isolation group ID as the pool number for each Pinot server instance.

Isolation Groups

An isolation group provides an abstraction that makes zone or rack selection transparent to applications deployed on Odin, Uber’s container orchestration platform. Crucially, a node and its replacement node are guaranteed to always belong to the same isolation group, even if the underlying physical host changes. In an ideal scenario where zone isolation is prioritized for isolation groups, multiple groups wouldn’t share a single zone. Therefore, distributing segment replicas across as many different isolation groups as possible inherently means distributing them across as many different zones as possible.

For Pinot to be zone failure resilient, we ensure that the number of isolation groups configured is greater than or equal to the table’s replication factor (that is, the number of replica groups). For most of our clusters, this means configuring at least two isolation groups to match the default replication factor of 2. This setup ensures that even if one zone goes down, we maintain another available isolation group, allowing the cluster to continue operating.
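As a rough sketch of this rule, the Python below builds a table-config fragment and checks it against the available isolation groups. The field names follow the instance-assignment section of the open-source Pinot documentation as best understood here and should be verified against your Pinot version. The helper names and the tenant tag are hypothetical, and the post does not show Uber's actual configuration.

```python
def zfr_table_config(server_tag, num_replica_groups):
    """Build a table-config fragment enabling pool-based, replica-group assignment."""
    return {
        "segmentsConfig": {"replication": str(num_replica_groups)},
        "instanceAssignmentConfigMap": {
            "OFFLINE": {
                # Select servers by tenant tag and respect their registered pools.
                "tagPoolConfig": {"tag": server_tag, "poolBased": True},
                # Form replica groups so each one can be placed in its own pool.
                "replicaGroupPartitionConfig": {
                    "replicaGroupBased": True,
                    "numReplicaGroups": num_replica_groups,
                },
            }
        },
    }


def is_zone_resilient(table_config, isolation_group_ids):
    """True when there are at least as many isolation groups as replica groups."""
    offline = table_config["instanceAssignmentConfigMap"]["OFFLINE"]
    needed = offline["replicaGroupPartitionConfig"]["numReplicaGroups"]
    return len(set(isolation_group_ids)) >= needed


config = zfr_table_config("DefaultTenant_OFFLINE", num_replica_groups=2)
print(is_zone_resilient(config, isolation_group_ids=[0, 1]))
print(is_zone_resilient(config, isolation_group_ids=[0, 0]))
```

The second call fails the check because both servers sit in the same isolation group, so a single zone failure could take out every replica.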

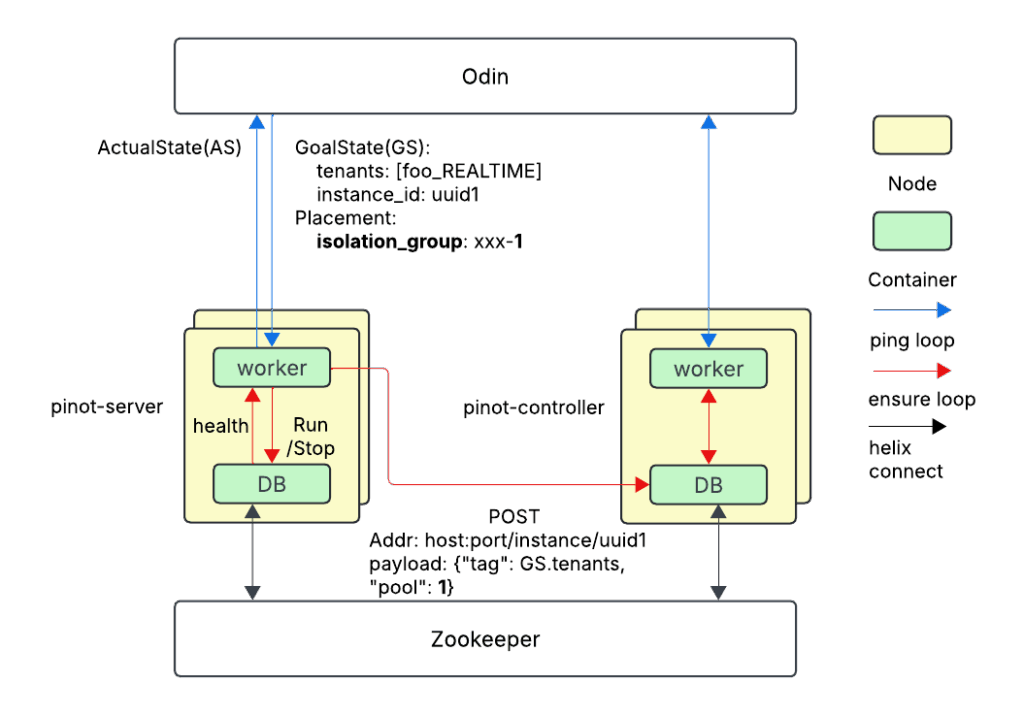

Automatically Registering the Pool

Each node on Odin (akin to a Kubernetes® pod) has a local worker container that periodically runs a job to ensure the database and other sidecar containers converge to the expected GoalState. Besides the GoalState, the worker fetches placement information, including the isolation_group, which is determined by the centralized Uber Odin Scheduler. When the host agent adds a node to a host, the worker container attempts to start the database container. Once the pinot-server database container has spawned successfully, the associated Helix instance ID is already registered in Apache Zookeeper™. The worker then sends an HTTP POST request to the pinot-controller, with the instance ID as a query parameter and the pool number in the payload.
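The registration call might look roughly like the Python sketch below, which only constructs the request and does not send it. The endpoint path, the query parameter name, and the payload shape are all assumptions: the payload mirrors the `pools` map found in open-source Pinot instance configs, and the real controller endpoint the worker calls is not named in this post.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical path; the actual controller endpoint is not specified here.
HYPOTHETICAL_POOL_PATH = "/hypothetical/instances/pool"


def build_pool_registration(controller_url, instance_id, server_tag, isolation_group):
    """Build (but do not send) a POST that registers an instance's pool."""
    # Instance ID travels as a query parameter (parameter name is assumed).
    query = urlencode({"instanceId": instance_id})
    # The isolation group ID is used directly as the pool number.
    body = json.dumps({"pools": {server_tag: isolation_group}}).encode("utf-8")
    return Request(
        url=f"{controller_url}{HYPOTHETICAL_POOL_PATH}?{query}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_pool_registration(
    controller_url="http://pinot-controller:9000",
    instance_id="Server_host1_8098",
    server_tag="DefaultTenant_OFFLINE",
    isolation_group=1,
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Keeping request construction separate from sending makes the mapping from isolation group to pool number easy to unit test inside a worker.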

We use two replicas instead of three because we have two identical regional clusters. If a zone fails, a regional cluster has only one copy of the data, which can lead to slower performance. We’ll discuss our failure recovery plan in a future blog post.

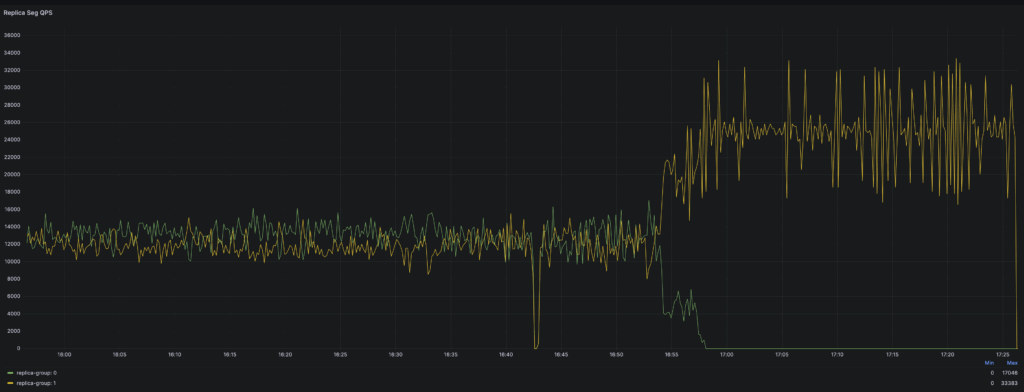

An internal drill confirmed that the other replica can continue serving incoming traffic without query degradation when a single zone is completely down. As shown in Figure 3, when Isolation Group 0 is down, traffic routes to the healthy replica group in Isolation Group 1.

Migrating Pinot Clusters to Zone Failure Setup Seamlessly

At Uber, we manage 400+ Tier 0-1 Pinot clusters and thousands of tables. Migrating them to a zone-failure-resilient setup without causing any degradation was a significant challenge. The migration execution occurred in these steps:

- Pinot Odin worker release. Make the feature of automatic pool registration available for all Odin nodes.

- Backfill Odin isolation group and Pinot server pool. Use Odin-provided workflows to backfill the isolation group value per host, which guarantees the capacity of each isolation group is balanced within the same Odin cluster (which logically corresponds to a Pinot server tenant). Once isolation group values are backfilled, the Pinot Odin worker auto-registers the Pinot server pool. This transforms the abstract Odin zone concept into a Pinot server pool, which is subsequently used for data assignment.

- New tables onboarded to ZFR configurations by default. We have an internal metadata service that manages life cycle operations and table configurations across all Pinot clusters. As part of this, we added logic to automatically enable ZFR configurations by default for new tables.

- Backfill Pinot ZFR configurations and redistribute data. This is the final and most critical stage: backfill all relevant existing Pinot tables with the Pinot ZFR configurations and retrigger table rebalance to redistribute data honoring the new ZFR assignment strategies.

Pinot table configuration backfill is managed by the internal metadata service, which oversees the entire life cycle of Pinot tables. To facilitate migrating Pinot tables to ZFR configurations, we developed a suite of granular migration APIs, enabling migrations at various levels: per table, per server tenant, or per region. These APIs include auditing capabilities and allow for rollbacks if issues arise. After table configurations are migrated, a table rebalance is triggered to redistribute data tenant by tenant.

Our strategy focuses on rebalancing necessary tables only: upsert tables and those with retention longer than six months. For other tables, new data assignments naturally reflect the distribution as old segments are automatically purged after six months. This approach, combined with our internal regional traffic failover strategy as a mitigation tool, allows us to minimize data movement across servers and avoid causing performance degradation on live traffic.
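The selection rule can be expressed as a short filter. This is an illustrative Python sketch only: the `TableInfo` type, the table names, and the 180-day approximation of six months are assumptions, not part of the internal metadata service.

```python
from dataclasses import dataclass

# Assumption: "six months" is approximated as 180 days for this sketch.
SIX_MONTHS_DAYS = 180


@dataclass(frozen=True)
class TableInfo:
    """Hypothetical summary of a Pinot table for migration planning."""
    name: str
    is_upsert: bool
    retention_days: int


def needs_rebalance(table):
    """Rebalance upsert tables and tables whose data outlives six months."""
    return table.is_upsert or table.retention_days > SIX_MONTHS_DAYS


tables = [
    TableInfo("orders_upsert", is_upsert=True, retention_days=30),
    TableInfo("trip_events", is_upsert=False, retention_days=90),
    TableInfo("audit_log", is_upsert=False, retention_days=365),
]

to_rebalance = [table.name for table in tables if needs_rebalance(table)]
print(to_rebalance)
```

Tables that fall outside the filter converge on the new layout on their own as older segments age out, which is what keeps data movement small.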

Speeding Up Release Cycles with Isolation-Group-Based Policies

Beyond just fault resilience, the adoption of isolation groups has a direct and positive impact on our operational efficiency, specifically in accelerating release and restart processes. The new isolation-group-based claim policy and release pipeline allow for more aggressive, yet safe, parallel operations.

Isolation-Group-Based Claim Policy

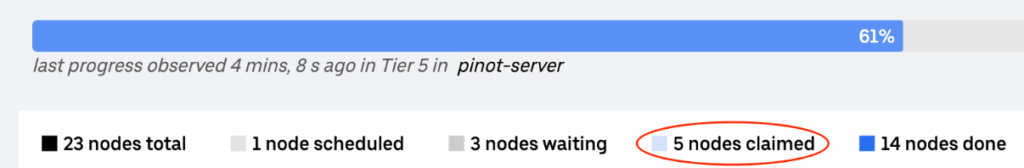

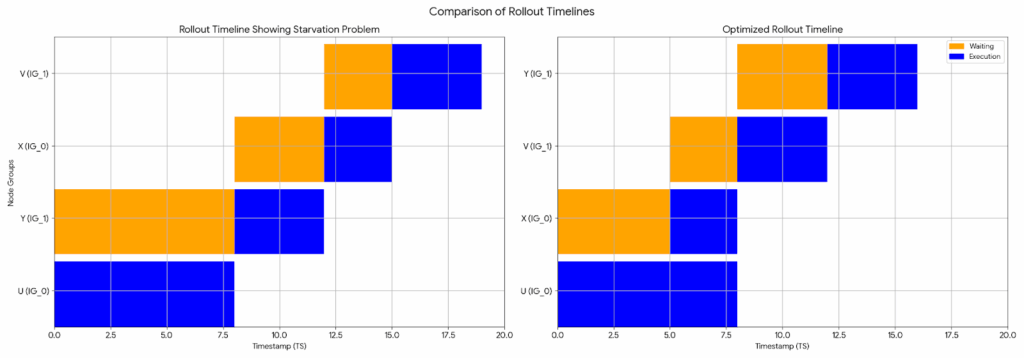

Previously, we’d only restart one node at a time to minimize risk. With the new policy, we can work on multiple nodes at the same time, as long as they’re in the same isolation group. This has significantly sped up our rollout process. For example, in a test with a 20-node cluster with medium disk size, increasing the number of parallel operations from 1 to 5 reduced the rollout time from 255 minutes to 88 minutes. In production environments, the rollout time dropped from roughly a week to 1 day for the largest fleets, which are created for the Uber internal logging use case. Figure 4 demonstrates the rollout parallelism with the isolation-group-based claim policy.

The rate-limiting mechanism can be conceptualized as a distributed semaphore. Each node operation goes through Uber’s centralized Odin Accounter service. We perform the following hierarchical rate-limiting check:

- Only one isolation group can be worked on at a time in each Odin cluster (corresponding to a Pinot tenant).

- Each isolation group has at most X concurrent operations. The value X depends on the tenant size and the configurable up threshold.

In addition to rate limiting, the Odin Accounter service performs health checks on each technology. To prevent widespread query failures, we reject any node claims if we detect that a node in a neighboring isolation group is unhealthy. This proactive measure prevents a cascading failure that could take down the rest of the online replicas.
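The two rate-limiting rules and the health check can be sketched together as a single claim decision. The Python below is a single-process simplification of what would be a distributed semaphore in practice. `ClusterState`, `try_claim`, and the node names are hypothetical, and "neighboring" is interpreted here as any other isolation group.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """In-memory stand-in for the state a claim service would track per cluster."""
    max_concurrent_per_group: int                      # the "X" limit
    in_progress: dict = field(default_factory=dict)    # node -> isolation group
    unhealthy: dict = field(default_factory=dict)      # node -> isolation group


def try_claim(state, node, group):
    """Grant the claim only if every hierarchical check passes."""
    active_groups = set(state.in_progress.values())
    # Rule 1: only one isolation group may be worked on at a time.
    if active_groups and active_groups != {group}:
        return False
    # Rule 2: at most X concurrent operations inside that isolation group.
    if len(state.in_progress) >= state.max_concurrent_per_group:
        return False
    # Health check: reject if any node in another isolation group is unhealthy,
    # since taking this node down could leave no healthy replica.
    if any(other != group for other in state.unhealthy.values()):
        return False
    state.in_progress[node] = group
    return True


state = ClusterState(max_concurrent_per_group=2)
results = [
    try_claim(state, "n1", 0),  # first claim in group 0
    try_claim(state, "n2", 0),  # second claim, still within the limit
    try_claim(state, "n3", 0),  # exceeds the per-group limit
    try_claim(state, "n4", 1),  # different group while group 0 is active
]
print(results)
```

A production version would also need to release claims when operations finish and to make the check-and-claim step atomic across callers.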

Isolation-Group-Based Release Pipeline

The default Odin release pipeline is unaware of isolation groups and randomly picks nodes into the release waiting queue while other nodes are rolling out. Because of this, although the rate-limiting policy speeds things up, the order in which isolation groups are rolled out is unpredictable and can be interleaved. A bad software update could therefore affect all isolation groups and all data replicas. It could also lead to starvation, where some nodes wait longer than necessary, slowing down the overall release.

To fix this, we created a release pipeline based on isolation groups. In this new pipeline, each phase of the release focuses on nodes from a single isolation group, and the isolation groups are processed one after another. We also added a baking time after completing the rollout of the first isolation group to check for any performance issues.
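The phase ordering can be sketched as a simple planner. This Python example is illustrative only: `plan_release`, the phase tuples, and the 30-minute bake value are assumptions, not the Odin pipeline's real interface.

```python
def plan_release(node_groups, bake_minutes):
    """Order a release by isolation group, baking after the first group.

    node_groups maps node name -> isolation group ID.
    Returns a list of (action, isolation_group, detail) phases.
    """
    phases = []
    groups = sorted(set(node_groups.values()))
    for index, group in enumerate(groups):
        nodes = sorted(n for n, g in node_groups.items() if g == group)
        # Each rollout phase touches nodes from exactly one isolation group.
        phases.append(("rollout", group, nodes))
        # Pause after the first group so regressions surface before the
        # remaining replicas receive the new build.
        if index == 0 and len(groups) > 1:
            phases.append(("bake", group, bake_minutes))
    return phases


plan = plan_release({"n1": 0, "n2": 1, "n3": 0, "n4": 1}, bake_minutes=30)
for phase in plan:
    print(phase)
```

Because groups are never interleaved, a bad build caught during the bake phase has touched only one isolation group, leaving the replicas in the other groups on the previous version.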

Conclusion

Building zone failure resilience into Pinot has transformed how we operate our real-time analytics platform at Uber. By marrying Pinot’s pool-based instance assignment with Uber’s isolation groups, we’ve created a deployment model that naturally spreads data across zones, keeping queries and ingestion flowing even when a zone goes dark.

Rolling this out to hundreds of clusters was no small feat. Automated configuration management, granular migration APIs, and selective rebalancing let us strengthen resilience without disrupting live workloads—a balance that was essential for Tier-0 systems.

And the benefits don’t stop at reliability. Isolation-group-based policies have also sped up our release cycles, enabling safe parallel rollouts and more predictable deployments, all while safeguarding performance.

With this foundation in place, we’re now turning our attention to the next challenge—failure recovery. In our next blog, we’ll dive into the strategies we use to recover from different failure scenarios, and how we keep Pinot running smoothly even in the most adverse conditions.

Acknowledgments

We’d like to thank Xin Gao, former Uber Staff Engineer, for implementing pool registration. We also acknowledge the Uber Odin Platform, which provided many out-of-the-box features that supported this project.

Cover Photo Attribution: “Quantum Dots with emission maxima in a 10-nm step are being produced at PlasmaChem in a kg scale” by Antipoff is licensed under CC BY-SA 3.0.

Apache®, Apache Pinot™, Pinot™, Apache Kafka®, Apache Zookeeper™, and Apache Flink® are registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries. No endorsement by The Apache Software Foundation is implied by the use of these marks.

The Grafana Labs® Marks are trademarks of Grafana Labs, and are used with Grafana Labs’ permission. We are not affiliated with, endorsed or sponsored by Grafana Labs or its affiliates.

Kubernetes® and its logo are registered trademarks of The Linux Foundation® in the United States and other countries. No endorsement by The Linux Foundation is implied by the use of these marks.

Si Lao

Si is a Staff Software Engineer on the Real-Time Data team at Uber. He works on building a highly scalable, reliable streaming data infrastructure at Uber, including Apache Kafka® and Pinot.

Christina Li

Christina is a Senior Software Engineer on the Real-Time Analytics team at Uber. Her work focuses on the Pinot query stack.

Xuanyi Li

Xuanyi is a Senior Software Engineer on the Real-Time Analytics team at Uber. His work focuses on building Pinot infrastructure and developing the Apache Pinot project.

Yang Yang

Yang Yang is a Senior Staff Engineer who leads Uber’s Cache Platform. Previously, she was on the Real-Time Data team building a highly scalable, reliable streaming data infrastructure at Uber, including Kafka and Apache Flink®.

Ujwala Tulshigiri

Ujwala Tulshigiri is a Sr Engineering Manager on the Real-Time Analytics team within the Uber Data Org. She leads a team that builds a self-served, reliable, and scalable real-time analytics platform based on Apache Pinot to power various business-critical use cases and real-time dashboards at Uber.

Posted by Si Lao, Christina Li, Xuanyi Li, Yang Yang, Ujwala Tulshigiri