Adding Determinism and Safety to Uber IAM Policy Changes

18 September / Global

Introduction

In Uber’s production environment, a vast network of microservices, databases, web domains, and other assets interact with each other. Identity and Access Management (IAM) policies, which the asset owners manage, primarily govern these interactions. As the number of assets grows, so does the complexity of IAM policies, and managing these policies effectively and safely without disrupting production becomes a challenge.

The High Stakes of IAM Policy Changes

In one incident, an accidental IAM policy change made by the asset owner of a critical gateway service left Uber Eats customers unable to modify their orders. This shows that IAM policy changes can affect systems as significantly as code changes, yet they often lack the testing and safeguards that code changes go through.

The Need for Policy Simulation

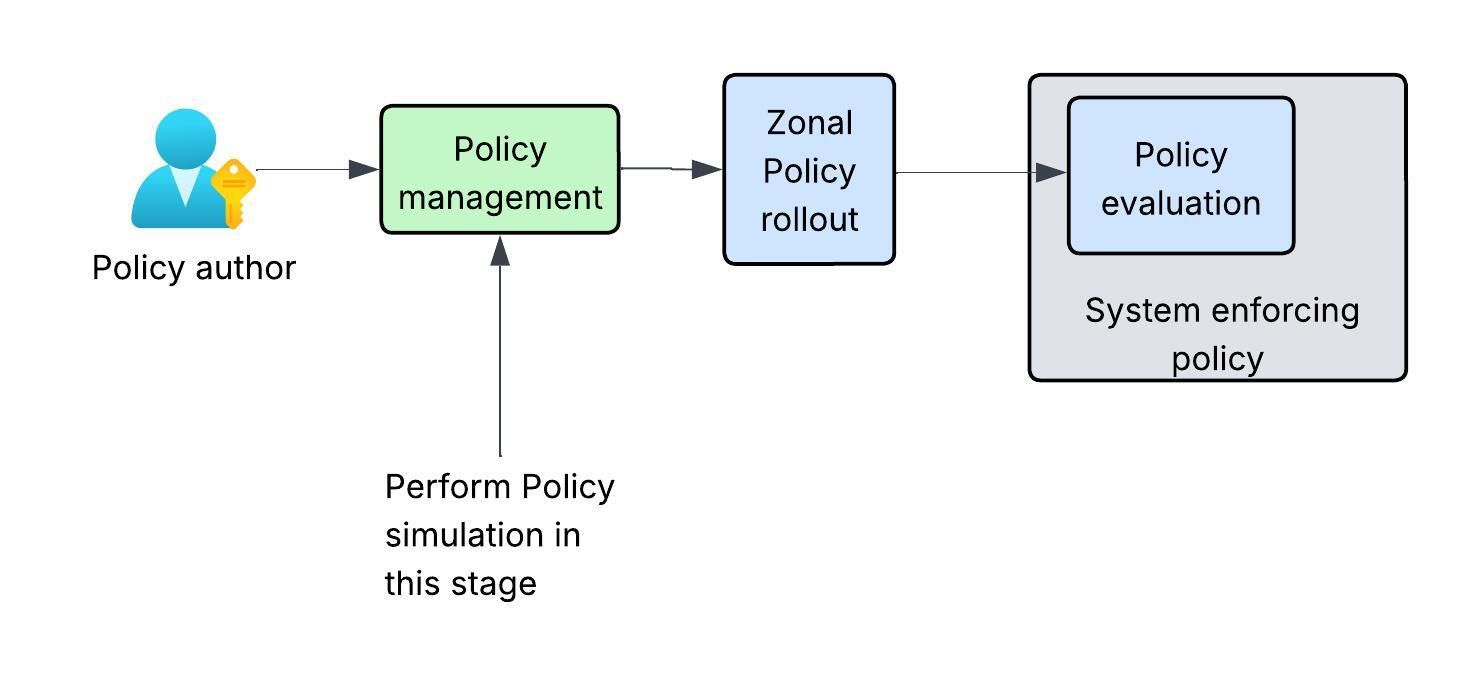

Incremental IAM policy deployment across availability zones has been widely adopted to contain such risks, but on its own it isn’t sufficient for IAM policy changes. Misconfigured rollback triggers or specific traffic patterns during rollout can let a problematic policy go undetected until it’s fully deployed, causing widespread outages.

To address this problem at an even earlier stage, we introduced a Policy Simulator. This tool lets policy authors preview the impact of their proposed changes in real time, while the change is being made. By understanding the exact effects of a policy change beforehand, authors can confidently deploy the change or choose to stop it.

At Uber, policy changes are made centrally via a portal called Unified Security Console. Every month, Uber employees manually make a large volume of policy changes through it. Approximately 10% of these changes are grant removals, which can cause an outage in production if not done correctly. A large portion of the remaining 90% could unintentionally grant more privileges than intended. It’s therefore crucial to assess the impact of a change before applying it.

This blog delves into the Policy Simulator tool and shows how it makes IAM policy changes safer.

Architecture

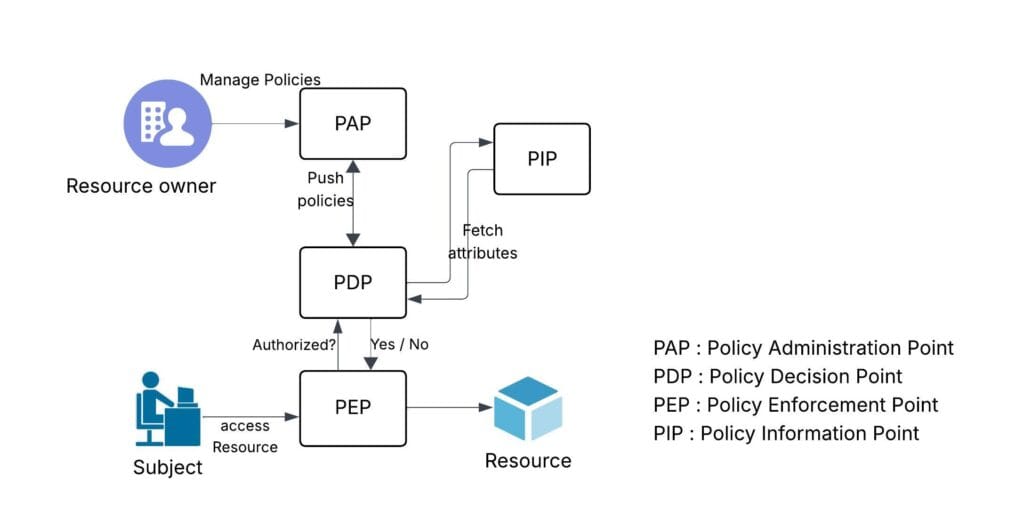

Authorization systems generally contain several key components. A PAP (Policy Administration Point) is where resource owners can create policies for their resources. Those policies are then pushed to a PDP (Policy Decision Point), which is the system responsible for evaluating policies at runtime. In some cases, the PDP may need to pull runtime attributes from external PIP (Policy Information Point) systems to make a final decision. When a subject attempts to access a resource, the access control system, called the PEP (Policy Enforcement Point), checks with the PDP to determine if the subject has the necessary authorization.
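To make the division of responsibilities concrete, here is a minimal Python sketch of how the four components interact. Every class, method, and field name is hypothetical, and the policy format (sets of `(subject, action, resource)` grants) and the `status` attribute rule are simplifying assumptions for illustration; they are not Uber’s actual interfaces.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet, Iterable, Optional, Tuple

# Hypothetical grant format: (subject, action, resource).
Grant = Tuple[str, str, str]


@dataclass
class PolicyDecisionPoint:
    """PDP: evaluates the policies it has received when a PEP asks."""
    policies: Dict[str, FrozenSet[Grant]] = field(default_factory=dict)
    # Optional PIP lookup that returns runtime attributes for a subject.
    pip: Optional[Callable[[str], Dict[str, str]]] = None

    def load(self, namespace: str, grants: FrozenSet[Grant]) -> None:
        self.policies[namespace] = grants

    def decide(self, subject: str, action: str, resource: str) -> bool:
        attributes = self.pip(subject) if self.pip else {}
        # Illustrative attribute rule: disabled subjects are always denied.
        if attributes.get("status") == "disabled":
            return False
        return any((subject, action, resource) in grants
                   for grants in self.policies.values())


class PolicyAdministrationPoint:
    """PAP: where owners author policies; it pushes them to every PDP."""

    def __init__(self, pdps: Iterable[PolicyDecisionPoint]):
        self.pdps = list(pdps)

    def publish(self, namespace: str, grants: Iterable[Grant]) -> None:
        snapshot = frozenset(grants)  # materialize once for all PDPs
        for pdp in self.pdps:
            pdp.load(namespace, snapshot)


def enforce(pdp: PolicyDecisionPoint, subject: str, action: str, resource: str) -> str:
    """PEP: intercepts an access and asks the PDP for a decision."""
    return "ALLOW" if pdp.decide(subject, action, resource) else "DENY"
```

For example, after `pap.publish("gateway", {("svc-web", "call", "gateway/modifyOrder")})`, calling `enforce(pdp, "svc-web", "call", "gateway/modifyOrder")` returns `"ALLOW"`, while any subject without a matching grant, or one the PIP reports as disabled, gets `"DENY"`.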

The IAM policies broadly fall into these categories:

- Data access policies, which control access to data at rest.

- Network access policies, which control access to services and endpoints.

- Web domain policies, which control access to Uber’s internal web domains.

- Custom business authorization policies, which each system can create to secure its business resources.

At Uber, these policies for the production environment are managed through a centralized management system, which serves as the PAP (Policy Administration Point). The policies are distributed to the systems where they’re evaluated and enforced on runtime accesses. The PEP varies depending on the policy being enforced. For example, data access policies are enforced by a storage access manager. Network access policies are enforced either by an Envoy proxy deployed fleet-wide on Uber hosts or by the destination system using authfx, a library built in-house.

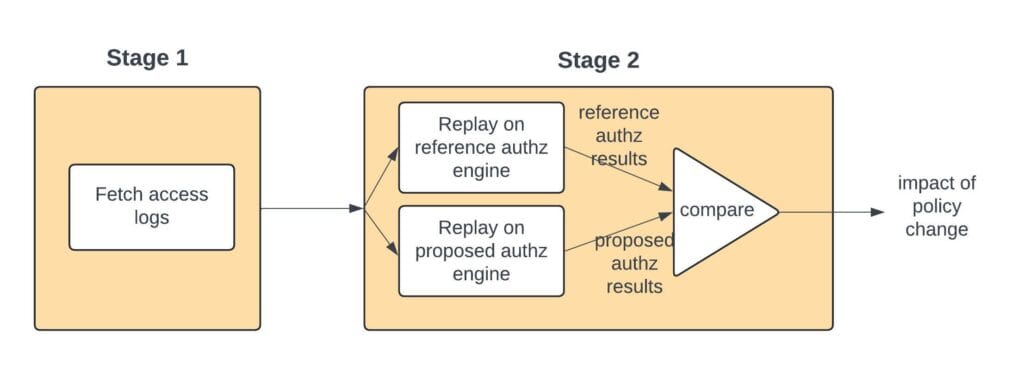

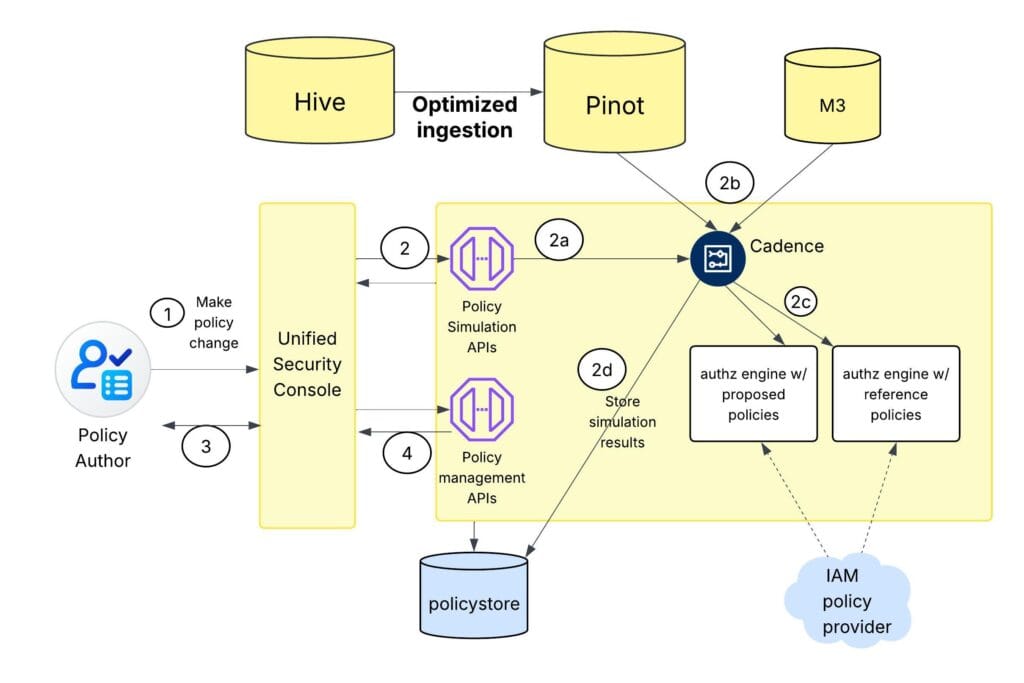

The policy simulation feature is built into the PAP service and involves two stages (see Figure 3).

In the first stage, we fetch access logs from the different PEPs (Policy Enforcement Points). These logs tell us which subjects performed which actions on which target resources.

In the second stage, we replay the access logs on two local authorization engines. One is built with the reference set of policies as they currently exist on the PEP, and the other with the proposed set of policies. Because both authorization engines are built locally on the PAP service, no policies are deployed to any system other than the one where the simulation runs. Replaying the accesses on both engines yields the difference in access-control results for the proposed change, which is the impact the change would have if deployed to the target system (PEP).
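The two-engine replay can be sketched in a few lines of Python. This is a simplified illustration, not Uber’s implementation: it assumes a policy is just a set of exact-match `(actor, action, resource)` grants, whereas real policies also involve wildcards, resource hierarchies, and richer rules. `LocalEngine`, `AccessDiff`, and `simulate` are hypothetical names.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Tuple

Access = Tuple[str, str, str]  # hypothetical (actor, action, resource)


@dataclass(frozen=True)
class LocalEngine:
    """An in-process authorization engine built on the PAP; nothing is deployed."""
    grants: FrozenSet[Access]

    def allows(self, access: Access) -> bool:
        return access in self.grants


@dataclass(frozen=True)
class AccessDiff:
    access: Access
    before: bool  # decision under the reference policies
    after: bool   # decision under the proposed policies


def simulate(reference: Iterable[Access], proposed: Iterable[Access],
             access_logs: Iterable[Access]) -> List[AccessDiff]:
    """Replay logged accesses on both engines and keep only changed decisions."""
    reference_engine = LocalEngine(frozenset(reference))
    proposed_engine = LocalEngine(frozenset(proposed))
    diffs = []
    for access in access_logs:
        before = reference_engine.allows(access)
        after = proposed_engine.allows(access)
        if before != after:
            diffs.append(AccessDiff(access, before, after))
    return diffs
```

If the proposed policy drops a `modifyOrder` grant that appears in the logs, `simulate` returns a single `AccessDiff` with `before=True, after=False` for that access, which is exactly the kind of change that caused the outage described earlier.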

Next, we describe each of the stages and components in detail.

Stage 1: Fetching Access Logs

The goal of this stage is to fetch the relevant access logs from the policy enforcement points to which a policy change would be deployed. The logs serve as input to the next stage, where the proposed policy change is evaluated against the given accesses.

Before discussing the details of how the access logs are fetched for policy simulation, here’s a short overview of how access logs are emitted at Uber from the target systems.

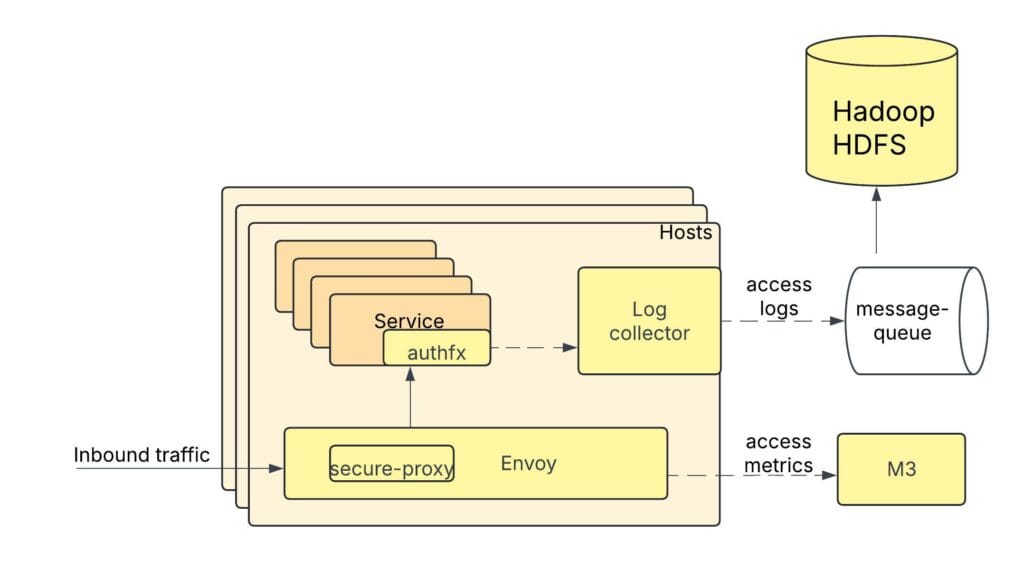

Let’s take a common scenario: service-to-service access. When traffic is routed from one service to another, the Envoy proxy intercepts it before it reaches the destination service. A component within Envoy, secure-proxy, enforces the coarse-grained network access policies (service-level access-control policies) and emits authorization enforcement metrics into M3, Uber’s in-house metrics system.

In addition to authorization enforcement at secure-proxy, some services at Uber also perform authorization within the service. These policies protect finer-grained resources, such as endpoints within a service or business objects specific to the service. The authfx library, which services use for this policy enforcement, emits access logs that a log collector component collects and writes to an Apache Hive™ table via a message queue. We store and use the last 30 days of access logs for fine-grained access and the last 90 days for coarse-grained access.

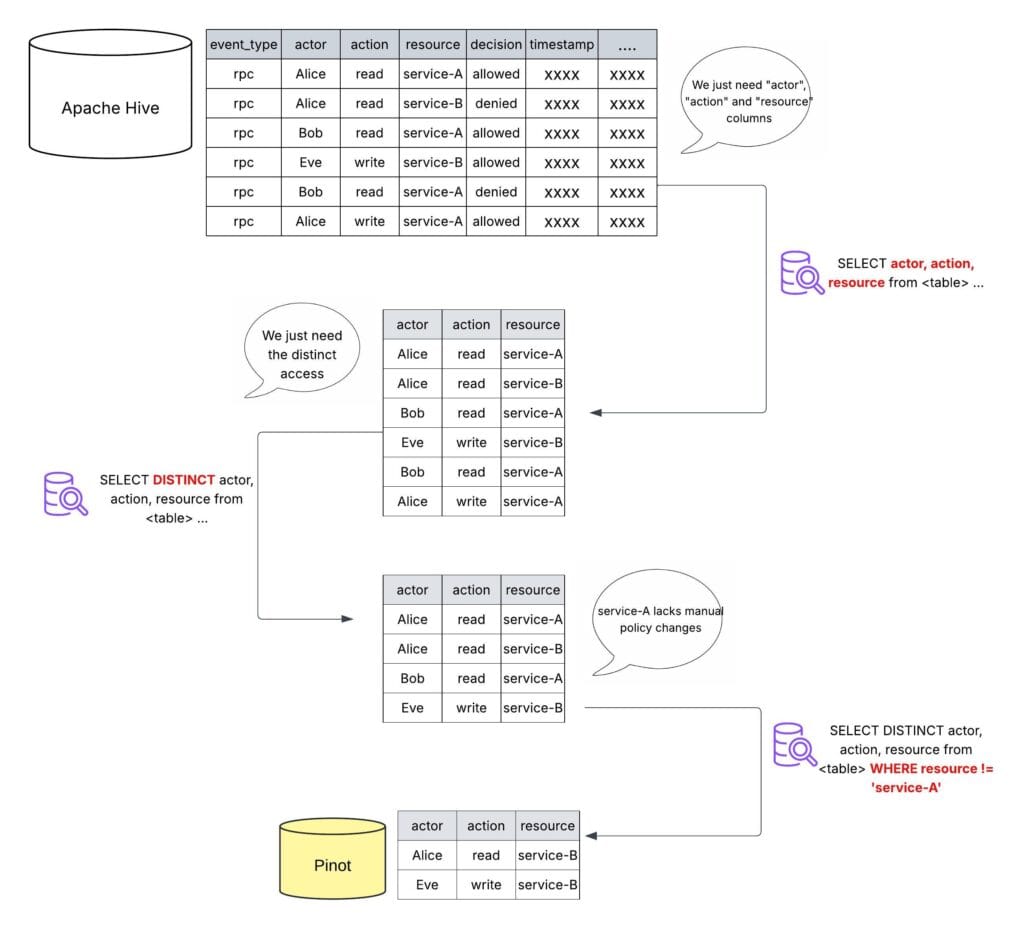

One of the goals of the policy simulation feature is to provide real-time impact analysis: when a policy author makes a policy change, we want the entire policy simulation to complete in under a minute. Because of the high volume of traffic between services at Uber, access logs can be massive, and retrieving that much data from a Hive table within seconds isn’t possible. To address this, we ingest the logs from the Hive table into an Apache Pinot™ table, which provides low-latency fetches.

To ensure optimal performance and maintain the efficiency of our Pinot table, we’ve implemented a series of strategic optimizations within our ingestion pipeline. These optimizations prioritize relevant data, minimize redundancy, and exclude information that doesn’t directly contribute to the core functionality of our policy simulation.

Here are some of the implemented optimizations:

- Prioritization of key data. We extract and store only the essential components from the access logs—the actor, action, and resource. These elements provide the fundamental context necessary to understand and evaluate policy changes. Additional details, while potentially useful in other contexts, are omitted to optimize storage and query performance.

- Distinct occurrences. Rather than recording every instance of an access, we focus on capturing the unique combinations of actor, action, and resource. This approach acknowledges that the mere presence of an access within a given time frame is often sufficient for policy analysis. The precise frequency and timestamp of each access, while potentially relevant in specific scenarios, aren’t universally required and can be computationally expensive to store and query. By focusing on distinct occurrences, we streamline the data set and improve overall efficiency.

- Exclusion of irrelevant data. We’ve implemented a filtering mechanism to exclude access logs that don’t align with the core use case of policy simulation. Specifically, we discard logs for services that lack manual policy changes or operate exclusively with automated policy changes. By focusing on services with manual policy changes, we ensure that the data ingested is directly relevant to the core objective of evaluating the impact and safety of human-driven policy decisions.
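The three optimizations above can be expressed as a single pass over the raw rows. The sketch below assumes a hypothetical row shape (`RawAccessLog`) and a precomputed set of services that receive manual policy changes; in practice this logic would live inside the ingestion pipeline rather than in standalone application code.

```python
from dataclasses import dataclass
from typing import Iterable, Set, Tuple


@dataclass(frozen=True)
class RawAccessLog:
    """Hypothetical shape of one raw access log row in the Hive table."""
    service: str
    actor: str
    action: str
    resource: str
    timestamp: int
    request_id: str


def prepare_for_pinot(rows: Iterable[RawAccessLog],
                      manually_managed: Set[str]) -> Set[Tuple[str, str, str]]:
    """Apply the three ingestion optimizations to rows bound for Pinot."""
    distinct: Set[Tuple[str, str, str]] = set()
    for row in rows:
        # Exclusion of irrelevant data: skip services whose policies are
        # never changed manually.
        if row.service not in manually_managed:
            continue
        # Prioritization of key data: keep only actor, action, and resource.
        # Distinct occurrences: the set collapses repeats, so timestamps,
        # request IDs, and access counts are dropped.
        distinct.add((row.actor, row.action, row.resource))
    return distinct
```

Two identical accesses at different timestamps collapse into one tuple, and rows from a service managed only by automation never reach Pinot at all.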

Some of these optimizations are already implicit in the M3 metrics used as access logs for policy simulation. Fetching these metrics from M3 is fast enough to stay well within the latency requirements of policy simulation, so no further enhancements are needed there.

To optimize the fetching of access logs for policy simulation, we consider the nature of the policy change. For changes solely within the coarse-grained, service-level policies of the secure-proxy, we fetch only M3 access logs. If a policy change targets a specific service, we retrieve access logs exclusively for that service. We analyze the resources and actions impacted by the policy change and narrow down the access logs to those specific resources and their hierarchical counterparts. Based on the type of grant modified in the policy, we identify whether human actors or workloads are affected and fetch access logs accordingly.
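The narrowing rules above amount to turning a policy change into a targeted query. The sketch below is hypothetical throughout: the `PolicyChange` and `AccessLogQuery` shapes are invented for illustration, it assumes "hierarchical counterparts" means a resource’s ancestors in a `/`-separated path, it reuses the 90-day and 30-day retention periods mentioned earlier as lookback windows, and it queries only Pinot for non-coarse changes, whereas the real system may combine sources.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class PolicyChange:
    """Hypothetical summary of what a proposed policy change touches."""
    coarse_grained_only: bool       # only secure-proxy service-level policies
    target_service: Optional[str]   # set when the change targets one service
    resources: Tuple[str, ...]      # e.g. "gateway/orders/modify"
    actions: Tuple[str, ...]
    actor_type: str                 # "human" or "workload", from the grant type


@dataclass(frozen=True)
class AccessLogQuery:
    source: str                     # "m3" or "pinot"
    lookback_days: int
    service: Optional[str]
    resources: Tuple[str, ...]
    actions: Tuple[str, ...]
    actor_type: str


def with_ancestors(resource: str) -> Tuple[str, ...]:
    """'a/b/c' -> ('a', 'a/b', 'a/b/c')."""
    parts = resource.split("/")
    return tuple("/".join(parts[: i + 1]) for i in range(len(parts)))


def plan_query(change: PolicyChange) -> AccessLogQuery:
    # Coarse-grained-only changes read M3 metrics; this sketch sends
    # everything else to Pinot. Lookbacks mirror the retention periods.
    if change.coarse_grained_only:
        source, lookback = "m3", 90
    else:
        source, lookback = "pinot", 30
    resources = tuple(sorted({r for res in change.resources for r in with_ancestors(res)}))
    return AccessLogQuery(source, lookback, change.target_service, resources,
                          tuple(sorted(set(change.actions))), change.actor_type)
```

A change to `gateway/orders/modify` therefore fetches logs for `gateway`, `gateway/orders`, and `gateway/orders/modify` only, scoped to the target service and actor type, instead of scanning every access in the table.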

Stage 2: Replaying Access Logs

The goal of this stage is to identify the accesses affected by the proposed policy change. To achieve this, we built two authorization engines. The first, called the reference authorization engine, subscribes to the same set of policies that the policy-enforcing service (PEP) subscribes to. The second, the proposed authorization engine, subscribes to the same set of policies as the PEP and applies the proposed changes on top of them.

The accesses from stage 1 are replayed on both authorization engines. The reference authorization engine produces reference authorization results, which represent the access-control state that currently exists on the PEP. The proposed authorization engine produces proposed authorization results, which represent the access-control state that would exist on the PEP if the author’s proposed changes were rolled out. Comparing these results shows the impact of rolling out the proposed change to the policy enforcement point.

Note that replaying the access logs on the reference authorization engine is necessary to establish a baseline for comparison with the proposed authorization results. Although the access logs already contain an authorization result, that result reflects the policies in effect at the time of the access, which may differ from the current set of policies.
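The following sketch shows why the baseline is recomputed rather than read from the logs, and how a diff splits into the two risks discussed in the introduction: grant removals that could cause outages, and changes that grant more than intended. The report keys and the exact-match grant model are hypothetical simplifications.

```python
from typing import Dict, Iterable, List, Set, Tuple

Access = Tuple[str, str, str]  # hypothetical (actor, action, resource)


def impact_report(reference: Set[Access], proposed: Set[Access],
                  logged: Iterable[Tuple[Access, bool]]) -> Dict[str, List[Access]]:
    """Compare proposed decisions against a freshly computed baseline."""
    report: Dict[str, List[Access]] = {"newly_denied": [], "newly_allowed": []}
    for access, _historic_decision in logged:
        # The logged decision reflects the policies in force when the access
        # happened, so it is ignored and the baseline is recomputed instead.
        before = access in reference
        after = access in proposed
        if before and not after:
            report["newly_denied"].append(access)   # potential outage
        elif after and not before:
            report["newly_allowed"].append(access)  # potential over-grant
    return report
```

Suppose an access by `svc-b` was logged as denied last week, but a grant added since then allows it, and the proposal keeps that grant. Comparing against the logged decision would wrongly flag `svc-b` as newly allowed; recomputing the baseline correctly reports no change for it.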

Due to the volume of policy changes, many policy simulations need to run simultaneously. Each simulation involves numerous steps, and maintaining state while triggering the correct events at the appropriate times is crucial for success. To make this logic resilient and scalable, we designed it as a workflow on the Cadence workflow orchestration platform, which provides the durability, reliability, and fault tolerance needed to execute every step of the replay logic accurately.

Figure 6 shows the complete process, from the moment a policy author initiates a policy change to the point where the updated policy is saved in the policy store.

- A policy author initiates a policy change within the USC (Unified Security Console).

- Before persisting the change, the USC uses Policy Simulation APIs to gauge potential impacts.

- A Cadence workflow is triggered to run the policy simulation, potentially alongside other concurrent simulations.

- The workflow pulls the access logs relevant to the proposed change from Pinot, which is optimized for quick retrieval.

- Two authorization engines are created: one with current policies and one with the proposed change. Access logs are replayed on both to compare authorization results and identify differences.

- A report on the potential impact of the proposed policy change is generated and presented to the policy author. The policy author reviews the report and decides whether to proceed with or abandon the change.

- If the author proceeds, the USC calls the policy management API to save the change to the policy store, where it’s then rolled out to the enforcement system.
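The simulation steps in this flow can be sketched as a workflow whose step results are recorded as they complete, so a retried run resumes instead of starting over. `ToyWorkflow` is a hypothetical in-memory stand-in for the durable execution Cadence provides; it is not the Cadence client API, and a real Cadence workflow persists its history server-side so it can resume after a worker failure. The sketch covers only fetching, replaying, and reporting; the author’s decision and the save to the policy store would follow it.

```python
from typing import Any, Callable, Dict, Set, Tuple

Access = Tuple[str, str, str]  # hypothetical (actor, action, resource)


class ToyWorkflow:
    """Records each completed step's result so a retried run skips it.

    A toy illustration of durable execution, not the Cadence client API.
    """

    def __init__(self, history: Dict[str, Any]):
        self.history = history  # a real engine would persist this durably

    def step(self, name: str, fn: Callable[[], Any]) -> Any:
        if name not in self.history:  # run only steps not yet recorded
            self.history[name] = fn()
        return self.history[name]


def simulation_workflow(wf: ToyWorkflow, current: Set[Access], proposed: Set[Access],
                        fetch_logs: Callable[[], Set[Access]]) -> Dict[str, Set[Access]]:
    logs = wf.step("fetch_access_logs", fetch_logs)
    before = wf.step("replay_reference", lambda: {a for a in logs if a in current})
    after = wf.step("replay_proposed", lambda: {a for a in logs if a in proposed})
    return wf.step("build_report", lambda: {
        "newly_denied": before - after,
        "newly_allowed": after - before,
    })
```

Running `simulation_workflow` twice with the same `history` dictionary calls `fetch_logs` only once: the second run finds every step already recorded and returns the stored report, which is the property that lets a crashed simulation resume cheaply.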

Use Cases at Uber

As described earlier, policy changes at Uber are made centrally through the Unified Security Console, and that is where we added the capability to run policy simulations on policy changes.

A typical IAM policy change life cycle consists of three stages:

- Policy change. The policy author creates or modifies the policy within the Unified Security Console. During this stage, if the change involves a grant removal, a background policy simulation (dry run) is automatically initiated. The author can either proceed with submitting the change or wait (approximately 30 seconds) for an access changes report, which details the potential impact on target systems.

- Policy change review. A designated reviewer, typically the policy namespace administrator, examines the proposed changes. If available, the access changes report from the previous stage is presented alongside the policy modification to aid in the review process and enhance confidence. The reviewer also has the option to refresh the dry run to obtain an updated access changes report, as the initial results may become outdated due to other policy changes affecting the same assets. Policy reviewers can select multiple pending policy changes and initiate a dry run to better understand the combined impact of all upcoming changes.

- Policy rollout. Once the reviewer approves the policy changes, the updated policies are deployed to the designated target systems.
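Two behaviors in this life cycle are easy to state precisely: the background dry run starts automatically only for grant removals, and a reviewer can preview several pending changes together. The sketch below models both under the assumption that a pending change is a pair of added and removed grant sets; `PendingChange`, `auto_dry_run`, and `combined_policy` are hypothetical names.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Set, Tuple

Grant = Tuple[str, str, str]  # hypothetical (actor, action, resource)


@dataclass(frozen=True)
class PendingChange:
    added: FrozenSet[Grant]
    removed: FrozenSet[Grant]


def auto_dry_run(change: PendingChange) -> bool:
    """Policy change stage: start a background dry run if any grant is removed."""
    return bool(change.removed)


def combined_policy(current: Set[Grant], changes: Iterable[PendingChange]) -> Set[Grant]:
    """Review stage: apply pending changes in order to preview their joint effect."""
    result = set(current)  # copy so the live policy is left untouched
    for change in changes:
        result -= change.removed
        result |= change.added
    return result
```

The combined policy can then be fed into the same replay as a single proposed policy set, so interactions between changes, such as one change removing a grant that another relies on, appear in one access changes report.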

This process of running policy simulations in both the policy change and policy change review stages is analogous to performing unit and integration tests during local development and within a CI/CD pipeline for code changes.

Comparison with Other Industry Solutions

Cloud providers such as AWS® (Amazon Web Services®) and GCP™ (Google Cloud Platform™) offer IAM policy simulators, each with subtle differences in their capabilities. The AWS IAM policy simulator is primarily used for testing and troubleshooting existing policies, but can also be used to test new policies. This allows administrators to confirm that their policies function as intended and find any unintended access that may have been granted.

In contrast, GCP’s IAM policy simulator, like our solution, focuses primarily on previewing the effects of proposed policy changes on past access attempts. This is essential for understanding the potential ramifications of policy modifications before they’re applied in a live environment. It lets administrators assess whether the proposed changes would have blocked or allowed specific actions attempted in the past, giving a clear picture of the impact on security and access control.

Uber’s custom-built policy simulator is designed to seamlessly integrate with Uber’s Unified Security Console. This integration allows for streamlined testing of policy changes within Uber’s production environment, minimizing the risk of unexpected outcomes and ensuring that security policies are continuously aligned with the organization’s changing requirements.

While all three platforms offer IAM policy simulation, the depth and breadth of their functionalities differ. AWS focuses more on validating existing policies. GCP focuses more on previewing the impact of proposed changes. Uber’s solution integrates directly into Uber’s security console for a streamlined and efficient policy management workflow.

What’s Next

ABAC (Attribute-Based Access Control) policy evaluation requires fetching the necessary attributes at runtime and evaluating the policy based on their values. The PAP (Policy Administration Point), where the policy simulation feature is built, may not have access to these attribute stores. We’ll explore ways to fetch the attributes needed to simulate a change to an ABAC policy so that we can extend policy simulation support to ABAC policies.

Policy authors can currently inspect simulation results manually to determine whether a policy change is safe. We want to extend this capability by adding automation to the life cycle, allowing policy authors to define a set of policy unit tests that must pass for each policy change. For example, if a service owner wants to ensure that an important caller is never removed, this requirement can be encoded in a policy unit test that runs for every policy change.
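Since policy unit tests are still future work, the following is only one possible shape for them, sketched under the assumption that a test is a named predicate over the proposed grant set. `PolicyTest`, `caller_never_removed`, and `run_policy_tests` are hypothetical names, and the example encodes the "important caller is never removed" rule from the text.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple

Grant = Tuple[str, str, str]  # hypothetical (actor, action, resource)


@dataclass(frozen=True)
class PolicyTest:
    name: str
    check: Callable[[Set[Grant]], bool]


def caller_never_removed(caller: str, action: str, resource: str) -> PolicyTest:
    """Example from the text: an important caller must keep its access."""
    return PolicyTest(
        name=f"{caller} keeps {action} on {resource}",
        check=lambda grants: (caller, action, resource) in grants,
    )


def run_policy_tests(proposed: Set[Grant], tests: List[PolicyTest]) -> List[str]:
    """Return the names of failing tests; an empty list lets the change proceed."""
    return [test.name for test in tests if not test.check(proposed)]
```

A service owner would register `caller_never_removed("eats-web", "call", "gateway/modifyOrder")` once, and any proposed policy that drops that grant would fail the check before reaching review.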

Conclusion

A tool that can ensure the safety of policy changes is becoming increasingly essential as IAM policy enforcement expands to protect a growing number of assets in Uber’s production environment. Uber’s Policy Simulator tackles critical IAM policy management challenges by supplying real-time impact analyses of proposed changes before they are deployed. This proactive approach greatly improves the safety and predictability of policy rollouts, mitigating the risks of unintended outages and overly permissive authorizations.

The tool integrates with Uber’s Unified Security Console, streamlining policy management and enabling authors to make informed decisions with a clear understanding of potential consequences. Although there are limitations, specifically with ABAC policies, the Policy Simulator plays a crucial role in ensuring the stability and security of Uber’s complex production environment. In contrast to other industry solutions, Uber’s custom-built simulator offers a tailored approach that aligns with the organization’s specific needs and workflow, prioritizing seamless integration and efficient policy management.

Acknowledgments

Special thanks to Mithun “Matt” Mathew for his invaluable suggestions, which were instrumental in bringing this blog to fruition.

Cover Photo Attribution: “Cloud Security – Secure Data – Cyber Security” by Blue Coat Photos is licensed under CC BY-SA 2.0.

Apache®, Apache Hive™, Apache Kafka®, Apache Pinot™, and the star logo are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries. No endorsement by The Apache Software Foundation is implied by the use of these marks.

Amazon Web Services®, AWS®, and the Powered by AWS logo are trademarks of Amazon.com, Inc. or its affiliates.

Google Cloud Platform™ is a trademark of Google LLC and this blog post is not endorsed by or affiliated with Google in any way.


Avinash Srivenkatesh

Avinash Srivenkatesh is a Senior Software Engineer on the Core Security Engineering team at Uber. He works on the unified authorization platform for Uber’s services and infrastructure.

Zi Wen

Zi Wen is a Software Engineer on the Core Security Engineering team at Uber. He works on the unified authorization platform for Uber’s services and infrastructure.

Zakir Akram

Zakir Akram is an Engineering Manager II on the Core Security Engineering team at Uber. He manages the Unified Authorization Platform team for Uber’s services and infrastructure.

Posted by Avinash Srivenkatesh, Zi Wen, Zakir Akram