How Uber Serves over 150 Million Reads per Second from Integrated Cache with Stronger Consistency Guarantees

26 August / Global

Introduction

This is the second blog about the integrated cache infrastructure for Docstore, our online storage at Uber. In the previous blog, we presented CacheFront and outlined its core functionality, design principles, and architecture. In this blog, we share some exciting improvements we’ve implemented since then as we scaled up its footprint by almost 4 times.

CacheFront Recap

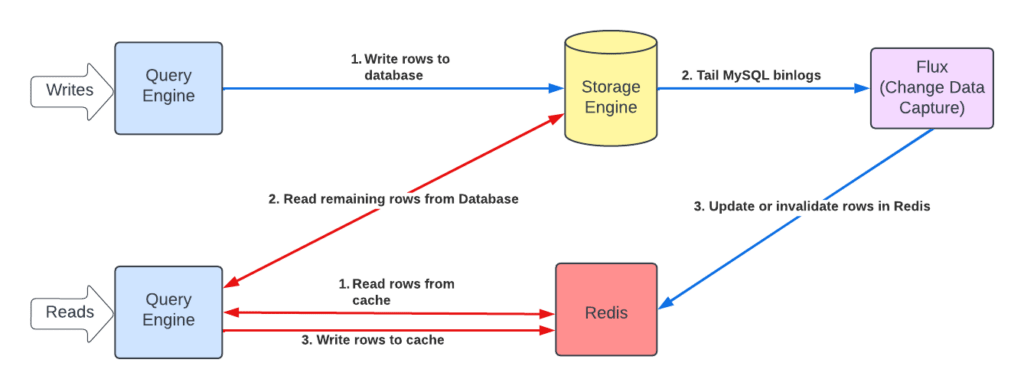

The previous blog has a detailed explanation of the overall Docstore architecture. For this blog, it’s sufficient to remember that CacheFront is implemented in our stateless query engine layer, which communicates with the stateful storage engine nodes to serve all read and write requests.

Reads

The reads are intercepted at the query engine layer to first try fetching the rows from Redis™. The rows that weren’t found in Redis are fetched directly from the storage engine and subsequently written to the cache to keep the cache warm. The read results are then merged and streamed back to the client.

Writes

Docstore generally supports two types of updates:

- Point writes: INSERT, UPDATE, DELETE queries that update a predefined set of rows, specified as the arguments of the DML query itself.

- Conditional updates: UPDATE or DELETE queries with a WHERE filter clause, where one or more rows can be updated based on the condition in the filter.

The writes aren’t intercepted at all, mainly because of the presence of conditional updates. Without knowing which rows are going to change or have changed as a result of running the DML query, we couldn’t know which cache entries require invalidation.

Waiting for rows to expire from Redis due to their time-to-live (TTL) value wasn’t good enough. So, we also rely on Flux, our change data capture (CDC) stateless service that, among its other responsibilities, tails MySQL binlogs to see which rows have been updated and asynchronously invalidates or populates the Redis cache, usually within a subsecond delay. When invalidating, instead of deleting from Redis, Flux writes special invalidation markers to replace whatever entries are currently cached.

Challenges

As the scale of our CacheFront deployment increased, it became clear to us that there was a growing appetite for a higher cache hit rate and stronger consistency guarantees. The eventually consistent nature of using TTL and CDC for cache invalidations became a blocker for adoption in some cases. Additionally, to make caching more effective, engineers were tempted to increase the TTL for extending the lifespan of the rows in the cache. This, in return, resulted in a higher amount of stale/inconsistent values served from cache, and the service owners weren’t happy about it.

These challenges were as old as the idea of caching itself. To reiterate from the previous blog:

“There are only two hard things in Computer Science: cache invalidation and naming things.”

– Phil Karlton

The recent improvements we’ve made to CacheFront have allowed us to prove Phil wrong—showing that it’s possible to achieve both. The rest of this blog describes our journey.

Reasons for Inconsistencies

In CacheFront the inconsistencies manifest as stale values, where the value served from the cache is outdated. That is, its timestamp is older than the value that could have been read from the underlying database.

There are several possible reasons for having stale values:

- Racing cache fills: In the presence of concurrent reads and writes of the same row, different query engine workers might attempt to cache different values for the row (depending on how these reads and writes interleave in time). This isn’t a problem in CacheFront since we use a Lua script to deduplicate new entries written to Redis with those that are currently cached. Our previous blog explains in more detail how we avoid caching stale values through the use of row timestamps.

- Cache invalidation delays: Since the Flux tailer is an async process, it has some inherent delay in invalidating or populating the cache. A write to the database with a quick subsequent read served by the cache may return the previously cached value, violating the desired read-own-writes guarantee. Once Flux catches up, it overwrites the stale value and fixes the problem. The inconsistencies might stay longer in cases when Flux restarts, for example, due to ongoing deployments, rebalancing of workers, or stream reconnections due to storage topology changes.

- Cache invalidation failures: A Redis node can be temporarily sluggish, unresponsive, or unreachable, causing the invalidation to not go through, despite multiple retries attempted. This leaves the cache inconsistent with the database until the row is finally evicted due to TTL expiration. The higher the TTL, the longer the inconsistency remains and the longer the stale values might be served.

- Cache refills from followers: If, upon a cache-miss, the cache is refilled by reading the row from a lagging follower node which didn’t yet have the chance to apply all the recent writes from its leader—a stale entry might be cached. CacheFront allows choosing the preferred cache-refill policy, creating a tradeoff:

- Refilling from the leader node for stronger consistency guarantees, or

- Refilling from followers, freeing up the leader, resulting in better read load distribution

Regardless of the cause, the inconsistencies typically manifest as:

- Read-own-writes inconsistency: A row that is read, cached, and then overwritten might still return the older stale value on subsequent reads, until it’s either finally invalidated or expired from cache.

- Read-own-inserts inconsistency: Similarly, when negative caching is enabled (that is, caching the absence of a row in the underlying database), as long as the negative entry is present in cache, the reads would return a “not-found” result for the row, possibly breaking assumptions of the service’s business logic. This type of inconsistency is typically more visible to service owners.

Magnitude of Cache Staleness

By now it’s probably obvious that the duration of inconsistencies, which the upstream callers can observe, is always bounded by the value of the TTL expiration that’s being used, and Flux only helps to shorten it. For this reason, the default TTL value we recommend when onboarding to CacheFront is just 5 minutes. Exceptions can be made if there’s a valid justification, and typically we leave this decision to the service owners, since staleness directly affects business. The desired TTL value to use on a cache refill due to a cache-miss can be specified via an optional header when making read requests to Docstore.

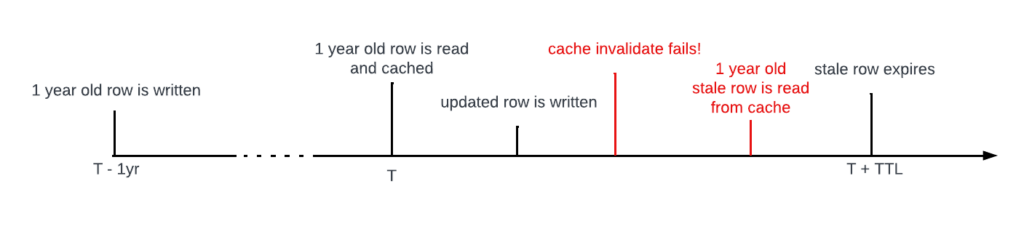

But there’s a more subtle and probably not widely understood problem underlying the case of cache invalidation failures. Even if the TTL being used is short, it’s still possible to observe a pretty high staleness value, if measured as the delta between the timestamp of the row stored in the database and the timestamp of the row currently cached. In fact, it’s theoretically unbounded … Why?

Imagine a write of some row to the database, which happened a whole one year ago. Then at time T, which is now, a read-modify-write is performed, and since the initial read is a cache miss, the row is read from the database and thereafter cached. But now suppose that when the modified row is written back to the database, the subsequent Flux invalidation just fails to go through. If the same row is read back again within the timespan of [T, T+TTL] – the one-year-old row will be returned on every read request. If, in an attempt to increase cache hit rate, the TTL used by some service owner was increased to one hour, then we’re at risk of returning a one-year-old value for the entire next hour! Unbounded, indeed.

Changes to Conditional Updates Flow

To recap, the main reason the cache couldn’t be invalidated synchronously with the write requests was because of conditional updates—it wasn’t possible to know which rows were updated in the transaction and needed invalidation. Through gradual and continuous improvements to our storage engine layer over the last few years, we modified the writes flow so it could provide us with the actual set of rows that got updated as part of each transaction.

Two design principles in particular enabled us to achieve that:

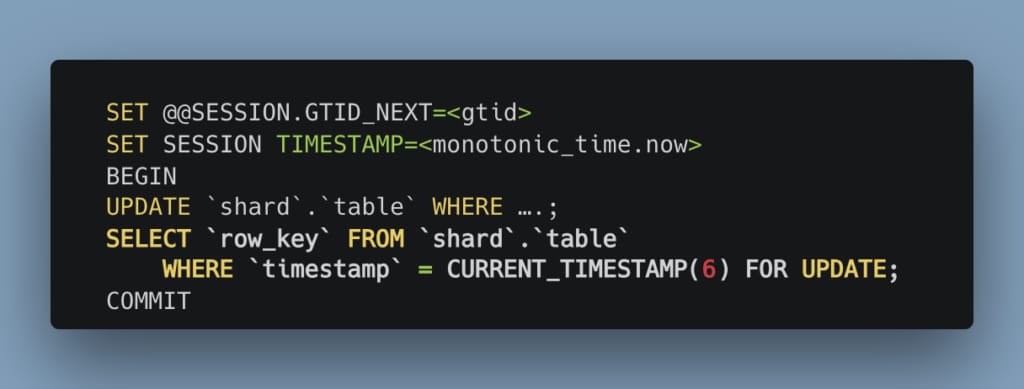

- First, we made sure all deletes are soft, setting a tombstone on the row. Those tombstoned rows are garbage-collected later by an async deletion job.

- Second, we switched to using strictly monotonic time for allocating MySQL® session timestamps (at microseconds precision), making them unique within a storage node and the Raft group it belongs to.

Now, with the guarantees that even deleted rows are still internally visible, and that each transaction can be uniquely identified through its session timestamp – we could select all rows that were updated within this transaction. When rows are updated, we set their update timestamp column to the transaction’s current, unique, and monotonic timestamp. Then, just before issuing a COMMIT, we read back all row keys that were modified within the transaction (including deletes, which are performed by updating a tombstone field rather than deleting the row). This is usually a very lightweight query, since at this point the data is anyways cached in the MySQL storage engine, and we also always maintain an index on the timestamp column for all our tables:

This approach enabled us to figure out exactly which rows have changed as part of conditional updates and return their keys back to the query engine. The example shown above is a slightly over-simplified version of the actual query in use. There’s a bit of additional secret sauce which allows us to combine multiple different types of update and delete queries within the same client-side-driven shard-local transaction, but the general concept is the same.

Note that point writes don’t require this kind of logic, since the to-be-updated row keys are anyway known as part of the update query arguments, so we can just return them as is.

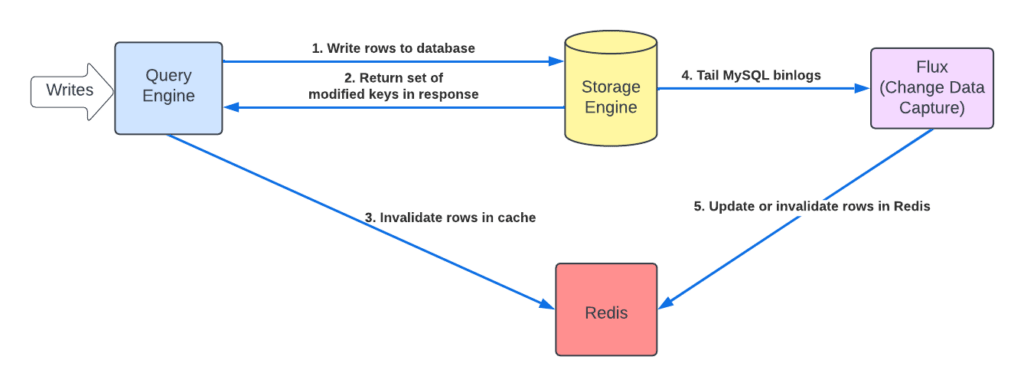

Improving Cache Invalidation Logic

Now that we could tell which rows were changed within each write transaction, we could improve our CacheFront invalidation logic. We intercepted each write API in the query engine layer, registering a callback that is invoked when a request to the storage engine returns. As part of the response to a write request, the storage engine was modified to also return the set of row keys affected by the transaction as well as the associated session timestamp. From this callback, we could now invalidate any previously cached entries in Redis, overwriting them with invalidation markers.

In the background, we still kept Flux running, tailing MySQL binlogs and asynchronously doing cache-fills. Having three different ways of populating and/or invalidating the cache—via TTL expirations, from Flux tailer, and now also directly from the write path of the query engine layer—proved to be a superior approach in terms of consistency.

There are few things worth noting about this new invalidation flow. Invalidations can be performed either synchronously or asynchronously. Synchronously means that the cache invalidation is done within the context of the request, before returning the status to the calling client. This adds a bit of extra latency to write requests, but is better suited for read-own-writes and read-own-inserts flows. Note that if the request succeeded but the corresponding cache invalidation failed, we still return a success status to the client (that is, we don’t fail the write request).

Asynchronously means that cache invalidation requests are queued to run outside the client request context. This avoids the additional extra latency to write requests, but provides slightly weaker consistency guarantees.

By also returning the session commit timestamp of the transaction from the storage engine, we could correctly deduplicate between the cache-fill entries and invalidation markers generated by the query engine and Flux tailer, respectively. Additionally, this allowed us to deprecate and completely remove the dedicated API mentioned in the previous blog, which we previously recommended for doing explicit cache invalidations of point writes. Since the invalidation timestamps were artificially generated using the current clock, subsequent cache fills weren’t succeeding, causing more cache misses than expected, up until the invalidation markers expired from the cache. Using the correct row timestamps, generated by the database in the storage engine layer, was paramount to attaining higher cache hit rates. On top of that, automatically invalidating cache on any type of write and without having to do that ever explicitly was a big win usability wise.

Finally, it’s worth mentioning that caching is more suitable for read-heavy use cases, where the reads-to-writes ratio is typically 20 or even 100. The extra cache invalidations we introduced were driven by write QPS and hence didn’t significantly increase the overall load, and, wherever needed, we could just easily upscale our query engine layer and/or Redis clusters to absorb the additional overhead.

Cache Inspector

“You can’t improve what you can’t measure.”

– Peter Drucker

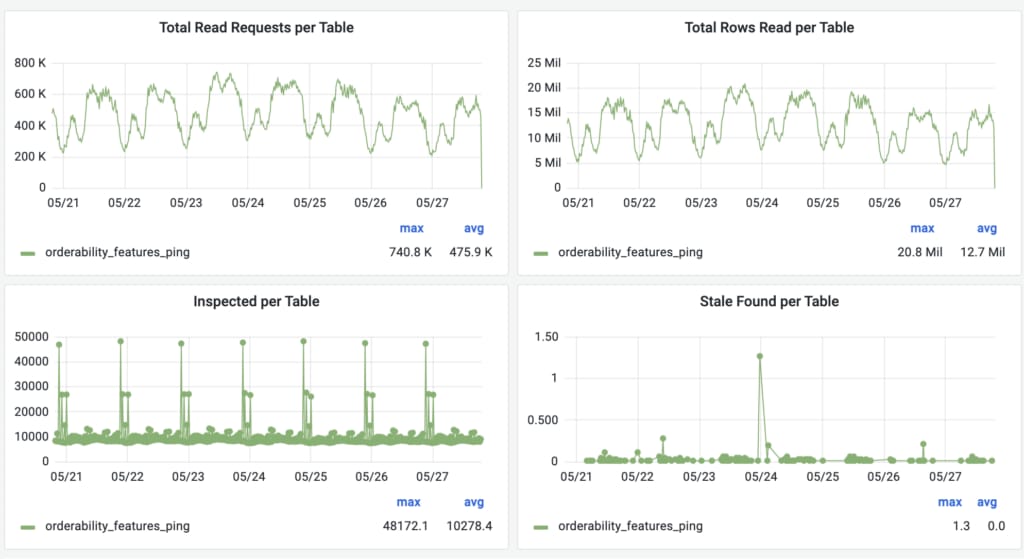

To measure the current cache staleness, quantify improvements, find potential bugs, or allow us to reason about whether the TTL can be further increased, we’ve built a system called Cache Inspector. It’s based on the same CDC pipeline, Flux, which tails the same MySQL binlogs with an induced delay of one minute (to let things stabilize after the writes). Instead of invalidating or populating the cache, the tailer compares the values obtained from the binlog events with those currently stored in the cache. It then exports metrics such as the number of entries inspected, the number of stale entries found, the per-table mismatch rate, histogram of staleness observed, and more.

As can be seen from the image above, the number of stale values detected in a span of week is completely negligible compared to the total number of rows written to or read from the orderability_features_ping table. The new cache invalidation flow provided us with much stronger consistency guarantees, and the addition of Cache Inspector allowed us to measure and compare its efficiency. This, in turn, allowed us to increase the TTL for this table all the way to 24 hours, pushing the cache hit rate above 99.9%!

Conclusion

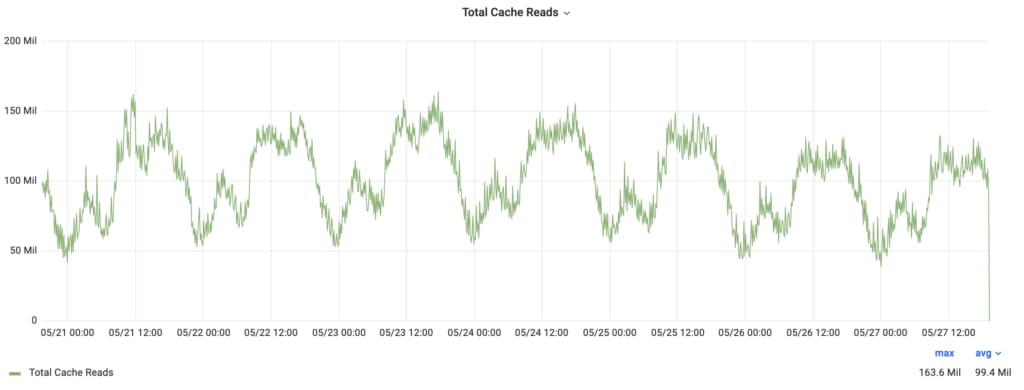

As of today, during peak hours, CacheFront is serving more than 150 million rows per second. Through years of continuous improvements, we’ve added many features to CacheFront to make it better and stronger:

- Adaptive timeouts, negative caching, pipelined-reads—for driving low latencies

- Sharding, cross region replication, and cache warming—for improving resilience

- Lua scripts, TTLs, and invalidations through CDC pipeline—for improving consistency

- Compare-cache mode and Cache Inspector—for observing and measuring staleness

- Circuit breakers—for dealing with unhealthy nodes

- Connection rate limiters—for preventing connection storms

- Compression—for reducing memory footprint, network bandwidth, and CPU utilization

With the addition of automatic cache invalidations on writes to strengthen CacheFront’s consistency guarantees—we think we’ve achieved a truly state-of-the-art integrated caching infrastructure at Uber.

If you like challenges related to distributed systems, databases, storage, and cache, please explore and apply for open positions here.

Cover Photo Attribution: Time-lapse Photography of Brown Concrete Building by zhangkaiyv

Oracle, Java, MySQL, and NetSuite are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

Redis is a trademark of Redis Labs Ltd. Any rights therein are reserved to Redis Labs Ltd. Any use herein is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and Uber.

Stay up to date with the latest from Uber Engineering—follow us on LinkedIn for our newest blog posts and insights.

Eli Pozniansky

Eli Pozniansky is a Sr Staff Engineer in the Core Storage team at Uber. He’s the tech lead and one of the main developers of both CacheFront and Flux, responsible for leading the design, development, and rollout to production of both projects from their inception to serving hundreds of millions of cache reads per second.

Preetham Narayanareddy

Preetham Narayanareddy is a Staff Software Engineer in the Core Storage team at Uber. He’s worked on the design and implementation of many Docstore and CacheFront features and is focused on creating customer-centric products that enhance user experiences.

Posted by Eli Pozniansky, Preetham Narayanareddy