PerfInsights: Detecting Performance Optimization Opportunities in Go Code using Generative AI

22 July / Global

Introduction

At Uber, back-end service efficiency directly influences operational costs and user experience. In March 2024 alone, the top 10 Go services accounted for multiple millions of dollars in compute spend, an unsustainable amount that underscored the need for systematic performance tuning.

Traditionally, optimizing Go services has required deep expertise and significant manual effort. Profiling, benchmarking, and analyzing code could take days or even weeks, making performance tuning prohibitively expensive and non-trivial for most teams.

PerfInsights began as an Uber Hackdayz 2024 finalist and has since evolved into a production-ready system that automatically detects performance antipatterns in Go services. It combines runtime CPU and memory profiles with GenAI-powered static analysis to pinpoint expensive hotpath functions and recommend optimizations.

The results have been transformative. Tasks that once required days now take hours. Engineers can now deliver high-impact performance improvements without needing specialized knowledge of compilers or runtimes.

What distinguishes PerfInsights is its emphasis on precision and developer trust. Beyond identifying optimization opportunities, PerfInsights validates them using large language model (LLM) juries to reduce hallucinations and increase confidence in its suggestions. With hundreds of diffs already generated and merged into Uber’s Go monorepo, PerfInsights has turned optimization from a specialist endeavor into a scalable, repeatable practice.

How It Works

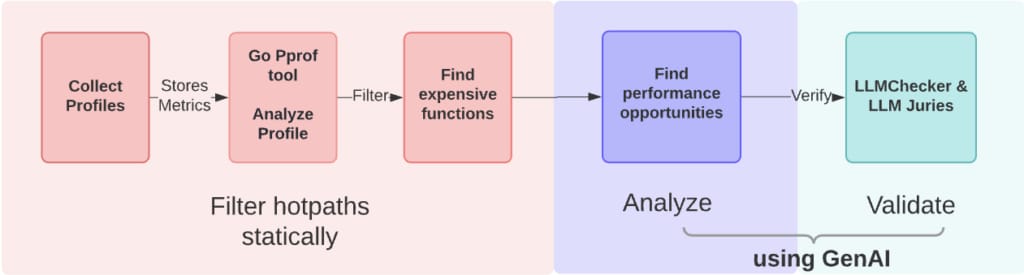

PerfInsights’ optimization pipeline consists of two main stages: profiling-based function filtering and GenAI-driven antipattern detection. Together, these components surface high-impact optimization opportunities with minimal developer effort.

Filtering Hotpath Functions

PerfInsights uses CPU and memory profiles collected from production services by Uber's daily fleet-wide profiler during peak traffic periods. For each service, it identifies the 30 most expensive functions by flat CPU usage, based on the observation that these functions account for the majority of CPU usage. Additionally, if runtime.mallocgc (the Go runtime's memory allocation function) accounts for more than 15% of CPU time, the service is spending a large share of its CPU allocating memory, so PerfInsights also analyzes memory profiles to uncover potential allocation inefficiencies.
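As a rough illustration of this filtering step, the Go sketch below ranks functions by flat CPU share and checks the runtime.mallocgc threshold. The FuncSample type and helper names are hypothetical, the sketch assumes profile rows have already been parsed into flat CPU fractions, and it assumes the 15% check uses mallocgc's flat share. It is not PerfInsights' actual implementation.

```go
package main

import (
	"fmt"
	"sort"
)

// FuncSample is a hypothetical, simplified profile row: a fully qualified
// function name and its flat CPU share as a fraction (0.0 to 1.0).
type FuncSample struct {
	Name string
	Flat float64
}

// topNByFlat returns up to n functions with the highest flat CPU share.
// It sorts a copy, so the caller's slice is left unchanged.
func topNByFlat(samples []FuncSample, n int) []FuncSample {
	sorted := make([]FuncSample, len(samples))
	copy(sorted, samples)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].Flat > sorted[j].Flat })
	if len(sorted) > n {
		sorted = sorted[:n]
	}
	return sorted
}

// needsMemoryAnalysis reports whether runtime.mallocgc's flat CPU share
// exceeds threshold, which would trigger memory-profile analysis.
func needsMemoryAnalysis(samples []FuncSample, threshold float64) bool {
	for _, s := range samples {
		if s.Name == "runtime.mallocgc" {
			return s.Flat > threshold
		}
	}
	return false
}

func main() {
	samples := []FuncSample{
		{Name: "runtime.mallocgc", Flat: 0.18},
		{Name: "example.com/svc/handler.Encode", Flat: 0.12},
		{Name: "example.com/svc/handler.Lookup", Flat: 0.05},
	}
	fmt.Println(topNByFlat(samples, 30)) // keep the top 30, as described above
	fmt.Println(needsMemoryAnalysis(samples, 0.15))
}
```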

To focus the analysis, PerfInsights applies a static filter that excludes open-source dependencies and internal runtime functions. This step trims noise from the candidate set, ensuring downstream analysis focuses only on service-owned code that’s most likely to benefit from optimization.
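A minimal sketch of such a static filter, assuming service-owned code can be recognized by its module path prefix. The prefix example.com/svc, the dependency name, and the helper names are hypothetical; a real filter may use different ownership rules.

```go
package main

import (
	"fmt"
	"strings"
)

// isServiceOwned reports whether a fully qualified function name belongs to
// the service itself. Hypothetical rule: Go runtime functions are always
// excluded, and anything outside the service's module path is treated as a
// dependency.
func isServiceOwned(funcName, modulePath string) bool {
	if strings.HasPrefix(funcName, "runtime.") {
		return false
	}
	return strings.HasPrefix(funcName, modulePath+"/") ||
		strings.HasPrefix(funcName, modulePath+".")
}

// filterServiceOwned keeps only service-owned functions, preserving order.
func filterServiceOwned(funcNames []string, modulePath string) []string {
	var kept []string
	for _, name := range funcNames {
		if isServiceOwned(name, modulePath) {
			kept = append(kept, name)
		}
	}
	return kept
}

func main() {
	names := []string{
		"runtime.mallocgc",
		"github.com/example/lib.Parse",
		"example.com/svc/handler.(*Server).Encode",
	}
	fmt.Println(filterServiceOwned(names, "example.com/svc"))
}
```

Requiring a "/" or "." right after the module path keeps a sibling such as example.com/svcother from being mistaken for the service's own code.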

Detecting Antipatterns with GenAI

At the core of PerfInsights’ detection engine is a curated catalog of performance antipatterns, informed by the Go Foundations team’s past optimization work. These patterns reflect the most common sources of inefficiency encountered across Uber’s Go services and align closely with best practices from Uber’s Go style guide. They include issues such as unbounded memory allocations, redundant loop computations, and inefficient string operations.
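For instance, a redundant loop computation might look like the hypothetical Go function below, where strings.ToLower(prefix) is recomputed on every iteration even though its result never changes. Hoisting the call out of the loop is the kind of rewrite such a catalog entry describes. The example is illustrative and not drawn from Uber's codebase.

```go
package main

import (
	"fmt"
	"strings"
)

// countPrefixedSlow recomputes strings.ToLower(prefix) on every iteration,
// even though prefix never changes inside the loop.
func countPrefixedSlow(words []string, prefix string) int {
	n := 0
	for _, w := range words {
		if strings.HasPrefix(strings.ToLower(w), strings.ToLower(prefix)) {
			n++
		}
	}
	return n
}

// countPrefixedFast hoists the loop-invariant call so it runs once,
// not once per element.
func countPrefixedFast(words []string, prefix string) int {
	lowerPrefix := strings.ToLower(prefix)
	n := 0
	for _, w := range words {
		if strings.HasPrefix(strings.ToLower(w), lowerPrefix) {
			n++
		}
	}
	return n
}

func main() {
	words := []string{"GoLang", "gopher", "Rust"}
	fmt.Println(countPrefixedSlow(words, "GO"), countPrefixedFast(words, "GO"))
}
```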

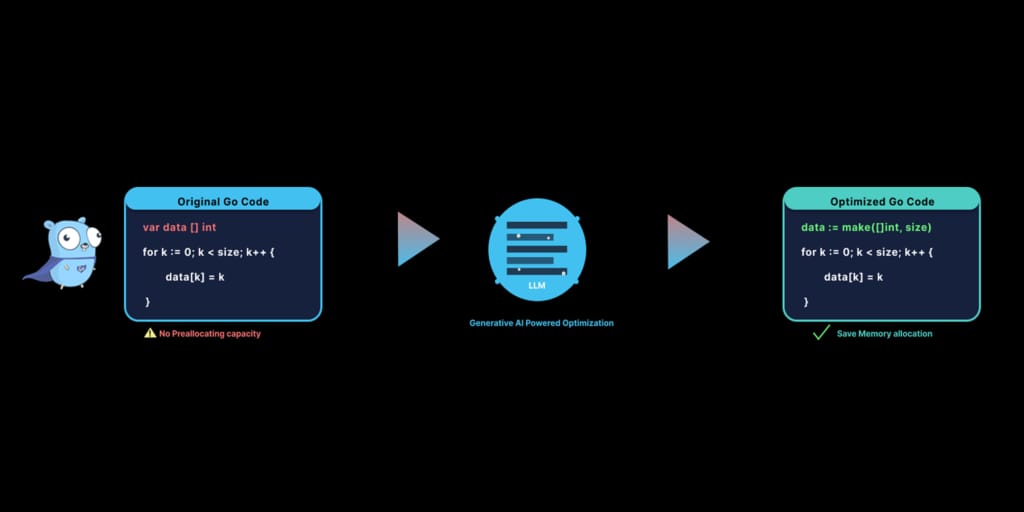

PerfInsights applies a two-stage detection process. Once hotpath functions are identified, PerfInsights passes their full source code and a list of antipatterns to an LLM for analysis. By combining profiling context with pattern awareness, the model can pinpoint inefficient constructs with high precision. For example, if a function appends to a slice without preallocating capacity, the LLM flags the behavior and recommends a more performant alternative.
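The slice example can be illustrated with two hypothetical Go functions. The first grows the slice through repeated append calls, which may reallocate and copy the backing array as it grows; the second preallocates capacity with make because the final length is known up front.

```go
package main

import "fmt"

// squaresNoPrealloc appends to a nil slice, so append may reallocate and
// copy the backing array several times as the slice grows.
func squaresNoPrealloc(nums []int) []int {
	var out []int
	for _, n := range nums {
		out = append(out, n*n)
	}
	return out
}

// squaresPrealloc sizes the backing array once, because the output has
// exactly one element per input.
func squaresPrealloc(nums []int) []int {
	out := make([]int, 0, len(nums))
	for _, n := range nums {
		out = append(out, n*n)
	}
	return out
}

func main() {
	fmt.Println(squaresNoPrealloc([]int{1, 2, 3}), squaresPrealloc([]int{1, 2, 3}))
}
```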

To boost confidence in its findings, PerfInsights layers two forms of validation: LLM juries and LLMCheck. LLMCheck is a framework designed to catch false positives by running through several domain-specific rule-based validators. LLMCheck also logs metrics on detection accuracy, tracking failure rates and signaling potential model drift. This dual-validation strategy has dramatically improved precision, reducing false positives from over 80% to the low teens.

Antipattern and Detection Strategy

In our initial attempts, single-shot LLM-based antipattern detection produced inconsistent and unreliable results: responses varied between runs, included hallucinations, and often contained non-runnable code. To address this, we first improved reliability by scoring only on detected antipatterns. We then introduced several targeted prompt strategies to enhance accuracy.

Few-Shot Prompting

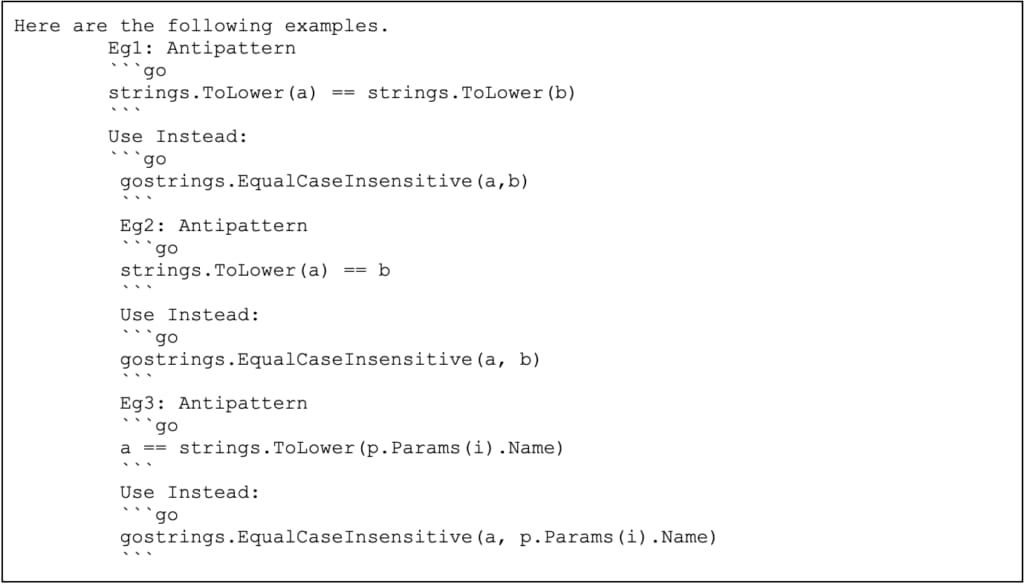

Few-shot prompting involves including a handful of illustrative examples in the prompt, enabling the GenAI model to generalize more effectively to new or less familiar cases and deliver more accurate results.

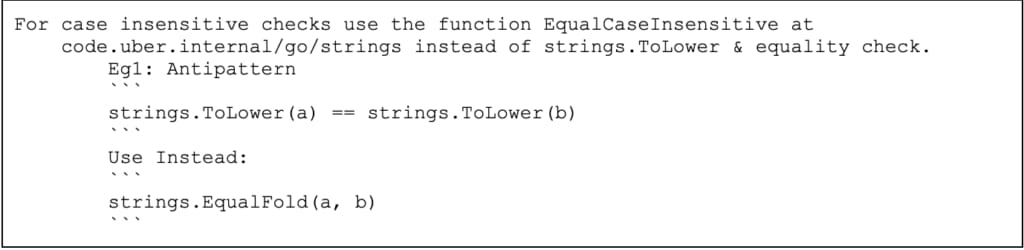

Here’s an example of prompt tuning to fix inaccuracy in detection:

Antipattern: Use strings.EqualFold(a, b) instead of strings.ToLower(a) == strings.ToLower(b) for more efficient case-insensitive comparison.

Issue: The model flagged this antipattern even though the code contained no case-insensitive string comparison.

Fix: Add few-shot prompting.
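In Go, the antipattern and its fix look like this (function names are hypothetical). strings.EqualFold compares the strings under Unicode case folding without allocating, while strings.ToLower may allocate a new string for each operand. The two are not equivalent for every Unicode input, so non-ASCII data deserves a quick check after the rewrite.

```go
package main

import (
	"fmt"
	"strings"
)

// sameNameSlow lowercases both operands before comparing; strings.ToLower
// may allocate a new string for each operand.
func sameNameSlow(a, b string) bool {
	return strings.ToLower(a) == strings.ToLower(b)
}

// sameNameFast compares under Unicode case folding without allocating.
func sameNameFast(a, b string) bool {
	return strings.EqualFold(a, b)
}

func main() {
	fmt.Println(sameNameSlow("Gopher", "GOPHER"), sameNameFast("Gopher", "GOPHER"))
}
```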

Old prompt:

New prompt with few-shot examples:

Tailoring the Model’s Role and Audience

Specifying both the model's role and its audience as Go experts helps the LLM focus on advanced, relevant details, making its answers more accurate and appropriate for expert-level users.

Ensuring Output Quality

Another prompt strategy is to ask the model to test its results for reliability and to ensure that its suggested antipattern fixes are runnable.

Writing Clear and Focused Prompts

These prompt-writing tactics help the LLM interpret instructions correctly, avoid confusion, and preserve context:

- Use very specific, positive instructions for improved reliability (avoid using “don’t” in instructions)

- Use one prompt per antipattern to conserve context and break down complex tasks into simpler ones

- Separate prompts for detection and validation

- Incentivize and penalize the model for correct/incorrect answers, respectively

Confidence Scores

Asking the LLM to report a confidence level in its response to each prompt encourages the model to reason more carefully.
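One way to make confidence levels usable downstream is to request a structured response. The sketch below assumes a hypothetical JSON shape with a self-reported confidence between 0 and 1 and decodes it with the standard encoding/json package; the field names are illustrative, not PerfInsights' actual schema.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Finding is a hypothetical structured answer requested from the model.
type Finding struct {
	Present    bool    `json:"present"`
	Fix        string  `json:"fix"`
	Confidence float64 `json:"confidence"` // self-reported, 0.0 to 1.0
}

// parseFinding decodes a model response and rejects out-of-range confidence.
func parseFinding(raw string) (Finding, error) {
	var f Finding
	if err := json.Unmarshal([]byte(raw), &f); err != nil {
		return Finding{}, err
	}
	if f.Confidence < 0 || f.Confidence > 1 {
		return Finding{}, fmt.Errorf("confidence %v outside [0, 1]", f.Confidence)
	}
	return f, nil
}

func main() {
	f, err := parseFinding(`{"present": true, "fix": "preallocate with make", "confidence": 0.85}`)
	fmt.Println(f, err)
}
```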

Validation of LLM Responses

A core strength of PerfInsights lies in its robust validation pipeline, which includes two complementary systems: LLM juries and LLMCheck. Together, they dramatically reduce false positives and increase trust in the system’s optimization suggestions.

LLM Juries

Rather than trusting a single model’s judgment, PerfInsights leverages a jury of large language models to validate each detected antipattern. These models independently assess whether an antipattern is present and whether the suggested optimization is valid. This ensemble approach mitigates common hallucinations such as incorrectly detecting loop invariants or misinterpreting control structures.
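The post does not specify how jury verdicts are combined. As one plausible rule, assumed here purely for illustration, the Go sketch below accepts a detection only when a strict majority of hypothetical jurors agree both that the antipattern is present and that the fix is valid.

```go
package main

import "fmt"

// Verdict is one hypothetical juror's independent judgment of a detection.
type Verdict struct {
	Model      string
	Present    bool // the antipattern actually occurs in the code
	FixIsValid bool // the suggested optimization is correct
}

// juryAccepts keeps a detection only when a strict majority of jurors agree
// that the antipattern is present and that the fix is valid.
func juryAccepts(verdicts []Verdict) bool {
	votes := 0
	for _, v := range verdicts {
		if v.Present && v.FixIsValid {
			votes++
		}
	}
	return votes*2 > len(verdicts)
}

func main() {
	verdicts := []Verdict{
		{Model: "model-a", Present: true, FixIsValid: true},
		{Model: "model-b", Present: true, FixIsValid: true},
		{Model: "model-c", Present: false, FixIsValid: false},
	}
	fmt.Println(juryAccepts(verdicts))
}
```

Requiring agreement on both questions is a conservative choice: it trades some recall for fewer false positives.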

LLM Checker

Even with LLM juries, the models can still hallucinate, for example by:

- Detecting antipatterns that don’t exist

- Confusing maps with slices and vice versa

- Reporting a loop invariant for a variable that is already outside the loop

- Identifying loop variables in the for statements as loop invariants

PerfInsights adds a second layer of verification with LLMCheck, which runs LLM responses through several domain-specific, rule-based validators. This approach has several benefits:

- Non-generic: Evaluates highly specific, conditional projects.

- Extensible: Supports adding validators for other LLM-based projects.

- Standardized metrics: Tracks reductions in LLM response errors during prompt tuning.

For example, one validator ensures that a reported loop invariant is actually located inside the loop.
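A rule-based validator of this kind could be built on the standard go/parser and go/ast packages. The sketch below is hypothetical: it assumes the LLM reports a line number and a variable name, rejects the claim if that line is not strictly between a loop's braces, and also rejects it if the variable is the loop's own iteration variable (the last failure mode in the list above).

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// validateLoopInvariant checks one hypothetical LLM claim: "the variable
// name on line `line` is a loop invariant". It rejects the claim when the
// line is not strictly between a loop's braces, or when name is that
// loop's own iteration variable.
func validateLoopInvariant(src string, line int, name string) (bool, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "input.go", src, 0)
	if err != nil {
		return false, err
	}
	insideLoop, isLoopVar := false, false
	ast.Inspect(file, func(n ast.Node) bool {
		var body *ast.BlockStmt
		var loopVars []ast.Expr
		switch s := n.(type) {
		case *ast.ForStmt:
			body = s.Body
			if assign, ok := s.Init.(*ast.AssignStmt); ok {
				loopVars = assign.Lhs
			}
		case *ast.RangeStmt:
			body = s.Body
			loopVars = []ast.Expr{s.Key, s.Value} // either may be nil
		default:
			return true
		}
		start, end := fset.Position(body.Lbrace).Line, fset.Position(body.Rbrace).Line
		if line > start && line < end {
			insideLoop = true
			for _, v := range loopVars {
				if id, ok := v.(*ast.Ident); ok && id.Name == name {
					isLoopVar = true
				}
			}
		}
		return true
	})
	return insideLoop && !isLoopVar, nil
}

func main() {
	src := "package p\n\nfunc f(s []int, k int) int {\n\tt := 0\n\tfor i := 0; i < len(s); i++ {\n\t\tm := k * 2\n\t\tt += s[i] * m\n\t}\n\treturn t\n}\n"
	ok, err := validateLoopInvariant(src, 6, "m") // line 6 is "m := k * 2"
	fmt.Println(ok, err)
}
```

Matching on line ranges rather than exact source positions keeps the sketch short, but it means a loop written on a single line is never treated as containing the claim.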

The final output includes a confidence score for the function's optimizability and suggested improvements. These insights are fed into downstream tools for code transformation or manual review by developers. As a result, PerfInsights transforms static profiling data into actionable engineering outcomes within minutes.

Impact and Results

Since its launch, PerfInsights has transformed performance improvements at Uber. By reducing detection time from days to hours and removing the need for deep language expertise, PerfInsights has accelerated engineering velocity and driven scalable compute cost reductions across our Go services. PerfInsights seamlessly integrates with automated downstream tasks, with validated suggestions flowing directly into Optix, Uber’s continuous code optimization tool. This has already produced hundreds of merged diffs, measurably improving performance and generating meaningful cost savings. Further, PerfInsights’ power lies in its language-agnostic design, allowing it to read, understand, and optimize functions across various programming languages.

Boosting Code Health

Our static analysis tool has proven highly effective in improving code quality, with its findings validated through LLMCheck. In February, we averaged 265 validated detections, reaching a single-day high of 500. By June, the figure had dropped to 176—a 33.5% reduction in just four months.

Fewer antipatterns mean a cleaner codebase, shorter review cycles, and faster, safer releases. We will keep refining both the scanner and LLMCheck to drive this number even lower and sustain our commitment to exceptional code quality.

Engineering Effort Saved

Our performance-analysis tool turns what used to be months of niche, manual diagnostics into hours of automated insight, freeing engineers to build rather than troubleshoot.

Previously, uncovering performance antipatterns demanded immense, specialized effort. For instance:

- 5 critical antipatterns once required two engineers (including a Principal Engineer) for a full month (∼320 hours).

- 11 unique antipatterns consumed a four-person Go expert team for a full week (∼160 hours).

- A dedicated Go expert spent six months full-time on related optimization projects (∼960 hours).

These manual efforts alone represent over 1,400 hours for just a handful of cases.

Over four months, the number of antipatterns dropped from 265 to 176, a sustained reduction of 89 antipatterns. Projected over a full year, that's a reduction of 267 antipatterns. Addressing this volume manually would have consumed approximately 3,800 hours of the Go expert team's time. With our streamlined tool, we reduced the engineering time required to detect and fix an issue from 14.5 hours to almost 1 hour of tool runtime, a 93.10% time savings.

The impact goes beyond dollars saved. PerfInsights has also elevated engineering rigor. Dashboards powered by LLMCheck provide teams with visibility into detection accuracy, error patterns, and antipattern frequency. This transparency has helped cut hallucination rates by more than 80%, boosting trust in AI-assisted tooling.
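The effort figures quoted in this section can be checked with simple arithmetic, assuming the four-month reduction continues at the same rate for a full year:

```latex
\begin{aligned}
320 + 160 + 960 &= 1{,}440 \text{ hours of manual effort}\\
265 - 176 &= 89 \text{ antipatterns removed in four months}\\
89 \times \tfrac{12}{4} &= 267 \text{ antipatterns per year}\\
\frac{14.5 - 1}{14.5} &\approx 0.9310 = 93.10\% \text{ time saved per issue}
\end{aligned}
```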

Most importantly, PerfInsights has redefined performance tuning as a continuous, data-driven discipline. Its integration into CI/CD pipelines and day-to-day developer workflows means optimization opportunities are surfaced regularly, not just when something breaks. What was once an expert-led, reactive task is now a proactive loop embedded across Uber’s engineering life cycle.

Lessons Learned

Building PerfInsights into a production-ready system wasn’t just a technical challenge—it was a lesson in integrating GenAI tooling into a complex, high-scale engineering ecosystem. What worked wasn’t just novel modeling techniques, but a relentless focus on developer experience, reliability, and iteration speed.

Prompt Engineering and Model Selection Matter

Early iterations suffered from noisy, inconsistent outputs. The Go Foundations team learned quickly that high input token limits were critical for passing large Go functions without truncation. More importantly, small adjustments in prompt phrasing and contextual cues dramatically influenced accuracy. By encoding explicit antipattern definitions and Go-specific idioms into the prompts, we improved detection precision and reduced false positives by 80%.

Static Filtering Is the Unsung Hero

Before any GenAI magic happens, PerfInsights performs aggressive static filtering using CPU and allocation profiles. By isolating just the top 30 functions by flat CPU percentage within service boundaries, and ignoring OSS or non-relevant runtime functions, we constrained the search space. This pre-processing transformed what could have been a brittle AI prototype into a focused optimization assistant that works effectively across services without overwhelming developers with noise.

Validation Pipelines Build Trust

To move beyond demos, we needed to give developers confidence in PerfInsights’ recommendations. With LLMCheck dashboards tracking detection accuracy, false positive reasons, and model regressions, we could quantify improvement and respond to feedback with evidence. As a result, PerfInsights became not just usable, but dependable.

Developers Respond to Clear Wins

Landing the first diff with five-digit savings was a breakthrough moment. Engineers saw that this wasn't theoretical: it worked! That early success helped unlock adoption and feedback loops, and ultimately made PerfInsights part of the engineering toolkit.

Conclusion

PerfInsights marks a turning point in how we approach performance engineering at Uber—from slow, expert-led investigations to scalable, GenAI-assisted optimization. By fusing real-world production data with targeted static analysis and validated LLM workflows, we’ve built a system that delivers meaningful performance wins quickly and reliably.

This shift has already paid dividends: freeing up developer time, lowering compute costs, and expanding access to performance best practices across teams. Tools like PerfInsights show the power of applying GenAI with precision, domain knowledge, and a bias for automation. These aren’t just theoretical benefits—they’re actively improving our systems and driving measurable impact today.


Lavanya Verma

Lavanya Verma is a Software Engineer on the Development Platform team at Uber.

Ryan Hang

Ryan Hang is a Senior Software Engineer on the Go Platform team at Uber.

Sung Whang

Sung Whang is a Staff Software Engineer and Tech Lead Manager of the Go Platform team at Uber.

Joseph Wang

Joseph Wang serves as a Principal Software Engineer on the AI Platform team at Uber, based in San Francisco. His notable achievements encompass designing the Feature Store, expanding the real-time model inference service, developing a model quality platform, and improving the performance of key models, along with establishing an evaluation framework. Presently, Wang is focusing his expertise on advancing the domain of generative AI.

Posted by Lavanya Verma, Ryan Hang, Sung Whang, Joseph Wang